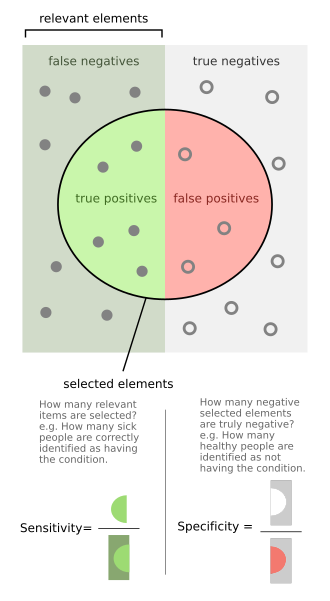

In medicine and statistics, sensitivity and specificity mathematically describe the accuracy of a test that reports the presence or absence of a medical condition. If individuals who have the condition are considered "positive" and those who do not are considered "negative", then sensitivity is a measure of how well a test can identify true positives and specificity is a measure of how well a test can identify true negatives:

- Sensitivity (true positive rate) is the probability of a positive test result, conditioned on the individual truly being positive.

- Specificity (true negative rate) is the probability of a negative test result, conditioned on the individual truly being negative.
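As a minimal sketch of the two definitions above, both rates can be computed from the four cells of a confusion matrix: sensitivity as TP / (TP + FN) and specificity as TN / (TN + FP). The function name `sensitivity_specificity` and the counts below are hypothetical, chosen only for illustration.

```python
from typing import Tuple


def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> Tuple[float, float]:
    """Return (sensitivity, specificity) from confusion-matrix counts.

    tp: truly positive individuals who tested positive
    fn: truly positive individuals who tested negative
    tn: truly negative individuals who tested negative
    fp: truly negative individuals who tested positive
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one truly positive and one truly negative individual")
    sensitivity = tp / (tp + fn)  # P(test positive | truly positive)
    specificity = tn / (tn + fp)  # P(test negative | truly negative)
    return sensitivity, specificity


# Hypothetical study: 100 people with the condition, 200 without it.
sens, spec = sensitivity_specificity(tp=90, fn=10, tn=160, fp=40)
print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

Each rate is conditioned on the true status only, so neither depends on how common the condition is in the tested group.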

If the true status of the condition cannot be known, sensitivity and specificity can be defined relative to a "gold standard test" which is assumed correct. For all testing, both diagnostic and screening, there is usually a trade-off between sensitivity and specificity, such that raising one tends to lower the other.
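The trade-off can be illustrated with a test that produces a numeric score and calls a result positive when the score reaches a cut-off. The sketch below uses invented scores and true statuses (not data from any study) and a hypothetical helper `rates_at`; it assumes that higher scores are more suggestive of the condition.

```python
# Hypothetical test scores paired with the true status (True = has the condition).
scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
has_condition = [False, False, False, True, False, True, False, True, True]


def rates_at(threshold):
    """Return (sensitivity, specificity) when scores >= threshold count as positive."""
    pairs = list(zip(scores, has_condition))
    tp = sum(1 for s, y in pairs if s >= threshold and y)
    fn = sum(1 for s, y in pairs if s < threshold and y)
    tn = sum(1 for s, y in pairs if s < threshold and not y)
    fp = sum(1 for s, y in pairs if s >= threshold and not y)
    return tp / (tp + fn), tn / (tn + fp)


# Raising the cut-off makes the test stricter about calling a result positive.
for threshold in (0.35, 0.55, 0.75):
    sens, spec = rates_at(threshold)
    print(f"threshold {threshold:.2f}: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

With these invented values, a stricter cut-off misses more true cases (lower sensitivity) while wrongly flagging fewer healthy individuals (higher specificity).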

A test which reliably detects the presence of a condition, resulting in a high number of true positives and low number of false negatives, will have a high sensitivity. This is especially important when the consequence of failing to treat the condition is serious and/or the treatment is very effective and has minimal side effects.

A test which reliably excludes individuals who do not have the condition, resulting in a high number of true negatives and low number of false positives, will have a high specificity. This is especially important when people who are identified as having a condition may be subjected to more testing, expense, stigma, anxiety, etc.

The terms "sensitivity" and "specificity" were introduced by American biostatistician Jacob Yerushalmy in 1947.[1]

Laboratory quality control uses different definitions: "analytical sensitivity" is the smallest amount of a substance in a sample that an assay can accurately measure (synonymous with the detection limit), and "analytical specificity" is the ability of an assay to measure one particular organism or substance rather than others.[2] This article, however, deals with diagnostic sensitivity and specificity as defined above.

- [1] Yerushalmy J (1947). "Statistical problems in assessing methods of medical diagnosis with special reference to x-ray techniques". Public Health Reports. 62 (40): 1432–39. doi:10.2307/4586294. JSTOR 4586294. PMID 20340527. S2CID 19967899.

- [2] Saah AJ, Hoover DR (1998). "[Sensitivity and specificity revisited: significance of the terms in analytic and diagnostic language]". Ann Dermatol Venereol. 125 (4): 291–4. PMID 9747274.