Pending changes analyzed, Foundation report, Main page bias, brief news

Analysis of Pending changes trial published

The full analysis of the Pending changes trial has been published. The data are an elaboration on an earlier preliminary analysis (see previous story). The full table is here, and a compacted version focusing on anon revert percentages is here. The data range widely; the two most common percentages are 100 and 0 percent.

Pending changes is a new form of page protection that makes use of the FlaggedRevs extension, allowing editing as usual but giving visiting IPs only the most recent "approved" version of the protected pages, as decided by trusted editors. Flagged revisions has been praised by supporters as an alternative to semiprotection, opening up editing to IPs while still curbing vandalism; on the other hand, it has been criticized as a contradiction to Wikipedia's open editing model.

A straw poll on the future of Pending changes has concluded. In the light of what many consider a confusing poll, several users have posted analyses aiming to clarify the consensus.

Foundation reports book statistics and legal work from July

The Wikimedia Foundation Report for July 2010 has been published, following a lapse in the publication of monthly reports (the April, May and June reports are being worked on). Wikification and other improvement of the version on Meta are invited. Apart from many items previously covered in the Signpost, the report shares some highlights from statistics about the usage of the book tool (which allows readers to compile Wikipedia articles into PDF files and order bound paper copies of them), provided by PediaPress: "From May through July, PediaPress shipped 1,671 printed books to 981 buyers in 46 countries. 38% of books were sold to Germany and 28% to the United States. The feature was also used to generate approximately 85,000 PDF files per day." The report says that the WMF's legal team has "been in contact with both the Apple and Android app stores to ask their assistance in policing trademark-infringing apps" and was separately negotiating with Apple about the Wikimedia trademark use in Apple products. The Foundation contracted an attorney specializing in charities, and "confirmed that there are ongoing structural issues, particularly in Europe, with transferring charitable funds to WMF."

Main page biases?

Last week, two blogs independently examined the choice of topics featured on the English Wikipedia's main page. "Deeply Problematic", a blog about "feminism, and stuff", examined which persons were mentioned in the various sections of the main page on ten different dates during the past year (using the Wayback Machine), finding that 130 of them were men and only 15 were women. Wikipedian Utcursch asked whether there is "Too much bad news on Wikipedia’s main page?" He examined the content of the "In the news" section during August, when it had featured 45 unique news stories. Around 40% of them belonged "to the 'bad news' category (disasters, accidents, wars and terrorist attacks)". In addition, he shared the informal impression that bad news stays longer on the main page because "the new updates are continuously posted, as the casualties keep increasing over a period of time". Utcursch, who is from India, also examined the geographical distribution of the ITN entries, finding "that the 'In the news' section does a decent job of covering stories from all across the world". In related news, a Twitter feed announcing India-related topics from the "Did You Know" (DYK) section has recently been set up (DYKIndia); the underlying software can be adapted to other topic areas too (WikipediaDYKTweeter).

Briefly

- New Wikimedia chapter: On August 31, Wikimedia Estonia was officially recognized by the Foundation as the youngest Wikimedia chapter. (Per the bylaws, its official name is "Mittetulundusühing Wikimedia Eesti", abbreviated as "MTÜ Wikimedia Eesti" ["Wikimedia Estonia non-profit organization"].) According to a start-up grant request, it plans a "Wikipedia in schools" project, collaborations with local municipalities, museums and unions, and the acquisition of texts for Vikitekstid (the Estonian language Wikisource).

- Foundation hiring microblogged: To add transparency to the Wikimedia hiring and working processes, two Wikimedia message accounts, one on Identi.ca and one on Twitter, have been opened. It is hoped these feeds will help Wikimedia reach a broader candidate audience and encourage the open sharing of this information.

- Swedish chapter report: Wikimedia Sverige has released their chapter report for August. The report announces the donation of 104 high resolution images of Vänsterpartiet party election candidates in the upcoming Swedish general election, a photo hunt in Scania and Bergslagen, two new toolserver tools by a WMSE member, a board meeting, media attention relating to the journalist Adam Svanell adding false information into the article about himself, and the passing of the 2,000-article point by the Sorani Wikipedia, the project of speakers of a Kurdish language of that name in Iran and Iraq, which receives most of its traffic from Sweden.

- Sneak peek at the fundraising campaign: The Wikimedia Foundation is organizing its annual fundraising campaign for November and December. The campaign, which will run on banner ads asking for donations placed above articles, has traditionally been the largest single source of revenue for the project. A post on the Wikimedia blog reveals the basis of this year's fundraiser as a 'collaborative "contribution" campaign'. The fundraiser will center on personalizing the messages across the different languages, "recognizing that messages that work in the United States don’t always work worldwide", and on encouraging new users to contribute to the project. Banner-ad brainstorming and testing is ongoing.

- Board minutes: The minutes for the July 8 meeting of the Foundation's Board of Trustees have been published. They largely concern discussions and decisions about internal Board matters, informed by a report of the Board's "Governance Committee" and aided by Sal Giambanco from the Omidyar Network. The Board will reduce the number of its face-to-face meetings from four to three for the coming year; the next one will be held on October 8–9 in San Francisco. "After a robust discussion", the Governance Committee was tasked with creating "recommendations for Board member evaluation to determine the effectiveness of appointed and elected Trustees". A consultant and project manager (Jon Huggett) was hired and a Board workgroup was formed for the "Movement Roles II" project, a process to clarify "the roles of the various organizational structures in the Wikimedia movement", especially chapters.

- Wikimedia and free book publishing: The functionality to import books from Wikibooks was recently added to Booki, a collaborative book publishing platform by the FLOSS Manuals foundation. Last week, both Booki and FLOSS Manuals' "Book Sprint" projects received an endorsement from the Wikimedia Foundation's Deputy Director Erik Möller, who called them "important new approaches in knowledge production and dissemination." (See the recent story of a project that applied the book sprint method to improve a Wikipedia article.)

- Study on controversial content: Robert Harris, the consultant tasked by the Foundation with studying the issue of potentially offensive content following controversial image deletions on Commons (see previous story), posted an email exchange about the subject with a librarian from the University of Northern British Columbia. As summarized by Harris: "In the library community, the key concept is something they call Intellectual Freedom (IF), and they've been discussing this concept and its ramifications for many decades (some of the key policies in this area were enunciated in the 1950s). You can see that, by and large, the library community is very concerned to preserve and protect IF, although there have been, and continue to be, many challenges to their efforts in this regard." According to the initial announcement, Harris' recommendations will be presented to the Board at its next meeting in October.

WikiLeaks and Wikipedia; Google–WP collaboration to translate health information

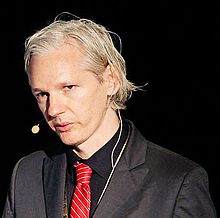

Difficult relationship between WikiLeaks and Wikipedia

As reported in last week's "In the news", the continuing media attention for Julian Assange's website WikiLeaks (which recently published thousands of documents revealing what The Guardian newspaper called "the true Afghan war") has had an adverse effect on Jimmy Wales and Sue Gardner,[1] who both reported that they frequently had to deal with people who mistakenly assume that they are involved in Wikileaks. In an interview with The Independent, Wales said he was "fed up" with the volume of e-mails he was receiving that attacked him for "putting the lives of thousands of US troops at risk", but that his reaction was to "just roll my eyes, chuckle to myself and tell them they've got the wrong man." Somewhat in agreement with Assange, who had responded to Gardner's statements by pointing out that "wiki" was around a long time before "Wikipedia", Wales said that he could not just copyright the word 'wiki', because he did not want to prevent other people from starting wikis. He said it is not the Wikimedia Foundation's "style" to "come to blows with Wikileaks in court". He did say, however, that he thinks "the issue of having to protect our name is something I can anticipate coming up again in the future."

Both Wales' concern and the public confusion between the two "web behemoths" (The Independent) are nothing new. On January 3, 2007 (the day of the first public report of Wikileaks' existence), the domains wikileaks.com, wikileaks.us, wikileaks.biz and wikileaks.net were registered, apparently by Wales out of concern about the name. To this day, they belong to Wales' company Wikia, although they now appear to be used by Wikileaks in addition to its main site wikileaks.org.

Around that time, Wikileaks described itself as "an uncensorable version of Wikipedia for untraceable mass document leaking and analysis."[2] According to internal emails published by Wikileaks co-founder John Young on his own leaking site, Cryptome, the Wikileaks founders deliberately used Wikipedia's fame to draw attention to themselves among "1000 other organizations jostling for time" at the January 2007 World Social Forum in Kenya, where Assange lived around that time: "even those [people who aren't net-savvy] can't but help hearing about the wikpedia."

Back to 2010: In a post on his "The Wikipedian" blog some weeks ago, titled "WikiLeaks: No Wiki, Just Leaks", William Beutler (User:WWB) speculated "that the site was so named to borrow from the credibility enjoyed (and earned) by Wikipedia". He observed that while Wikileaks was running on the MediaWiki software, all participatory functions of the software seemed to be disabled, along with those providing transparency (history and recent changes).

However, Assange initially did intend to model Wikileaks after Wikipedia's collaborative processes, according to his remarks at a symposium at the Berkeley Graduate School of Journalism on April 18 (video, from 36:30, or on YouTube), where he explained what made him change his mind:

| “ | It was our hope initially, because we had vastly more material than we could possibly go through, that if we just put it out there, people would summarize it themselves. That very interestingly didn't happen – quite an extraordinary thing. Our initial idea was: Look at all those people editing Wikipedia. Look at all the junk that they are working on. If you give them a fresh classified document about the human rights atrocities in Fallujah that the rest of the world has not seen before – a secret document – surely all these people that are busy working on articles about history and mathematics and so on, and all those bloggers ..., will step forward, given fresh source material, and do something? No! It's all bullshit. In fact, people write about things in general, if it's not part of their career, because they want to display their values to their peers who are already in the same group. Actually they don't give a fuck about the material, that's the reality. | ” |

Wikipedia has at least twice been the subject of leaks published by Wikileaks. In 2009, an archive file was uploaded to Wikileaks that was described as containing postings from a private mailing list of some Wikipedia editors, several of whom were later sanctioned by ArbCom due to their involvement in the list. In November of the same year, during a debate in the German public about deletionism on Wikipedia (see Signpost coverage: "German Wikipedia under fire from inclusionists"), Wikileaks published a copy of one of the articles in question, which had been deleted for notability reasons. And in December 2007, Assange had generated media coverage with a report titled Wikileaks busts Gitmo propaganda team, largely containing Wikipedia edits carried out under an IP belonging to the Joint Task Force Guantanamo (but that connection had already been noted four months earlier by User:Computerjoe).

At the same time, Wikileaks and Assange appear to have been concerned about their coverage on Wikipedia. On April 9, a Twitter message by Wikileaks read "WL opponents seem to have created Julian's Wikipedia page. For ethical reasons we can't edit. Please fix". (Privatemusings, who had started the article Julian Assange, replied that he was "not sure where that's coming from".) On April 21, Assange posted comments on the article's talk page itself, again acknowledging that "it would not be ethical for me to edit this article directly" but stating that "The nature of my work, exposing abuses by powerful organizations and nation states, tends to attract attacks on my person as a way to color debate. The history of this page has numerous examples" (without naming any) and voicing concern that a portrait photo used in the article "tends to undermine my message" (he later donated a publicity image under a free license). On August 16, Assange said "there are frequent attempts by military apologists and others to manipulate our Wikipedia pages".

Major Google–Wikipedia translation project: Health Speaks

Fast-forward to 2010, and Google.org has announced on its home page a new collaboration with the English Wikipedia's WikiProject Medicine on an initiative called Health Speaks. Haroon says the aim is to support pilot translation projects in which "volunteers translate health articles into [Arabic, Hindi and Swahili] and publish them online on Wikipedia for all to access". The project will explore active cooperation between professional medical editors (hired by Google) and any interested Wikipedians to further improve the quality of articles selected by the Google Foundation. Haroon says, "We have chosen hundreds of good quality English language health articles from Wikipedia that we hope will be translated with the assistance of Google Translator Toolkit, made locally relevant, reviewed and then published to the corresponding local language Wikipedia site." The countries targeted are Kenya, Egypt, Tanzania, and India; among those encouraged to register for volunteer translation and translation review are medical, nursing and public health students; health professionals; health NGO employees; and other students.

Volunteers will work from a dedicated site for each language, with access to an ongoing table of articles and their translators'/reviewers' usernames. Volunteers may use the online Google Translator Toolkit to assist with their task, and can register for news updates and information about training events. For the first two months, Google will donate 3 cents (US) to three non-profit medical institutions in India, Egypt, and east Africa for each English word translated, up to $50,000 each; these institutions will provide local support for the program.

The collaboration follows activities by Google.org's parent company Google, which likewise uses the Translator Toolkit to translate Wikipedia articles (Signpost coverage: Google uses machine translation to increase content on smaller Wikipedias and Wikipedia, Google Translate and Wikimedia's India strategy).

Wikipedia's health-science experts comment

Tim Vickers is a US-based biochemist and a key player in our Google Project Taskforce. He says the main issues for Wikipedia will be "improving the quality of the articles as much as possible before they are translated, and trying to tone down the US-centred approach we commonly take to topics." Fvasconcellos is a professional translator and an experienced member of both WP:MED and WikiProject Pharmacology. As a translator, he is glad of two things: the first is Google's choice to use Google Translator Toolkit rather than Google Translate. "The Toolkit is basically a postediting suite, and thus allows articles to be translated more easily and quickly than if the job was done manually, while reducing much of the inevitable inaccuracy of machine translation." The second thing is Google's use of "folks who know what they're doing—the usernames suggest many translators and reviewers are medical professionals. Professional input is of the utmost importance when translating medical and health-related content, as the pitfalls and potential implications of inaccuracy can be far more significant than in other fields.... when you're talking about drug dosage, symptoms, or how to prevent infectious diseases, quality assurance can become a matter of life or death."

Vasconcellos says, "I do think there is a risk that talk pages will become magnets for misguided medical advice, possibly dangerous anecdotal information, and quackery. This happens in the English Wikipedia all the time [and] should certainly be on the minds of all those involved. What's the user base like? Will knowledgeable users be watching the articles for misguided edits, and the article talk pages for inappropriate content?" Kilbad (of the Dermatology task force) says: "As the coverage of medicine-related content continues to grow and improve on Wikipedia, the number of talk-page requests for medical advice will likely increase. [If this occurs, it will be] important to make Wikipedia's medical disclaimer (WP:MEDICAL) a prominent feature of these pages to discourage the giving and/or asking of advice". However, Tim Vickers feels the "talk-page advice" problem is not that common on enwiki pages, and is likely to be "similarly rare in other languages. Most of the important medical articles are on multiple watchlists, so this won't be a big job."

To Vasconcellos, there are more significant issues. "What will happen when well-meaning users start adding sources that enwiki would not use due to quality concerns? Arguments on the appropriateness of sources happen every day at enwiki. How will they be handled? Will they happen at all, or will articles grow and evolve in an unorganized fashion, with questionable content added and left to 'fester' unchecked?" And "how will traditional medical practices be dealt with? How do these Wikipedias deal with this sort of information at the moment? Is it allowed? Is it given equal footing with (Western) science-based content? We strive to make the medical content of enwiki evidence-based, and by that I mean based on recent, high-quality scientific sources. Is that practice followed at Hindi Wikipedia, for instance, and if not, should it be? Should content based on traditional medical views of malaria or diabetes, for instance, be added/allowed/encouraged for cultural reasons?"

For the Wikimedia Foundation, working through these and related issues is likely to make Health Speaks a fascinating venture into cross-cultural and cross-linguistic management. If it succeeds, Google hopes the program will grow into a larger scheme involving more languages and more articles.

Briefly

- Indian Wikimedia office: An article by Indian business newspaper Live Mint (Wikimedia to open office in India in six months) reported on the Wikimedia Foundation's plans to open its first ever presence outside the US. The article quoted chief global development officer Barry Newstead, who will visit India this month, and Board member Bishakha Datta. A small local team will be built for the office. (The WMF already announced a job opening for a "National Program Director, India", as reported in last week's News and notes.) The office will likely be in Mumbai, in Bangalore (where the newly formed Indian Wikimedia chapter is located) or in Pune. In an interview with The Guardian, the WMF's Executive Director Sue Gardner addressed concerns that its presence in India (a country that recently threatened to ban BlackBerry communication devices) would expose Wikimedia to state censorship pressure: "Our stance is that we won't compromise. We haven't, for example, made any concessions to the Chinese government and we were blocked there up to the [2008 Beijing] Olympics. As a result, our share is still very, very small in China."

- Jimbo on collaboration skills: Jimmy Wales was interviewed by Howard Rheingold about collaboration skills in an 11-minute video. Wales said much of such skills consisted of basic "kindergarten ethics", but described three things that he had "learned over the years, the hard way": Assuming good faith, but realizing that sometimes a group needed to hold firm to its principles and ask malicious members to leave, in which case they should be offered alternatives, i.e. other projects that might be more suited to their goals. Wales named two conditions for starting successful online collaboration projects: First, it "must be immediately obvious" to a new contributor how to help out or what to do, a condition fulfilled by Wikipedia. He said that his Campaigns Wikia project failed for this reason, as its stated purpose was ambiguous. Secondly, the project has to have a worthwhile goal, even if this needs to be understood in a wider sense such as in the case of the Muppets wiki.

- COI editing advice: Manny Hernandez, a US activist who founded the Diabetes Hands Foundation, gives other non-profit organizations some quick tips on how to get an article on Wikipedia.[3]

- Houellebecq plagiarizes Wikipedia: The new novel of French writer Michel Houellebecq contains several passages that have been plagiarized from Wikipedia, according to news website Slate.fr[4]. It noted the similarity of three passages from the book with the French Wikipedia's articles about Frédéric Nihous, the housefly and the town Beauvais. Wikimedia France stated that the passages indeed appeared to have been borrowed from Wikipedia, although the text was of a certain "banality". Slate.fr quoted the chapter's president, Adrienne Alix, who clarified the relevant legal issues concerning free licenses.

- Singaporean wiki course: The Chronicle of Higher Education reported on a course in wiki-based collaboration for Singaporean students (Wiki project brings some student wariness at Singapore Management U.).

- Wikipedia more credible than newspapers: A study about information usage and Web 2.0 in Austria, Slovenia and Croatia, commissioned by telecommunications firm Telekom Austria (English press release), surveyed 500 Internet users in each country and found that among young users (born 1980 or later), Wikipedia is regarded as at least as credible as traditional news sources: "Wikipedia is turning into the new TV and the new newspaper." In Austria, 63 percent regarded Wikipedia as "credible" or "very credible", compared to only 53 percent for print media and 50 percent for online newspapers and magazines, but slightly behind TV, which 65 percent regarded as trustworthy.[5]

- The Correctional Service of Canada is investigating a Wikipedia entry made on a government-owned computer renaming Canada's Official Languages Act as the "Quebec Nazi Act" (Fed to Investigate 'disgusting' wikipedia entry- Canada).

- News agency Associated Press asked Jimmy Wales about his opinions on the future of paid news content last week. He was skeptical about paywalled newspaper websites, but optimistic about paid apps on the iPad or Kindle. Concerning Wikipedia, "he joked about how his relatively modest means has misled people into thinking he's an ideological opponent of commerce. Far from it; his new [sic] venture Wikia, which builds online communities around shared interests, is ad-supported. 'I don't think it's right for Wikipedia but I think it's perfectly fine for other contexts,' he said. 'When you go to Wikipedia you should be there to learn, reflect, think contribute in a positive way ... We don't need to commercialize it.'"

Cognitive surplus, by Clay Shirky

Wikipedia is a prime example of such a collaboration, and Shirky was one of its earliest observers: In his April 2003 talk "A group is its own worst enemy", where he warned of the fundamental group dynamics that tend to threaten online communities, he singled out Wikipedia as a group that had avoided such threats. In his 2008 book Here Comes Everybody, Wikipedia figures as a key example. (Shirky has been on the Wikimedia Foundation's Advisory Board since 2007 and was consulted for the WMF's Strategic Planning project.) In Cognitive surplus, Wikipedia is less prominent, but the book is filled with insights, anecdotes and research results that make it an excellent read for Wikimedians who want to reflect on the successes and challenges of the Foundation's projects.

Wasting or using free time

Cognitive surplus grew out of an April 2008 talk (the transcript provocatively titled "Gin, television, and social surplus"). Shirky is following a decades-old tradition of criticizing TV as a passive medium, poignantly expressed in his exchange with a TV producer to whom he had described the intense collaboration of Wikipedians:

| “ | she sighed and said, "Where do people find the time?" Hearing this, I snapped and said, "No one who works in TV gets to ask that question. You know where the time comes from." She knew, because she worked in the industry that had been burning off the lion's share of our free time for the last fifty years. | ” |

For Shirky, even playing (collaborative) computer games such as World of Warcraft is a more participatory activity than watching TV; but not all collaborations enabled by the Internet are equally beneficial to society. He contrasts examples such as Wikipedia and Ushahidi (a platform where Kenyan citizens collaborated to track outbreaks of ethnic violence) with Icanhascheezburger.com, a website for making Lolcat images, which he calls a candidate for 'the stupidest possible creative act', but at least one that bridges the gap "between doing nothing and doing something" and has a collaborative element. (While writing the book, Shirky considered "LOLcats as soulcraft" as its title; Ragesoss offered an interpretation.) Shirky calls the making and sharing of Lolcats a "communal" collaboration, i.e. one that is mainly benefiting participants, while communities such as Wikipedia generate "civic" value for the outside society. One of the book's main points is that although technological advances more or less guarantee there will be communal activity, transforming cognitive surplus into civic value requires active effort. In his NYC talk, he framed this difference in required effort as a spectrum from "Let it happen" to "Make it happen"; a notion that Sue Gardner quoted last week with respect to the different levels of facilitation required from the Foundation regarding the already established English Wikipedia and the still developing Hindi Wikipedia.

The second chapter examines the new means by which people can aggregate cognitive surplus. The basic theme – that the Internet has radically reduced the cost of communicating, and particularly of publishing – has been elaborated many times; but Shirky still manages to present it in a fresh, clear narrative, by focusing on what he calls "Gutenberg economics", where the act of publication carries a high financial risk and the decision on what to publish is therefore crucial. After remaining in force for the past five centuries, this economic principle is no longer valid. Just as Gutenberg's invention of movable type had precipitated a sharp drop in the costs of making a book, and consequently a drop in the average quality of books ("before Gutenberg, the average book was a masterpiece"), the Internet caused a drop in the average quality of published content, but compensates for this with room for experimentation and diversity, such that "the best work becomes better than what went before".

Motivating and cultivating collaboration

Why exactly do people share and collaborate? Shirky's discussion of several research results from psychology and behavioral economics might be of great interest to Wikimedians. Edward L. Deci's "Soma" experiments found that intrinsic motivations – "the reward that an activity creates in and of itself" – can be "crowded out" by an extrinsic motivation such as a financial reward. Some recent attempts to increase participation in Wikipedia could be interpreted as supplementing intrinsic motivations (editing Wikipedia for fun) with extrinsic motivations such as winning prizes or passing a university course. Might the avoidance of crowding-out effects become an important concern in such projects? Signpost readers might remember the frustration of Muddyb, a Wikipedian from Tanzania and a bureaucrat on the Swahili Wikipedia, about the lack of motivation of the participants in Google's "Kiswahili Wikipedia Challenge" after the prizes had been distributed:

| “ | Nearly all of them are gone now and left a lot of [low quality] articles ... they don’t care because they were there for laptops and other prizes (no need to be rude, but it hurts me pretty bad).[6] | ” |

According to Deci, there are two kinds of "personal" intrinsic motivation: a desire to be autonomous (to be in control of one's actions) and to be competent ("to be good at what we do"). These are complemented and reinforced by two kinds of "social" intrinsic motivation (according to Yochai Benkler and Helen Nissenbaum): a desire for connectedness (membership) and for sharing (generosity). These two pairs – personal and social motivations – are summed up nicely by Shirky in the two messages "I did it" and "We did it", which he says form a feedback loop that applies to Wikipedia and most other uses of cognitive surplus. However, at his NYC keynote Shirky remarked that these four can sometimes also be in competition. For example, the desire for connectedness might make one welcome new members into a collaboration and learn from them, but at the same time this exchange might make one feel less competent than before.

Shirky addresses the "digital sharecropping" criticism formulated by Nicholas Carr, who argues that just as land owners used to exploit farm workers without pay, allowing them only a share of the crops they had grown themselves, today's owners of online platforms reap the value that the participants of online communities generate without pay. Shirky mostly dismisses these complaints, which "arise partly from professional jealousy", by arguing they are a misapplication of Gutenberg economics: the participants are not workers driven by financial incentives (external motivations), but contributors motivated by a desire to share. However, Shirky notes the AOL Community Leader Program, where a "change from a community-driven site to an advertising-driven site" robbed volunteer moderators of such motivations (and prompted a class action lawsuit that concluded this May, after 11 years), as a case where the "digital sharecropping" term is justified. Historians of Wikipedia will recall how concerns over possible advertising on Wikipedia prompted the Spanish fork in 2002.

Chapter four, titled "Opportunity", describes the ways in which such motivations, enabled by the new collaboration tools, lead to successful "social production" (the example that Shirky devotes most room to is the Apache HTTP Server project). One interesting notion here is that of "combinability", further explored in the fifth chapter, which describes the kind of "culture" that according to Shirky must be fostered to enable society to benefit from its cognitive surplus. As a lucid comparison, he describes the Invisible College in 17th-century England, a collaboration of scholars which played an important role in the scientific revolution, later morphing into the Royal Society. Shirky's main point here is that Gutenberg had provided the technical means for this kind of collaboration long before, but it was brought about only by a change in culture – from that of the alchemists, whose secretive attitude made them repeat each other's errors, to the open scientific communication we know today, which enables scholars to combine their knowledge better. Shirky cites economist Dominique Foray, who posits four conditions that must be met for a community to combine knowledge effectively, regarding:

- The size of the community

- The cost of sharing the knowledge

- The clarity of the shared information

- The cultural norms of the recipients

It is clear that the Internet helps to reduce the cost of sharing and to enlarge the number of possible participants. "Clarity" might refer to forms like recipes or standard description formats. Here again, Shirky argues that "culture", the fourth condition, is the most crucial one, relating to "shared assumptions about how [the group] should go about its work, and about its members' relations". The term community of practice has been coined for groups that have developed a certain form of such a culture. Shirky does not mention Wikipedia in this respect (citing free software projects instead), but it is not hard to see that it might be worthwhile to apply Foray's criteria and the notion of a "community of practice" to Wikipedia – British historian Dan O'Sullivan has written an entire book called "Wikipedia: a new community of practice?" (see Signpost review).

In the sixth chapter, Shirky comes back to the difference between projects like Wikipedia and platforms like I Can Has Cheezburger?, or between collaborations that provide civic value for society and those that only reward their members. He revisits a main topic from his 2003 "A Group Is Its Own Worst Enemy" talk: the results of group dynamics researcher W. Bion, who identified three ways in which the emotional needs of participants often derail collaborating groups from pursuing their shared goal (see the "Basic assumptions" section of the Wilfred Bion article). The group can degenerate into blind veneration of an idol (Tolkien in a Lord of the Rings newsgroup), into quasi-paranoid defense against real or perceived outside threats (Microsoft among Linux fans in the 1990s), or into "pairing off" when the members are mostly concerned with forming romantic couples "or discussing those who form them". Groups have to respect the emotional needs of their members (Shirky notes that even an extremely hierarchical group such as the military "is deeply concerned about the soldier's morale"), but if they aim at more and provide civic value, they need to develop some sort of governance. Again, the Wikipedian reader might reflect how Bion's insights relate to Wikipedia policies.

In the final chapter, Shirky ventures to give some more direct advice on how to start successful social media platforms. However, he cautions that it is not possible to formulate an overall strategy for harnessing cognitive surplus – experimentation is still essential to find "the most profound uses of social media".

Assessing Shirky's ideas

The book's basic themes have some inevitable overlap with those of "Here Comes Everybody". However, by introducing the notion of cognitive surplus, Shirky manages to provide a fresh perspective.

Shirky's frequent use of examples and anecdotes has often been criticized (for example by Evgeny Morozov [7]). Indeed, some examples in "Cognitive Surplus" may fail to convince by themselves, such as when Shirky contrasts the restrictions that advertisers' expectations place on women's magazines (as criticized by Naomi Wolf in her 1991 book The Beauty Myth), with an edgy blog post about casual misogyny from 2009. While, say, Cosmopolitan may not have published such a text in 1991, it is entirely imaginable that it could have run in more specialized feminist magazines (say Spare Rib or off our backs) before the Web, where it could equally well have attracted the "thousands of readers" that Shirky reports for the blog post. Or consider the anecdote that concludes the book: A four-year-old girl watches a DVD, jumps off the couch and searches behind the TV, saying she is "looking for the mouse" – a striking emblem for a young generation that expects media to be participatory. However, snarky Internet commenters have remarked that the programme the child was watching, Dora the Explorer, frequently features imagery borrowed from computer desktops, including mouse-pointer-like arrows and "clicks", which makes her action less striking. But it would be wrong to say that Shirky relies on his anecdotes and examples to prove his points. Their real value lies in illuminating and clarifying ideas, especially for readers like Wikipedians who will be able to test those statements against their own experiences. And especially in the middle chapters, the anecdotal approach is balanced by a multitude of established research results. Finally, Shirky has proven his insight not just in hindsight. Observers of free encyclopedias may recall the clear predictions that Shirky made about Citizendium's model of collaboration at the time of its foundation in 2006, which appear to have been largely vindicated since then (as observed some months ago by researcher Mathieu O'Neil, see Signpost coverage: Role of experts on Wikipedia and Citizendium examined, or in my own talk about Citizendium at Wikimania 2009).


Tools, part 1: References, external links, categories and size

Broadly defined, a tool is any implement designed to make a process simpler, more efficient, or easier, adding extra functionality in the process. On Wikipedia, tools for editing articles run in various ways; some are attached to the standard edit toolbar (which may need to be activated in the Preferences), some to the sidebar, some are bots, and others run on the Toolserver. Some are completely independent of Wikipedia and run on their own, separate sites. However, they all have something in common: they were designed and built by competent coders to make editing easier and faster.

User scripts consist of JavaScript code that runs in your browser, adding functionality to Wikipedia's standard user interface. They range from extra links to extensive editing platforms. Never copy scripts into your skin.js page; instead use importScript("User:Example/awesome script.js") which keeps scripts updated with bug fixes and enhancements. Compatibility varies with skin and browser, with Internet Explorer being problematic. A more extensive script list is at Wikipedia:WikiProject User scripts/Scripts.
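As a minimal sketch of the advice above, a skin.js page might load scripts by reference rather than by pasted copy. The importScript call is the one named in this article and the script titles are taken from the listings on this page; the loadUserScripts helper and the typeof guard are illustrative assumptions, added only so that the snippet also runs outside Wikipedia.

```javascript
// Hypothetical skin.js contents: load each script by title instead of
// pasting its source, so fixes made by the script's author arrive automatically.
var userScripts = [
  "User:Dr pda/editrefs.js",
  "User:Dr pda/prosesize.js"
];

// Illustrative helper (not part of MediaWiki): calls the supplied loader once
// per script title and returns the titles it asked the loader to fetch.
function loadUserScripts(titles, loader) {
  var requested = [];
  for (var i = 0; i < titles.length; i++) {
    loader(titles[i]);
    requested.push(titles[i]);
  }
  return requested;
}

// On Wikipedia, importScript is supplied by the site. Anywhere else the
// function is missing, so loading is skipped rather than raising an error.
if (typeof importScript === "function") {
  loadUserScripts(userScripts, importScript);
}
```

Keeping the titles in one list makes it easy to disable a single script by removing its line when troubleshooting a skin or browser incompatibility.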

RefToolbar 2.0

The RefToolbar 2.0 is one of the most popular Wikipedia tools. The improved second version adds an extra drop-down menu, "Cite," at the end of your toolbar. This allows you to insert any of the four main citation templates: Cite web, Cite news, Cite book, and Cite journal. Just as useful are its "Named references" insertion button and its error check. This saves you from memorizing all of the citation parameters, which can be simply inserted into the template.

- Author : Mr.Z-man

- Placement : Adds an extra drop-down menu to your toolbar.

- Demo : Try it in Wikipedia:Sandbox

- Installation : Two ways: (i) Go to Special:Preferences, click on the tab "Gadgets," check the box for refToolbar, and hit the Save button; or (ii) go to Special:MyPage/skin.js, and insert the text

{{subst:js|User:Mr.Z-man/refToolbar 2.0.js}}

Editrefs

Editrefs searches an article for every instance of <ref> </ref> tags and displays them in convenient individual text boxes for easy editing. This makes checking that reference fields are filled out, making sure they are standardized, and other general reference checks easier and simpler to do.

- Author : Dr pda

- Placement : Adds "Edit references" to the side bar. Note: Only displayed when editing the page.

- Demo : Paste

javascript:importScript("User:Dr_pda/editrefs.js");editRefs()

into your browser's address bar while editing the article of interest.

- Installation : Add

{{subst:js|User:Dr pda/editrefs.js}}

to your Special:MyPage/skin.js page.
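The search step that Editrefs performs can be sketched roughly as follows. This is not the script's actual code: findRefs is a hypothetical name, and a simple regular expression stands in for whatever parsing the real tool does, so unusual markup may be missed.

```javascript
// Collect the contents of every <ref>...</ref> pair in a piece of wikitext.
// Self-closing tags such as <ref name="x" /> carry no content and are skipped.
// Assumption: a regular expression is good enough for illustration; it is
// not a full wikitext parser.
function findRefs(wikitext) {
  var pattern = /<ref(?:\s[^>]*[^>\/])?>([\s\S]*?)<\/ref>/gi;
  var refs = [];
  var match;
  while ((match = pattern.exec(wikitext)) !== null) {
    // index is the position of the opening tag; content is the text
    // between the opening and closing tags.
    refs.push({ index: match.index, content: match[1] });
  }
  return refs;
}
```

Each returned entry could then be shown in its own text box, which is the presentation the tool description above mentions.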

Reflinks

Reflinks adds titles to bare references, taken from the HTML <title> element of the linked Web page. For example,

<ref>http://example.com</ref>

becomes

<ref>[http://example.com Example Web Page]</ref>

The tool is available in two modes: interactive and automatic. In interactive mode, assumptions made upon expanding references are presented for review, while the automatic mode tags titles with <!-- bot generated title --> comments for possible later review by humans. It also supports the Cite web template, filling out other fields besides the title, such as the access date.
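The rewrite described above can be sketched as a small text transformation. This is an illustration under stated assumptions, not Reflinks' own implementation: fillBareRefs is a hypothetical name, the titles object stands in for fetching each page and reading its title element, and the position of the marker comment inside the link is assumed.

```javascript
// Marker comment quoted from the article; where exactly it is placed inside
// the reference is an assumption made for this sketch.
var BOT_MARK = "<!-- bot generated title -->";

// titles: plain object mapping URL -> page title. It replaces the network
// lookup that a real tool would have to perform.
function fillBareRefs(wikitext, titles) {
  return wikitext.replace(/<ref>\s*(https?:\/\/[^\s<\]]+)\s*<\/ref>/g,
    function (whole, url) {
      if (!Object.prototype.hasOwnProperty.call(titles, url)) {
        return whole; // no title known: leave the bare reference untouched
      }
      return "<ref>[" + url + " " + titles[url] + BOT_MARK + "]</ref>";
    });
}
```

References that already carry a bracketed link are not matched, so running the function twice does not tag a title a second time.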

DOI bot

When given an article name and the requesting user's name, this toolserver bot crosschecks Internet databases to add DOIs (character strings identifying online papers) and PMIDs (for PubMed-indexed life science and biomedical papers) you may have missed while preparing an article, and then the Citation bot automatically adds the values to the article.

- Author: Smith609

Checklinks

Checklinks checks all of the outgoing links on a page and ensures that they work. A table is displayed giving the status of each. It is an efficient way to check an article for dead and broken links. WebCite and Wayback Machine integration allows easy fixing from within the tool.

- Author: Dispenser

Hotcat

Hotcat allows the placement of categories on a page from a "hot" list. It adds icons (−) and (±) onto the page's Category bar. Clicking on these icons allows you to quickly delete, edit, or add categories to a page. As you type in a word, the tool displays a list of categories starting with those letters; for example, typing in "Shield" will give you Shield volcanoes, Shield bugs, and Shields. Press the button and the tool will automatically edit in the category for you, saving you from having to search out the category yourself.

- Author : TheDJ

- Placement : Adds text buttons to the category tab at the bottom of the page.

- Demo : Try it in Wikipedia:Sandbox

- Installation : Add

{{subst:js|User:TheDJ/Gadget-HotCat.js}}

to your Special:MyPage/skin.js page, or go to Special:Preferences, check its box under "Gadgets", and click "Save".
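The suggestion behaviour described above (typing "Shield" and being offered matching categories) amounts to a prefix filter. A minimal sketch follows; suggestCategories is a hypothetical name, the category list is supplied by the caller rather than fetched from Wikipedia, and case-insensitive matching is an assumption.

```javascript
// Return the category names that start with what the user has typed so far.
// Assumption: matching ignores case; the real tool's rules may differ.
function suggestCategories(prefix, categories) {
  var needle = prefix.toLowerCase();
  return categories.filter(function (name) {
    return name.toLowerCase().indexOf(needle) === 0;
  });
}

// Example input, using the category names mentioned in the article.
var exampleCategories = ["Shield volcanoes", "Shield bugs", "Shields", "Volcanoes"];
var exampleSuggestions = suggestCategories("Shield", exampleCategories);
```

Here exampleSuggestions holds the three names beginning with "Shield" and omits "Volcanoes".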

Prosesize

Prosesize measures the amount of "readable prose" in an article. Installing the tool adds a "Page size" link to the sidebar; pressing this highlights the readable prose on the page and displays the total size, in both kilobytes (kB) and number of words. This is useful for determining whether a long article should be split or condensed, or whether an article meets the criteria for the Did you know? section of the Main Page.

- Author : Dr pda

- Placement : Adds a "Page size" link to the Wikipedia side bar.

- Demo : Paste

javascript:importScript('User:Dr_pda/prosesize.js');getDocumentSize();

into your browser address bar while viewing the article of interest.

- Installation : Add

{{subst:js|User:Dr pda/prosesize.js}}

to your Special:MyPage/skin.js page.
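To make the two reported figures concrete, the sketch below counts words and bytes for a list of prose paragraphs. It is only an illustration: measureProse is a hypothetical name, and the choices made here (whitespace-separated words, UTF-8 bytes, 1 kB taken as 1000 bytes) are assumptions, since the article does not say how Prosesize itself counts.

```javascript
// Measure "readable prose" given as an array of paragraph strings.
// Assumptions: words are whitespace-separated runs, size is counted in
// UTF-8 bytes, and 1 kB is taken to be 1000 bytes.
function measureProse(paragraphs) {
  var encoder = new TextEncoder();
  var words = 0;
  var bytes = 0;
  paragraphs.forEach(function (paragraph) {
    var trimmed = paragraph.trim();
    if (trimmed !== "") {
      words += trimmed.split(/\s+/).length;
    }
    bytes += encoder.encode(paragraph).length;
  });
  return { words: words, bytes: bytes, kB: bytes / 1000 };
}
```

Counting bytes rather than characters means accented letters weigh more than plain ASCII ones, which is one reason the kilobyte figure and the word count can diverge between articles.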

DYKcheck

DYKcheck is similar to Prosesize, except it has more features, also checking expansion dates, article creation, and other parameters. It can be used by editors reviewing suggestions to help assess articles for Did you know.

- Author : Shubinator

- Placement : Adds a "DYK check" blue link to the Wikipedia side bar.

- Demo : Paste

javascript:importScript('User:Shubinator/DYKcheck.js');dykCheck();

into your browser address bar while viewing the article of interest.

- Installation : Add

{{subst:js|User:Shubinator/DYKcheck.js}}

to your Special:MyPage/skin.js page.


Putting articles in their place: the Uncategorized Task Force

This week, we spent some time with members of the Uncategorized Task Force, a part of WikiProject Categories. Their job on Wikipedia is, not surprisingly, to add categories to uncategorized articles, making these articles easier to find and browse. The task force's members can patrol the uncategorized category to find articles and use tools like HOTCAT to easily add categories. We talked to Pichpich and EdGl.

What motivated you to join the Uncategorized Patrol/Task Force at WikiProject Categories?

- Pichpich: Fun project to participate in. I'm also a big fan of categories which are, imo, essential to the project's ease of use. Lots to do and lots to learn about the tons of article sitting in uncategorized limbo. Often, the uncategorized articles need all sorts of cleanup and quite a few are deletion candidates.

- EdGl: I created this task force because I was interested in categorizing pages that didn't belong to a category. I saw that there was a large backlog of these pages that grew and grew each day, so I thought it would make a good task force of WikiProject Categories. Other Wikipedians were supportive, and quite a few jumped on board. I think a big reason why people join is that it's easy to do, and it's fun to find appropriate categories. Also, like Pichpich said (above), the task touches on other areas of editing such as cleanup and deletion. It's perfect for a jack-of-all-trades WikiGnome!

The project's backlog only includes the months July and August of this year. How has the project been able to tackle so many articles on such a regular basis?

- Pichpich: No idea. Part of it is probably the number of participants, part of it is probably due to a few very active participants. It can be a fairly quick task for easy cases.

- EdGl: Categorizing an article is quick and easy, requiring only a minor edit or two. Tools like HOTCAT are available to make the process even faster. That said, a sizeable group of hardworking, dedicated Wikipedians are to thank for the magnificent progress over the years. This task force started in February 2007 with a very significant backlog. Like with anything, a select few do most of the work, but we do have almost 200 signatures on the participants list. Some leave after awhile, but people are added to the list every month.

What kind of "quality-control" measures does your project have to make sure contributors are adding accurate categories to articles?

- Pichpich: As far as I know, there is none and it's almost impossible to put in place. I've seen complaints in the past and they're usually handled by simply reminding editors that categorization is not a race. If anything, sloppiness can make matters worse because poorly categorized articles are much harder to identify.

- EdGl: Pichpich (above) said it well. Participants are encouraged to take their time and carefully determine accurate, specific categories for a page. If a good category cannot be determined, due to the poor wording of an article or lack of an appropriate category, participants should leave the page alone and let another editor take a look at it. Placing it in Category:Better category needed is also an option.

Do you use AutoWikiBrowser or HOTCAT to help you in categorizing articles? Would you recommend all users install these features? Are there any efforts to get some of these features added to Wikipedia's primary interface?

- Pichpich: Nope.

- EdGl: I use HOTCAT, and it's great. It makes the job quicker and easier, with less typing necessary. I highly recommend it, although there are a couple of downsides. First of all, it's easy to be lazy and not check to see if a category is really the best (i.e., most accurate and specific) one to use. Secondly, adding multiple categories requires multiple edits, which is annoying and makes the article history messier. It's worth it being able to add, change, or remove a category without using the edit window, however.

What tips do you have for editors who have just written a new article but don't know how to categorize it?

- Pichpich: Make sure the article is at least part of one non-trivial category. (By "trivial" I mean things like Category:1885 births which are not used for browsing) When in doubt, just leave the article uncategorized and let the task force handle it eventually.

- EdGl: If you start an article, you should know enough about the subject to know what category it should belong to. After that it's just a matter of finding the corresponding Wikipedia category(ies). Do what we do: look at how similar articles are categorized. If you're still lost, then at least make it easy for us by making your article clear and readable. Describe the subject in as much detail as possible. Give the location of the subject, if applicable. One pet peeve of mine is when an article about a person does not have his/her place of birth or current location, or when an article about a company or organization does not give the location of its headquarters. Include wikilinks to similar articles and proper nouns to make our category search easier.

Anything else you'd like to add?

- EdGl: Thanks to the Signpost for this opportunity, all the participants that have made this an active and successful task force, and for you for reading this article. Oh, and consider joining the UNCAT task force :)

Next week, the Report will be drawing on a largely unknown WikiProject for an interview. Until then feel free to keep a steady eye on the archive.


Bumper crop of admins; Obama featured portal marks our 150th

Administrators

There has been a significant increase in the number of successful RfAs over the past month, which has seen 15 promotions, at least double the typical rate. The Signpost congratulates the five editors who were promoted over the past week.

- Michig (nom), from England, plays guitar and bass guitar when not editing Wikipedia. Not surprisingly, his content interests involve music-related articles—particularly alternative music, reggae and hip hop. His interests also lie in Caribbean- and IT-related articles. Michig has created more than 370 articles, with more than 20,000 edits over his five years with us, three-quarters of them in mainspace. His content work will be combined with administrative tasks such as reviewing and, if appropriate, deleting articles tagged for deletion.

- Mandsford (nom) lives and works in the United States. History is his specialty; many of the articles to which he has contributed—such as 1909 college football season—have had a strong historical element. This interest in history has led to his work on improving year and month articles, with a goal of presenting accurate and sourced information about the events that happened on each day of the 20th century. He counts among his best contributions his efforts to keep new editors here, and says that most of his admin work will lie "in determining the result of Articles for Deletion debates, and in participation in civility matters brought to the noticeboard or to Wikiquette".

- Dana boomer (nom), from the United States, has been at Wikipedia for two and a half years, specialising in equine articles; her leopard-spot Appaloosa gelding apparently calls the tune in real life. Her editing interests also cover history, astronomy, books, endurance sports, the outdoors, and labour unions. She has six FAs, two FLs, a featured portal, and 15 GAs to her name. Best of all, she is one of the two featured article review delegates, making a solid contribution to maintaining high standards among our best articles. She performs valuable work in sorting stubs, recovering red links, and contributing to our slowest deletion process.

- HelloAnnyong (nom), from New York, became active in early 2007 and has made more than 21,000 edits, at least half of them in article space. He continues to make a significant contribution in translating Japanese Wikipedia articles into articles on the English Wikipedia, and is active at WikiProject Japan. He is an expert in the PHP programming language. Annyong is a long-time member and the second-highest contributor to the dispute-settling WikiProject Third Opinion.

- Connormah (nom), from Edmonton in Western Canada, has a set of Good articles and several Did you knows to his name. He is a specialist in images, including signatures, and has been an active participant in the Counter-Vandalism Unit.

150th featured portal

- two featured articles,

- twelve good articles,

- one featured picture (right),

- six related portals,

- six WikiProjects,

- six categories;

- "Did you know" entries,

- "In the news" entries, and

- a vast array of topics.

The page features rotating selected article excerpts and quotations from the president. Users are invited to contribute to this field in the "Things to do" box at the portal's bottom right.

Three other portals were promoted on 1 September:

- The Volcanoes portal links to 24 featured articles, three featured lists, a gallery of 38 featured pictures, and 38 good articles. Among other resources, there are links to the Hawaii and Canada Workgroups. Selected quotes are updated regularly; one example is "Is this volcano active?", said by a tourist in 2000 on Mount Etna, after being reprimanded for camping out at the base of a dangerous volcanic vent.

- The Speculative fiction portal deals with the "What if?" scenarios imagined by dreamers and thinkers worldwide. It brings together a wide array of genres, from webcomics to novels to films and television, and features "Did you know" and "On this day" sections.

- The US roads portal covers a huge and complex topic involving a hierarchy of interstate, numbered and state highways. It is regularly updated with selected pictures and articles, and presents recent news about construction, maintenance and events concerning US roads.

Featured articles

Twelve articles were promoted to featured status:

- Millennium Park (nom), part of the larger Grant Park, known as the "front lawn" of downtown Chicago (nominated by TonyTheTiger).

- Raid at Cabanatuan (nom), the dramatic story of how more than 500 Allied prisoners of war and civilians were rescued from a Japanese camp near Cabanatuan City in the Philippines in January 1945. One POW said during the trek back to American lines "I made the Death March from Bataan, so I can certainly make this one!" (Nehrams2020). (video at right)

- Blue-faced Honeyeater (nom), a generously sized member of the family of Honeyeaters, with a wingspan of 44 cm (17 in). Along with its eponymous blue face, it has distinctive olive, white and black plumage. It is common in northern and eastern Australia, and southern New Guinea (nominated by Casliber). (video at right)

- Liberty Bell (nom) in Philadelphia, Pennsylvania, was made by the London firm of Lester and Pack and is one of the iconic symbols of American independence. It is likely that it was rung to mark the public reading of the American Declaration of Independence on July 8, 1776 (Wehwalt). (picture at right)

- William de Corbeil (nom) (c. 1070–1136), one of the more obscure but interesting archbishops of England. He spent most of his episcopate in a dispute with the archbishop of York over the primacy of Canterbury, but managed to build the tower at Rochester Castle and to supervise the finishing of Canterbury Cathedral (Ealdgyth, with input from Malleus Fatuorum).

- Capitol Loop (nom), which nominator Imzadi1979 says is the "highway that's not really a highway [but] a collection of streets in downtown Lansing, Michigan". The Capitol Loop was controversial from the start, with public arguments over reconstruction at the turn of the century; more recently, there have been issues surrounding its use by speedsters.

- Sherlock Holmes Baffled (nom), the first detective film ever made, although only 30 seconds long. The article includes a modified derivative of the 16 mm print held by the Library of Congress (Bob).

- Sarcoscypha coccinea (nom), the "scarlet elf cup", a small bright-red fungus that is widely distributed over much of the Northern Hemisphere (Sasata).

- The Judd School (nom), a grammar school in Tonbridge, Kent, England (Tom).

- Nikita Filatov (nom), a Russian professional ice hockey winger playing for the US-based Columbus Blue Jackets, and a former top-ranked European skater (Canada Hky).

- Ben Paschal (nom) (1895–1974), an American outfielder who played eight seasons in Major League Baseball from 1915 to 1929, mostly for the New York Yankees, one of the best "pinch hitters" in the game during the period (Secret).

- The Boys from Baghdad High (nom), the powerful and eye-opening British–Iraqi documentary television film in which four boys in their final year of an Iraqi high school were given video cameras and told to record their lives over the course of a year (Matthewedwards).

Choice of the week. The Signpost asked long-time FA nominator and reviewer Malleus Fatuorum to select the best of the week, disregarding his own nomination. "With eleven to choose from it was a difficult decision, but three articles stood out for me, for quite different reasons. My choice is Liberty Bell, a technically excellent and engagingly written account of, well, a bell, but an iconic one. Sherlock Holmes Baffled and The Judd School deserve honourable mentions though; Wikipedia needs more quality articles on early cinema, and a model to follow for high-quality school articles."

Four featured articles were delisted:

- Shotgun house (nom: sourcing)

- Indian Institutes of Technology (nom: prose, sourcing, inconsistent style)

- Lost (TV series) (nom: original research, sourcing and comprehensiveness)

- Gbe languages (nom: prose, sourcing and images)

Featured lists

- List of BC Lions head coaches (nom). Two coaches have led the Lions (a Canadian football team) to a Grey Cup championship, and three have been elected to the Canadian Football Hall of Fame in the builders category (nominated by K. Annoyomous).

- List of 1932 Winter Olympics medal winners (nom). Irving Jaffee and John Shea won the most gold medals (two), all in speed skating (Courcelles and Geraldk).

- 2009 NCAA Men's Basketball All-Americans (nom), 2009 honorary college basketball teams composed of the best amateur players of a specific season for each team position. Blake Griffin, a unanimous selection, won most 2009 college basketball awards (TonyTheTiger).

- Glee (season 1) (nom), the first season of the musical comedy-drama television series about a high school glee club. It received praise for its "plain full-throttle, no-guilty-pleasure-rationalizations-necessary fun" (CycloneGU, Frickative).

- Hugo Award for Best Professional Magazine (nom), a defunct award given for professionally edited magazines related to science fiction or fantasy. Astounding Science-Fiction/Analog Science Fact & Fiction and The Magazine of Fantasy & Science Fiction both received the most awards (eight) (PresN).

- List of accolades received by An Education (nom). Carey Mulligan won many awards for her performance in the lead role, including a BAFTA Award for Best Actress and an Alliance of Women Film Journalists award for Best Depiction Of Nudity, Sexuality, or Seduction (JuneGloom07).

Choice of the week. We asked regular FL nominator Another Believer for his choice of the best: "Of these six newly promoted lists, Glee (season 1) is my favorite. Not only does it illustrate the featured list criteria wonderfully, but it borders qualifying as a featured article because it contains so much relevant information. The fact that I have enjoyed several episodes of the show certainly doesn't hurt either."

One featured list was delisted:

- List of mergers and acquisitions by Red Hat (nom: consensus was that the article was an unnecessary content fork)

Featured pictures

- Meleager et Atalanta (nom), a scan of a 1773 engraving, based on a painting by Giulio Romano, in which Meleager (far left, with the bow) and Atalanta (just to the right, with the boar spear) fight the Calydonian Boar (created by Giulio Romano and Franc[is?] Loving).

- Only the navy can stop this (nom), a 1917 World War I recruitment poster for the US Navy by William Allen Rogers.

- Aerial View of Meteor Crater (nom), the crater of more than a mile (1.6 km) in diameter that is left from a meteorite strike on Earth some 50,000 years ago (created by NASA, upload by Originalwana).

- Tagged monarch butterfly (nom), a monarch butterfly shortly after tagging at the Cape May Bird Observatory. Tracking information is used to study the migration patterns of monarchs, including how far and where they fly (created by Derek Ramsey).

- Attack on the Malakoff (nom), a lithograph by William Simpson, an on-the-spot journalist at the time, depicting a scene from the Battle of Malakoff, one of the defining moments of the Crimean War (1854–55). Reviewer Maedin said "The sweep up the hill to the bravely erected flag and the dramatic sky does it for me."

- The Fields Medal (nom), regarded as one of the top honours a mathematician can receive (created by Stefan Zachow).

- Calocoris affinis (nom), a member of the "true bug" order of insects that share a common arrangement of sucking mouthparts (created by Darius Bauzys). (Choice of the week: picture below)

- Black Tusk 4 (nom), the core of an extinct stratovolcano overlooking Whistler in Western Canada. The unusual formation developed about 1.2 million years ago when the loose cinder around it eroded, leaving only the hard lava core (created by Andysonic777).

- Olive baboons social grooming (nom), an adult Olive Baboon grooms an infant at the Ngorongoro Conservation Area in Tanzania. The original was edited after reviewers' concerns about depth of field (created by Muhammad Mahdi Karim). (picture at right)

- A bad hoss (nom), a 1904 lithograph by Charles Marion Russell, which nominator Adam Cuerden suggested may have had a role in codifying the cowboy image. (picture at right)

- Lager Nordhausen (nom), rows of bodies of dead inmates fill the yard of Lager Nordhausen, a Gestapo concentration camp in Germany. This photo shows fewer than half of the bodies of the several hundred inmates who died of starvation or were shot by the Gestapo (created by James E Myers).

- Bombardment of Barcelona (nom), an aerial shot showing the explosions of Italian air force bombs on Barcelona in 1938 during the Spanish Civil War (created by the Italian air force).

- Tibia insulaechorab (nom), commonly known as the "Arabian Tibia", is a large species of true conch (created by Hans Hillewaert).

Choice of the week. IdLoveOne, a regular reviewer and nominator at featured picture candidates, told The Signpost, "If you're looking at the picture below and thinking "This insect seems familiar. Where have I seen it before?" it might be because it's in the same order of insects as the stink bug! However, it's not such a bug, but a Calocoris affinis, or grass bug. The order it's in is pretty diverse, including the herbivorous pests in the genus Miridae, the insectivorous genus Geocoris and the interesting family Gerridae which is sometimes called the "Jesus bug" because of its ability to move on top of water.

That's your science lesson for the day. Returning to the photo at hand, as a non-entomophobe, I find it to have great eye-appeal, and I'm sure some entomophiles will enjoy using it as a pleasant wallpaper. A triple-green image of a field scabious plant with those bold-edged sepals and the light green and brown bud orbs nested between them, an almost perfectly matching green bug that, in a way only a bug could, nearly defies a human's understanding of gravity before an almost dreamy and halo-like green background. This is my Choice of the week."

Interim desysopping, CU/OS appointments, and more

The Arbitration Committee opened no new cases, leaving one open.

Open case

Climate change (Week 13)

This case resulted from the merging of several Arbitration requests on the same topic into a single case, and the failure of a related request for comment to make headway. Innovations have been introduced for this case, including special rules of conduct that were put in place at the start. However, the handling of the case has been criticized by some participants; for example, although the evidence and workshop pages were closed for an extended period, no proposals were posted on the proposed decision page and participants were prevented from further discussing their case on the case pages (see earlier Signpost coverage).

The proposed decision, drafted by Newyorkbrad, Risker, and Rlevse, sparked a large quantity of unstructured discussion, much of it comprising concerns about the proposed decision (see earlier Signpost coverage). A number of users, including participants and arbitrator Carcharoth, have made the discussion more structured, but the quantity of discussion has continued to increase significantly. Arbitrators made further modifications to the proposed decision this week; drafter Rlevse said that arbitrators are trying to complete the proposed decision before the date of this report. However, Rlevse will not be voting on the decision as he has marked himself as inactive for the case.

Motions

- Date delinking: A motion was passed to permit Lightmouse to use his Lightbot account for a single automation task authorized by the Bot Approvals Group.

- Eastern European mailing list: A motion was passed to amend the restriction that was imposed on Martintg at the conclusion of the case. Martintg is now banned from topics concerning national, cultural, or ethnic disputes within Eastern Europe (previously, this topic ban concerned all Eastern Europe topics).

- Tothwolf: Motions were passed in relation to this case. The enforceable civility restriction that was imposed earlier on JBsupreme (see earlier Signpost coverage) has been extended; it will now expire in March 2011. An enforceable civility restriction was also imposed on Miami33139, which will expire at the same time. An additional restriction was imposed on Miami33139, JBsupreme, and Tothwolf, banning these users from interacting with one another.

Other

Interim desysopping

Several users were not satisfied with this and attempted to seek clarification about the desysop, but arbitrator Carcharoth then collapsed these comments as well and changed the mentions of 'emergency procedures' to 'interim desysop procedures'. A comment by arbitrator Newyorkbrad was left at the bottom of the discussion, which stated: I will request that people refrain from speculating on this matter, nor should any other action being done about the account without Committee approval...this is simply a temporary measure until the matter is cleared up.

There are aspects of this situation that may not be suited for discussion on-wiki. (I say this without criticism of those who have commented, given that the posted announcement created a discussion section; someone should probably have posted here preemptively.) We will appreciate everyone's understanding and consideration in this matter.

CheckUser/Oversight appointments

The Committee endorsed all candidates that were being actively considered in August for appointment to CheckUser and Oversight positions (see earlier Signpost coverage). Earlier in the week, the following permissions were granted to the following users:

- CheckUser permissions

- Frank – successful candidate

- MuZemike – successful candidate

- Tiptoety – successful candidate

- Tnxman307 – successful candidate

- Hersfold – was re-appointed to a CheckUser position (a "[r]outine return of the tools, following a Wiki-break")

- Oversight permissions

- Beeblebrox – successful candidate

- LessHeard vanU – successful candidate

- Phantomsteve – successful candidate

Note: for the reasons reported earlier, MBisanz and Bastique will not be granted Oversight permissions until November 2010 and December 2010, respectively. However, The Signpost notes that these candidates were also successful.

Development transparency, resource loading, GSoC: extension management

Development team to start publishing updates regularly

As the MediaWiki software behind Wikimedia sites grows and matures, it becomes more complicated to manage and to oversee major changes. As such, the Foundation has begun to bring in more paid contractors and employees (though not many for such a large and popular set of websites), each with their own project. The first in a soon-to-be-monthly series of posts outlining these projects was posted this week on the Wikimedia Techblog. The projects that receive some sort of paid support, rather than being left entirely to the community to develop, include the following. This is not a complete list, and the items are numbered only for convenience:

- Virginia Data Center – To set up a world-class primary data center for Wikimedia Foundation properties.

- Media storage – To re-vamp our media storage architecture to accommodate expected increase in media uploads.

- Monitoring – To enhance both ops and public monitoring to (a) notice potential outages sooner, (b) increase transparency to the community, and (c) support the progress-tracking required in the five-year plan.

- Article assessment – To collaboratively assess article quality and incorporate reader ratings on Wikipedia.

- Pending changes – The enwiki trial.

- Liquid threads – An extension that brings threaded discussions capabilities to Wikimedia projects and MediaWiki.

- Upload wizard – An extension for MediaWiki that provides an easier way to upload files to Wikimedia Commons, the media library associated with Wikipedia.

- Add-media wizard – A gadget to facilitate the insertion of media files into wiki pages. Its development is supported by Kaltura.

- Resource loader – To improve the load times for JavaScript and CSS components on any wiki page.

- Central notice – CentralNotice is a banner system used for global messaging across Wikimedia projects.

- Analytics revamp – To incorporate an analytics solution that can grow and answer questions posed by the Wikimedia movement.

- Selenium deployment – To build an automated browser-testing environment for MediaWiki.

- Fraud prevention – To focus on integrating new fraud prevention schemes in our credit-card donation pipeline.

- CiviCRM upgrade – To upgrade our heavily customized CiviCRMv2 install to a mostly stock CiviCRMv3 install.

- Process improvement – To increase transparency and generally organize the Foundation’s engineering efforts more efficiently.

Further information on each project, including its current status, is available in the original post. Updates on each should be more accessible in future.

ResourceLoader coming "soon"

Developer Trevor Parscal announced on Twitter that his work on a new ResourceLoader to improve loading speeds on Wikimedia sites had progressed and could now be expected "soon". He went into more detail on the Wikitech-l mailing list, explaining the main features to expect.

He gave the example of a page that previously required 35 requests (totalling 30kB) now needing just one request of 9.4kB. Gains for users on older hardware or mobile devices might be even greater, he said, since they were previously being served whole scripts they could do nothing with.
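To put the quoted figures in proportion, the short Python sketch below works out the relative savings. Only the four numbers (35 requests, 30kB, one request, 9.4kB) come from the report; the helper name `percent_reduction` is purely illustrative and has nothing to do with the ResourceLoader code itself.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return the percentage by which `after` is smaller than `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0


# Figures quoted in the report; everything else here is illustrative.
requests_before, requests_after = 35, 1
kb_before, kb_after = 30.0, 9.4

request_saving = percent_reduction(requests_before, requests_after)
size_saving = percent_reduction(kb_before, kb_after)

print(f"Requests: {requests_before} -> {requests_after} ({request_saving:.1f}% fewer)")
print(f"Payload: {kb_before}kB -> {kb_after}kB ({size_saving:.1f}% smaller)")
```

On these numbers the example page makes roughly 97% fewer requests and transfers roughly 69% less data.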

Google Summer of Code: Jeroen De Dauw

We continue a series of articles about this year's Google Summer of Code (GSoC) with student Jeroen De Dauw, who describes his project to develop a system for managing the extensions installed on a wiki (read full blog post):

| “ | My initial proposal was to create an awesome extension management platform for MediaWiki that would allow for functionality similar to what you have in the WordPress admin panel ... I started with porting the filesystem abstraction classes from WordPress, which are needed for doing any upgrade or installation operations that include changes to the codebase. (The current MediaWiki installer can do upgrades, but only to the database.) I created a new extension called Deployment, where I put in this code ... but it turned out that doing filesystem upgrades securely is not an easy task, so after finishing the port, I stopped work on this temporarily. I then poked somewhat at the new MediaWiki installer [due to ship with MediaWiki version 1.17], which is a complete rewrite of the current installer. I made some minor improvements there, and split the Installer class, which held core installer functionality, into a more generic Installer class and a CoreInstaller. This allows for creating an ExtensionInstaller that uses the same base code ...

I decided to create the package repository, from which MediaWiki and extensions could get updates and new extensions, from scratch, and started working on another extension, titled Distribution, for this purpose. I merged it together with a rewritten version of the MWReleases extension written by Chad, which already had core update detection functionality. After the Distribution APIs were working decently I started work on the Special pages in Distribution that would serve as the equivalent of the WordPress admin panel. As I had put off the configuration work, and also the file-system manipulation for the initial version, this came down to simply listing currently installed software, update detection and browsing through extensions available in the repository ...

Although some very basic functionality is working, quite some work still needs to be done to get this to the WordPress-awesomeness level. |

” |

In terms of Wikimedia sites, developments in this field could improve the turnaround time for extension deployment, but the significant gains will be for spreading extensions to and from other MediaWiki-based sites.
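For readers curious about the installer split De Dauw describes, the sketch below illustrates the general idea in Python: a generic base class holds the shared steps, and core and extension installers supply only what differs. MediaWiki itself is written in PHP, and apart from the three class names taken from the quote, every method name and log message here is a hypothetical stand-in rather than the real installer's API.

```python
from abc import ABC, abstractmethod
from typing import List


class Installer(ABC):
    """Generic base class holding the steps every installer shares."""

    def run(self) -> List[str]:
        # Shared skeleton: the same checks wrap whatever the subclass installs.
        log = [f"checking environment for {self.target()}"]
        log.extend(self.install_steps())
        log.append(f"finished installing {self.target()}")
        return log

    @abstractmethod
    def target(self) -> str:
        """Name of the thing being installed (hypothetical hook)."""

    @abstractmethod
    def install_steps(self) -> List[str]:
        """Steps specific to this kind of installation (hypothetical hook)."""


class CoreInstaller(Installer):
    def target(self) -> str:
        return "core"

    def install_steps(self) -> List[str]:
        return ["creating core database tables"]


class ExtensionInstaller(Installer):
    def __init__(self, name: str) -> None:
        self.name = name

    def target(self) -> str:
        return f"extension {self.name}"

    def install_steps(self) -> List[str]:
        return [f"registering extension {self.name}"]


if __name__ == "__main__":
    for installer in (CoreInstaller(), ExtensionInstaller("Distribution")):
        print("\n".join(installer.run()))
```

The point of the design is that `run` is written once in the base class, so an extension installer reuses the same surrounding checks as the core installer without duplicating them.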

In brief

Not all fixes may have gone live to WMF sites at the time of writing; some may not be scheduled to go live for many weeks.

- The cause of disappearing images has been identified as a code flaw that allowed an upload to succeed in some places on the servers but not in others when, for extraordinary reasons (such as the recent server transition), some but not all actions were impossible. The upload process will now be consistent in its approach (revision #72021).

- A two-day public "hackathon" to bring WMF tech staff together with volunteer engineers for "an old-fashioned face-to-face coding sprint" is in the pipeline. This meeting will be formally announced in September and will take place in October on the east coast of the US (Wikitech-l mailing list).

- Also in the planning stage is an invitational meeting to discuss plans related to "how data is organized, displayed, captured and analyzed on WMF properties" ("Data Summit"). The invite list is to be kept small, but interested parties should apply to danese at wikimedia dot org for an invite (Wikitech-l mailing list).

- Systems Administrator Domas Mituzas blogged about how the Wikipedia database system compares with those of other websites, and the upgrade from MySQL versions 4.0 to 5.1.

- The last of the Wikimedia Foundation wikis have been switched over to the Vector skin. At the same time, a new "global opt-out" was enabled, allowing users to easily switch back to Monobook for multiple projects simultaneously.

- MediaWiki developer Yaron Koren published a proposal for Making Wikipedia into a database (cf. Signpost coverage of related proposals). The article expanded on statements by Koren that had been quoted in an article in Technology Review ("Wikipedia to Add Meaning to Its Pages"), and was written for Hatilda Harevi’it ("The Fourth Tilde"), the Signpost's sister publication in the Hebrew Wikipedia, where it appeared in Hebrew translation last week. Although Koren's consultancy company "WikiWorks" specializes in Semantic MediaWiki, he uses a simple CSV data format in the proposal.
