Unbiased information from Ukraine's government?

Ministry of Foreign Affairs of Ukraine starts project

The Ministry of Foreign Affairs of Ukraine launched an "Ambitious Campaign to Enrich Wikipedia with Unbiased Information on Ukraine and the World" on April 22, in cooperation with Wikimedia Ukraine. The initiative is aimed at reducing disinformation about Ukraine and spreading "unbiased facts about the country in various languages on Wikipedia". The campaign is set to begin with an online editing marathon - 'Month of Ukranian diplomacy' in May and will ask Wikipedia editors and Ukrainian diplomats to 'correct' and fill in information gaps about the nation. The ministry plans to publish data that can be incorporated into Wikipedia.

After Раммон posted the original MFA announcement to Jimbo Wales' talk page, users had mixed responses. Guy commented "Good news, well-informed Ukraininans to counter the GRU disinformation campaign", but Carrite considers the campaign tantamount to an "assault on NPOV".

The original announcement included surprising wording such as "mega campaign to saturate Wikipedia with unbiased information" in the title and "Ukrainian diplomats will also help to write Wikipedia's articles". The original also mentioned "Russian aggression against Ukraine".

The next day, following inquiries from The Signpost, the announcement, including its title, was restated. "Ukrainian diplomats will also organize campaigns for writing Wikipedia's articles in languages of different countries to give the international community a better understanding about Ukraine," replacing "help to write" with "organize". The sentence containing "Russian aggression" was left unchanged.

Anton Protsiuk, Project Manager for Wikimedia Ukraine, replied to The Signpost that the chapter "will not directly work with diplomats to edit Wikipedia. But we cannot prohibit Ukrainian diplomats from editing Wikipedia on their own, so we want to explain to them how Wikipedia works, what are the relevant policies, including copyright and NPOV. In other words, we will do everything so Ukrainian diplomats do not push a Ukrainian point-of-view in Wikipedia and understand the nature and rules of Wikipedia."

The chapter will conduct online webinars for the diplomats so that they will understand Wikipedia's rules. The diplomats will then publish information useful to Wikipedia editors on the MFA website, which is now freely licensed.

This is not the Ukrainian government's first such campaign. Notably, KyivNotKiev is a campaign begun on October 2, 2018 to get English-language media to spell the name of its capital "Kyiv", which is transliterated from the Ukrainian language, rather than "Kiev", which is transliterated from the Russian language. As part of a larger "CorrectUA" campaign, the government has seen some success, with several large news organizations changing their usage, including the BBC, the Associated Press, The Wall Street Journal, The Economist, and The New York Times.

Russian reaction to the MFA's initiative, as shown by two reports from the wire service RIA Novosti, has been critical. The first report notes that "relations between Moscow and Kiev deteriorated after the coup in Ukraine, the reunification of the Crimea with Russia and the beginning of the confrontation in the Donbass." The second report speculates that the project will be used to whitewash the history of Ukrainian nationalists who fought against the Soviet Union during World War II. RIA Novosti labeled these nationalists as "active accomplices of the German Nazis."

Access to information during the pandemic

Since the beginning of the 2019–20 coronavirus pandemic, various information-sharing organizations have responded by making some (or all) of their content freely available. JSTOR announced that they were dramatically increasing the content available to 'participating institutions', expanding the free-article limit for registered users from six to one-hundred articles a month and making over 6,000 articles related to the disease free through June 30, 2020. Project MUSE made various resources free to the public (list here), including over 25,000 books and 300 journals.

Hathitrust announced a similar service on April 22, giving member libraries who have disruptions to their services the ability to access materials from Hathitrust that match physical copies the libraries hold. The Internet Archive announced it would would modify its controlled digital lending to lift check-out limits for 1.4 million non-public domain books in its Open Library, becoming a "National Emergency Library" through June 30 or later.

Various authors and writer advocacy groups slammed the Internet Archive, calling its decision "an excuse for piracy". The Authors Guild stated it was "shocked that the IA would use the COVID-19 epidemic as an excuse to push copyright law further out to the edges, and in doing so, harm authors, many of whom are already struggling".[1]

A very big long-term project

There are more than 3,000,000 stub articles on the English Wikipedia. That's right – more than half of enWiki's articles are stubs. The 50,000 Destubbing Challenge is taking the bull by the horns. Encyclopædius helped start the project, and 32 contributors are signed up on the main project page as of April 26. They've destubbed 1,105 articles as of April 21, having started in March with The Great Britain and Ireland Destubathon. The goal is to destub 5,000 articles per year for ten years and ideally move on to a 1 million Destubbing Challenge.

Wikipedia Weekly returns

The Wikipedia Weekly podcast was dormant for several years, but has been revived in the current pandemic as the livestreamed Wikipedia Weekly Network. One of the first episodes was an online, non-religious WikiSeder, adapting the Jewish tradition remembering the plagues and liberation to a celebration of wiki wisdom in the age of the quarantini. This is best enjoyed if you play along and toast the "Four Cups to Free Knowledge" together at home—that will surely prepare you for the song at the end. WWN is now podcasting several times each week, and hopes to release summaries of highlighted episodes in The Signpost as a monthly column.

Brief notes

- Guild of Copy Editors The Guild of Copy Editors (GOCE) is celebrating the tenth anniversary of their signature copy-editing drives this May. The drives focus on reducing the number of pages tagged with {{Copy edit}}, and have succeeded in reducing the backlog from 8,323 pages to 274 as of 19 April.

- Did you know ... that all 11 of the DYK articles appearing on the main page on April 1 received more than 5,000 pageviews, which may be a record?

- The mainpage hooks included President Clinton's hankering for bacon butties, Volkswagen's ketchup lubricated part, Captain Kirk's computer encryption skills, and sexy Pepsi cans.

- Abuse filter manager A vote on whether to create a global abuse filter manager permissions group was closed on 3 April with "clear consensus for creating the group". This comes after an RfC that was closed on 27 January.

- New user-groups: The Affiliations Committee announced the approval of this week's newest Wikimedia movement affiliate, the Wikimedia Community of Kazakh language User Group[2]

References

Coronavirus, again and again

Following a February 9 article by Omer Benjakob, a flood of news articles in March praised Wikipedia's coverage of all things related to coronavirus. This month the flood slowed down, but is showing signs of resuming.

More coronavirus news

- Why Wikipedia Is Immune to Coronavirus in Haaretz by Omer Benjakob following up his February 9 story. With lock-downs around the world and almost everybody with internet access actively browsing, the internet has been stressed with an 'infodemic' of misinformation. Lacking the resources of YouTube, Google, Twitter and Facebook, Wikipedia is nonetheless "having its moment," with 115 million pageviews of coronavirus related articles on the English language encyclopedia this year through April 7. The role "of being the public’s main source of medical and health information" has been thrust upon Wikipedia. The role of WikiProject Medicine and its tough standards is emphasized and how it has been "immunized" by dealing with previous public-health scares like the 2003 SARS and the 2015 Zika outbreaks.

- Wikipedia breaks five-year record with high traffic in pandemic in Dawn provides outstanding straight news coverage of Wikipedia's efforts to keep up with the pandemic, including pageviews, and the articles on misinformation related to the 2019-20 coronavirus pandemic, and the 2020 coronavirus pandemic in Pakistan.

- Why Wikipedia is winning against the coronavirus 'infodemic' The Telegraph interviews the "chief steward of the greatest collection of knowledge in the entire history of human civilisation", Katherine Maher, aka the ED and CEO of the Wikimedia Foundation. Maher makes the point "the committed, meticulous and sometimes eccentric community of volunteer editors" are the actual bosses of the encyclopedia, not her. Using examples from the current pandemic, she explains how Wikipedia works and how the new traffic records stress the site. "It's a good thing that Wikipedia works in practice, because it would never work in theory," she says. "It works because ... people want it to work?" That may be the best explanation we'll ever get.

- She concedes that there is evidence of state-sponsored campaigns on Wikipedia, for example on the Chinese Wikipedia, and that the WMF is watching a few possible cases. A bigger fear, though, is that large areas of the encyclopedia could be captured by ideologically-driven communities.

Rosie

Wikipedia is a world built by and for men. Rosie Stephenson-Goodknight is changing that in The Lily (Washington Post)). You might think you know about Rosiestep but you will learn much more by reading this article, She was born in Gary, Indiana. While growing up in California, she wanted to be an anthropologist, but bowed to her father's wishes and majored in business administration, then became a healthcare administrator. She first edited Wikipedia in 2007, creating an article on the defunct publisher Book League of America. She's created articles on the Kallawaya, Perry River, Donna, and her grandmother. You likely know about her work at Women in Red, reducing Wikipedia's gender gap, and her writing of the article Maria Elise Turner Lauder, which was recognized as the English-language Wikipedia's sixth millionth article, but the beauty of this Lilly article is in the details.

Jew-Tagging

Jew-Tagging @Wikipedia by Edward Kosner in Commentary. Kosner who describes himself as "a proud if non-observant Jew" thought it was intrusive that the Wikipedia article about him described him as being "born to a Jewish family." Neither he, nor his son, could remove the offending text. But when he responded to a Wikipedia solicitation for a donation commenting that he'd "be much more inclined to contribute had Wikipedia made it possible to deal with my problem" - perhaps coincidentally - he received an answer from Coffee. The story gets complicated from here. There are different reasons why an article subject might want to be, or not want to be, identified by their religion or ethnic group. There are different reasons why an editor might want to identify an article subject by their religion or ethnicity. Several editors said on the Jimbo Wales talkpage that they were offended by the implication that a refusal to donate could result in the changing of article content.

In brief

- A small town newspaper gives good advice on determining news reliability: The Kokomo Perspective suggests using the SIFT method. The acronym is straightforward "Stop. Investigate the source. Find better coverage. Trace back to origins." Under "Investigate the source" they note that "nearly every English language publication or media website has a Wikipedia site, which will summarize it." For most reliable sources, and some unreliable sources, this is correct.

- The many languages missing from the internet in BBC Future: "There are nearly 7,000 languages and dialects in the world, yet only 7% are reflected in published online material." The dominance of English on the internet, including the dominance of the English Wikipedia, is part of the problem. But Wikipedia and Wikipedians are working to ameliorate the problem as well. Miguel Ángel Oxlaj Kumez is working to create a Kaqchikel Mayan version of Wikipedia. Lingua Libre is a WMF-funded oral language archive run by Wikimedia France.

- How are translations between English and Arabic helping to tackle misinformation? on Euronews: Arabic is the fourth most commonly spoken language among internet users, but only 1% of internet content is in Arabic, according to Euronews. University students in Mosul, supported by Ideas Beyond Borders, are doing something about it. Many of them lived through the 3-year occupation of Mosul by Islamic State. They've translated 11,000 articles, about ten million words, to the Arabic Wikipedia.

- Wikidata founder floats idea for balanced multilingual Wikipedia: Reviews a proposal by Denny Vrandečić. See In focus.

- Penn Museum faculty, students create Wikipedia pages about women to boost representation: Despite the demands of social distancing, the Penn Museum held an editathon on March 26. Using Zoom, they created 3 articles: Deborah A. Thomas, Sophia Wells Royce Williams, and Mary Virginia Harris, and edited 20 other articles.

- A Matter of Facts: Thomas Leitch on Wikipedia: a refreshingly direct podcast from Delaware First Media with an interview of the author of Wikipedia U.

- An Ambitious Campaign To Enrich Wikipedia with Unbiased Information: From Ukraine's Ministry of Foreign Affairs. See News and notes.

- Wikipedia evidence for/against coronavirus lab release theory?: Saying there is no official list of such facilities, BBC News used Wikipedia's list of biosafety level 4 labs in covering a fringe theory.

- NoFap struggles against Wikipedia, accuses editors of bias in Reclaim the Net: NoFap, a self-help website and community forum aimed at curbing pornography viewing, has taken offense to the way it is described on Wikipedia. NoFap feels that "activist" editors and porn industry personnel have distorted the relevant article to give an inaccurate representation of its purpose.

Redesigning Wikipedia, bit by bit

Redesigning the left sidebar

Wikipedia’s design has changed very little in the last ten years.[1][2][3] For example, the current Vector skin was introduced in 2010 (although some changes are currently being planned—more on that below), the Main Page has had basically the same layout since 2006, and that sidebar on the left-hand side of the page with all the links is almost as old. A recent WMF-funded report concluded in part that the sidebar was one of the most confusing parts of Wikipedia's design for casual readers:

Readers were unable to understand the purpose of the Menu on the left hand side of the site, noting in particular that they did not understand the items in the menu (e.g. Related changes, Special Pages). They felt that it was not relevant for them.

Editors have tried to improve the sidebar over the last decade. A 2013 request for comments (RfC) on the sidebar noted that:

- Even compared to other pieces of site-wide navigation, the sidebar is an extremely important navigation tool. With the vast majority of readers and editors using a skin (Vector or Monobook) with the sidebar placed on the left, it is in a natural position of importance considering English speakers tend to scan left to right.

- The sidebar is currently cluttered. On the Main Page, English Wikipedia readers see 22 linksin 2020, it's 21, not including language linksor "In other projects" links. Basic usability principles tell us that more choices increases the amount of time users have to spend understanding navigation (see Hick's law), and that simplicity and clarity are worthwhile goals. The most recent design of the homepage of Google.com, famous for its simplicity, has half the number of links, for comparison. While removing some semi-redundant links (like Contents or Featured contents) would be preferable, if we're going to have this many links it means prioritization is key, leading to the next point...

- The sidebar has poor prioritization. Users read top to bottom, and it is not unfair to say that the vertical order of the links should reflect some basic priority. However, currently, this prioritization is sloppily done. Even if we assume all the current links are important and should stay, the order needs work.

- The names for some links are overly verbose or unclear. Brevity is the soul of wit, and of good Web usability. We should not use two or three words where one will do.

The RfC proposed a new design for the sidebar which featured several collapsible sections. Ultimately, there was no consensus to make the change. After an incubation period at the Village Pump Idea Lab, the RfC was formally introduced (and advertised) this month. This year's RfC page has a different format: rather than one proposal to completely change the sidebar, many smaller proposals have been made to add or remove links. There are too many proposals to discuss each one, but here's the table of contents:

1 Background

2 Reorderings

2.1 Reorder the links in the left sidebar to create a new "contribute" section

2.2 Move Wikidata to "In other projects"

2.3 Move "In other projects" under "Print/export"

2.4 Separate "Page tools" and "User tools"

2.5 Move "Print/export" above "Tools"

3 Renamings

3.1 Donate to Wikipedia → Donate

3.2 Wikipedia store → Merchandise

3.3 About Wikipedia → About

3.4 Contact page → Contact

3.5 Main page → Main Page

3.6 Logs → Logged actions

3.7 Languages → In other languages

3.8 User rights management → Manage user rights

3.9 Tools section → ???

3.10 Print/export → Export

3.11 Mute preferences → Mute this user

3.12 Printable version → Print

3.13 Download as PDF → Save as PDF

4 Additions

4.1 An introduction to contributing page

4.2 An FAQ page

4.3 A dashboard

4.4 Logs

4.5 Deleted contributions

4.6 Search page

5 Removals

5.1 Featured content

5.2 Upload file

5.3 Permanent link

5.4 Wikipedia store

5.5 Print/export (both "Download as PDF" and "Printable version")

5.6 Random Article

5.7 Recent changes

6 Autocollapsing

6.1 "Tools" section

7 Changing tooltips

7.1 Comprehensive overview

7.2 Featured content tooltip

7.3 Current events tooltip

7.4 Random article tooltip

7.5 Donate to Wikipedia tooltip

7.6 Wikipedia store tooltip

7.7 About Wikipedia tooltip

7.8 Community portal tooltip

7.9 Recent changes tooltip

7.10 Contact page tooltip

7.11 Upload file tooltip

7.12 Special pages tooltip

7.13 Permanent link tooltip

7.14 Page information tooltip

7.15 Wikidata item tooltip

7.16 Logs tooltip

7.17 View user groups tooltip

7.18 Mute preferences tooltip

7.19 Download as PDF tooltip

8 A Customizable Sidebar (or an Advanced Mode)

9 A note on power users, usability, and systemic bias

10 Technical underpinnings

10.1 Sidebar settings page

10.2 Tools

11 Comparison to other projects and languages

While the English Wikipedia community works to make the sidebar better for readers and editors, the Wikimedia Foundation (WMF) has been proceeding with its own plans to update the Vector skin. Current plans concern two proposed changes that would be incorporated into the standard Vector skin: moving the language selector to the top right of the page, and collapsing the sidebar by default. The WMF is currently accepting feedback on the changes here. —P

April Fools' Day: have we gone too far with the pranks?

This year's April Fools' Day festivities were some of the more chaotic ones in recent memory. Articles for Deletion had a record 93 nominations (not counting some deleted coronavirus-related ones), which was about double the amount of serious nominations that day. Editing the page meant to document all the pranks was near-impossible due to edit conflicts caused by 1) yet another edit war over the title of the "other pranks"/"general tomfoolery", and 2) outright vandalism of the page (typical example here), though this one became less of a problem after the page was semi-protected. By the end of the day, it was a foregone conclusion that there would be an RfC to bring about some changes to how Wikipedia celebrates April 1. (Similar RfCs took place in 2013 and 2016.) Notable proposals on the RfC page include:

- Requiring the customary joke "did you know..." entries to be labelled as jokes)

- Clarifying that vandalism of the year's prank log page is prohibited

- Banning joke deletion nominations

—P

In brief

- On Wikipedia talk:Non-free content, eliminating or restricting vector graphics has been proposed as a necessary step to comply with the fair-use requirement of minimal infringement of the owner's rights, which in Wikipedia's compliance means low-resolution graphics. This is technically impossible with vector graphics, as they are inherently resolution independent. —B

- On Wikipedia talk:Manual of Style/Accessibility: Should all tables be required to have captions? This change was proposed with the goal of making Wikipedia more accessible to blind readers who use screen readers, though some users see making this a bright-line rule to be overkill. —P

- On current events: discussion at WikiProject COVID-19 (the subject of last issue's WikiProject report) that is contemplating removal of the current-event template {{Current}} at the top of some articles. —B

- On the policy village pump: Should a "Main Page Editor" usergroup be created with the

editprotectedandprotect(at least untileditcascadeprotectedgets implemented) rights? This would allow non-admins to edit the main page in order to fix errors. —P - Also on the policy village pump: Proposed updates to Wikipedia's policy on bureaucrat activity, to remove the provision that allows bureaucrats to keep their tools if they express willingness to participate in bureaucrat activities but don't actually use the tools. —P

Follow-ups

- The proposal to remove the section on job titles from MOS:BIOGRAPHY closed with a result of no consensus. —P

- The other three discussions mentioned in the last issue are all still open. —P

- ^ https://www.fastcompany.com/3028615/the-beautiful-wikipedia-design-that-almost-was

- ^ https://thenextweb.com/dd/2017/06/29/why-are-some-of-the-ugliest-sites-on-the-web-also-the-most-popular/

- ^ https://medium.com/freely-sharing-the-sum-of-all-knowledge/why-does-wikipedia-in-2018-have-a-mobile-site-and-a-desktop-site-bc2982f30fb9

Featured content returns

Featured Content is back, and here to stay! The editors of The Signpost regret that the past year and the first few months of this year were not covered. Please review the archives of Goings-on or various other logs to see that content.

Featured articles

Seven featured articles were promoted this month.

- Interstate 82 (nominated by SounderBruce) is an Interstate Highway in the Pacific Northwest region of the United States that travels through parts of Washington and Oregon. It runs 144 miles (232 km) from its northwestern terminus at I-90 in Ellensburg, Washington, to its southeastern terminus at I-84 in Hermiston, Oregon. The highway passes through Yakima and the Tri-Cities, and is also part of the link between Seattle and Boise, Idaho. I-82 travels concurrently with U.S. Route 97 (US 97) between Ellensburg and Union Gap; US 12 from Yakima to the Tri-Cities; and US 395 from Kennewick and Umatilla, Oregon.

- Ghostbusters II (nominated by Darkwarriorblake ) is a 1989 American supernatural comedy film directed by Ivan Reitman and written by Dan Aykroyd and Harold Ramis. It stars Bill Murray, Aykroyd, Sigourney Weaver, Ramis, Rick Moranis, Ernie Hudson, and Annie Potts. It is the sequel to the 1984 film Ghostbusters and the second film in the Ghostbusters franchise. Set five years after the events of the first film, the Ghostbusters have been sued and put out of business after the destruction caused during their battle with the demi-god Gozer. When a new paranormal threat emerges, the Ghostbusters re-form to combat it and save the world. The film received generally negative reviews and was considered a critical and commercial failure by Columbia Pictures.

- The 1978 FA Cup Final (nominated by The Rambling Man) was an association football match between Arsenal and Ipswich Town on 6 May 1978 at the old Wembley Stadium, London. It was the final match of the 1977–78 FA Cup, the 97th season of the world's oldest football knockout competition, the FA Cup. The game was watched by a stadium crowd of around 100,000 and was broadcast live on television and radio. Ipswich dominated the match, hitting the woodwork three times (including twice from John Wark) before Roger Osborne scored the only goal of the game with a left-foot shot, as Ipswich triumphed 1–0. It remains Ipswich Town's only FA Cup triumph to date and they have not appeared in the final since. Arsenal returned to Wembley the following season and won the 1979 FA Cup Final over Manchester United.

- Cyclone Chapala (nominated by Hurricanehink) was a powerful tropical cyclone that caused moderate damage in Somalia and Yemen during November 2015. Chapala was the third named storm of the 2015 North Indian Ocean cyclone season. It developed as a depression on 28 October off western India, and strengthened a day later into a cyclonic storm. Chapala then rapidly intensified amid favorable conditions. On 30 October, the India Meteorological Department (IMD) estimated that Chapala attained peak three-minute sustained winds of 215 km/h (130 mph). The American-based Joint Typhoon Warning Center (JTWC) estimated sustained winds of 240 km/h (150 mph), making Chapala among the strongest cyclones on record in the Arabian Sea. After peak intensity, Chapala skirted the Yemeni island of Socotra on 1 November, becoming the first hurricane-force storm there since 1922. High winds and heavy rainfall resulted in an island-wide power outage, and severe damage was compounded by Cyclone Megh, which struck Yemen a week later. It caused eight fatalities and over $100 million in damage.

- George Washington and slavery (nominated by Factotem) covers George Washington's slave ownership and views about slavery in the colonial United States. Washington was a Founding Father of the United States and slaveowner who became uneasy with the institution of slavery but provided for the emancipation of his slaves only after his death. Slavery was ingrained in the economic and social fabric of colonial Virginia, and Washington inherited his first ten slaves at the age of eleven on the death of his father in 1743. In adulthood his personal slaveholding grew through inheritance, purchase and natural increase. In 1759, he gained control of dower slaves belonging to the Custis estate on his marriage to Martha Dandridge Custis. Washington's early attitudes to slavery reflected the prevailing Virginia planter views of the day, and so he demonstrated no moral qualms about the institution. He became skeptical about the economic efficacy of slavery before the American Revolutionary War, and expressed support in private for abolition by a gradual legislative process after the war. Washington remained dependent on slave labor, and by the time of his death in 1799 there were 317 slaves at his Mount Vernon estate, 124 owned by Washington and the remainder managed by him as his own property but belonging to other people.

- The Loveday of 1458 (nominated by Serial Number 54129) was a ritualistic reconciliation between warring factions of the English nobility that took place at St Paul's Cathedral on 25 March 1458. Following the outbreak of the Wars of the Roses in 1455, it was the culmination of lengthy negotiations initiated by King Henry VI to resolve the lords' rivalries. English politics had become increasingly factional during his reign, and was exacerbated in 1453 when he became catatonic. This effectively left the government leaderless, and eventually the King's cousin, and at the time heir to the throne, Richard, Duke of York, was appointed Protector during the King's illness. Alongside York were his allies from the politically and militarily powerful Neville family, led by Richard, Earl of Salisbury, and his eldest son, Richard, Earl of Warwick. When the King returned to health a year later, the protectorship ended but partisanship within the government did not.

- Marcian (nominated by Iazyges) was the Eastern Roman Emperor from 450 to 457. Marcian was elected and inaugurated on 25 August 450, and as emperor reversed many of the actions of Theodosius II in the Eastern Roman Empire's relationship with the Huns under Attila and in religious matters. After Attila's death in 453, Marcian took advantage of the resulting fragmentation of the Hunnic confederation by settling Germanic tribes within Roman lands as foederati ("federates" providing military service in exchange for benefits). Marcian also convened the Council of Chalcedon, which declared that Jesus had two "natures": divine and human. Marcian died on 26 January 457, leaving the Eastern Roman Empire with a treasury surplus of seven million solidi coins.

Featured lists

Eighteen featured lists were promoted this month:

- Between 1975 and 1989 the England cricket team represented England, Scotland and Wales in Test cricket. During that time England played 152 Test matches (nominated by Harrias), resulting in 40 victories, 62 draws and 50 defeats.

- Monmouthshire is a county and principal area of Wales. In the United Kingdom the term "listed building" refers to a building or structure officially designated as of special architectural, historical or cultural significance.There are 244 Grade II* listed buildings (nominated by KJP1) in Monmouthshire. They include seventy-two houses, forty-two churches, thirty-five farmhouses, twenty-one commercial premises, eight bridges, seven barns, six garden structures, four sets of walls, railings or gates, three gatehouses, two chapels, two community centres, two dovecotes, an almshouse, an aqueduct, a castle, a courthouse, a cross, a dairy, a folly, a masonic lodge, a mill, a prison, a former slaughterhouse, a statue and a theatre.

- Cardiff City Football Club is a Welsh professional association football club based in Cardiff, Wales. The list of Cardiff City F.C. records and statistics (nominated by Kosack) encompasses the major honours won by Cardiff City, records set by the club, its managers and players, and details of its performance in European competition.

- During the Holocaust, most of Slovakia's Jewish population was deported (nominated by Buidhe) in two waves—1942 and 1944–1945. In 1942, there were two destinations: 18,746 Jews were deported in eighteen transports to Auschwitz concentration camp and another 39,000–40,000 were deported in thirty-eight transports to Majdanek and Sobibór extermination camps and various ghettos in the Lublin district of the General Governorate. A total of 57,628 people were deported; only a few hundred returned. In 1944 and 1945, 13,500 Jews were deported to Auschwitz (8,000 deportees), with smaller numbers sent to the Sachsenhausen, Ravensbrück, Bergen-Belsen, and Theresienstadt concentration camps. Altogether, these deportations resulted in the deaths of around 67,000 of the 89,000 Jews living in Slovakia.

- Hot Country Songs is a chart that ranks the top-performing country music songs in the United States, published by Billboard magazine. In 1976, 37 different singles topped the chart (nominated by ChrisTheDude), which at the time was published under the title Hot Country Singles, in 52 issues of the magazine. Chart placings were based on playlists submitted by country music radio stations and sales reports submitted by stores.

- In baseball, a home run is credited to a batter when he hits a fair ball and reaches home safely on the same play, without the benefit of an error. Fifty-eight different players (nominated by Bloom6132) have hit two home runs in an inning of a Major League Baseball (MLB) game to date, the most recent being Edwin Encarnación of the Seattle Mariners on April 8, 2019. Regarded as a notable achievement, five players have accomplished the feat more than once in their career; no player has ever hit more than two home runs in an inning.

- Red Dead Redemption 2, a Western-themed action-adventure game developed and published by Rockstar Games, follows the story of various characters (nominated by Rhain), namely Arthur Morgan, an outlaw and member of the Van der Linde gang. Led by Dutch van der Linde, the gang attempts to survive against government forces and rival gangs while dealing with the decline of the Wild West. Several characters reprise their roles from the 2010 game Red Dead Redemption, to which Red Dead Redemption 2 is a prequel.

- BTS are a seven-member South Korean boy band formed under record label Big Hit Entertainment, composed of three rappers (RM, Suga, and J-Hope) and four vocalists (Jin, Jimin, V, and Jungkook). The group has won 264 awards out of 395 nominations (nominated by DanielleTH).

- Emilia Clarke is an English actress. She has won 12 awards out of 47 nominations (nominated by LuK3).

- Local nature reserves (LNRs) in England are designated by local authorities under Section 21 of the National Parks and Access to the Countryside Act 1949. As of January 2020, there are forty-one LNRs (nominated by Dudley Miles) in Berkshire. Five are also Sites of Special Scientific Interest, two are Special Areas of Conservation and four are managed by the Berkshire, Buckinghamshire and Oxfordshire Wildlife Trust.

- Newfoundland and Labrador is the ninth-most populous province in Canada with 519,716 residents recorded in the 2016 Canadian Census and is the seventh-largest in land area at 370,514 km2 (143,056 sq mi). Newfoundland and Labrador has 277 municipalities (nominated by Mattximus and Hwy43) including three cities, 269 towns, and five Inuit community governments, which cover only 2.2% of the territory's land mass but are home to 89.6% of its population.

- The Nashville Xpress Minor League Baseball team played two seasons in Nashville, Tennessee, from 1993 to 1994 as the Double-A affiliate of the Minnesota Twins. In those seasons, a total of 60 players (nominated by NatureBoyMD) competed in at least one game for the Xpress. The 1993 roster included a total of 35 players, while 38 played for the team in 1994. There were 13 players who were members of the team in both seasons. Of the 60 all-time Xpress players, 22 also played in at least one game for a Major League Baseball (MLB) team during their careers.

- Jennifer Aniston is an American actress, film producer, and businesswoman who made her film debut in the 1987 comic science fiction film Mac and Me in an uncredited role of a dancer in McDonald's. She has since appeared in numerous films and television shows (nominated by CAPTAIN MEDUSA). Her biggest box office successes include the films Bruce Almighty (2003), The Break-Up (2006), Marley & Me (2008), Just Go with It (2011), Horrible Bosses (2011), and We're the Millers (2013), each of which grossed over $200 million in worldwide box office receipts. Some of her most critically acclaimed film roles include Office Space (1999), The Good Girl (2002), Friends with Money (2006), Cake (2014), and Dumplin' (2018). She returned to television in 2019, producing and starring in the Apple TV+ drama series The Morning Show, for which she won another Screen Actors Guild Award.

- The Torrens Trophy (nominated by MWright96) is awarded to an individual or organisation for demonstrating "Outstanding Contribution to the Cause or Technical Excellence of Safe and Skilful Motorcycling in the UK". The RAC established the trophy to recognise "outstanding contributions to motor cycle safety" before extending its purpose to include individuals considered to be "the finest motor cyclists". The inaugural recipient was Frederick Lovegrove in 1979. Since its establishment, the award has not been presented during four periods in history: from 1982 to 1988, between 1990 to 1997, from 1999 to 2007 and between 2009 and 2012. As of 2019, the accolade has been won fourteen times: Superbike riders have won it four times, with road motorbike racers, Grand Prix motorcycle riders and motorcycle speedway competitors honoured once. The 2019 winner was Tai Woffinden, the three-time Speedway world champion.

Featured pictures

-

(created by NASA; nominated by User:Bammesk)

Two difficult cases

Two very difficult cases were heard in April, with one ongoing. Jytdog, a productive and controversial editor, was indefinitely banned on April 13. The Medicine case opened on April 7. Two frequent Signpost contributors are involved. No Signpost staffer is available to write this article who considers themselves to be unbiased about these cases. This writer has strong, and mixed, feelings on both cases, and will keep the descriptions short.

Jytdog

Jytdog was indefinitely banned on April 13 by a vote of 11 arbitrators to 1. He may appeal the ban in 12 months. The case was originally started two years ago and closed soon after when Jytdog resigned as an editor, stating that he would never return. After he expressed a desire to return as an editor in March, the case was reopened.

Much of the case revolved around Jytdog's efforts to fight paid or conflict-of-interest editing. A key aspect of the case involved his uninvited telephone contact with another editor. Strong evidence was presented that Jytdog repeatedly badgered other editors.

Medicine

A long term dispute at WikiProject Medicine that came to a head over drug pricing information in articles was taken to ArbCom and the case opened April 7.

On the evidence page RexxS states "the vast majority of parties to this case are respected, long-term editors who have made considerable contributions to the field of medicine on Wikipedia over many years. It should be taken as a given that every single party's foremost aim is to improve Wikipedia, although there exists a wide range of opinion on how that is best achieved."

The parties include Doc James, a long-time contributor to The Signpost and a member of the WMF Board of Trustees. Bluerasberry, another long-time contributor to The Signpost joins several other editors in favoring the inclusion of drug prices in medical article. Sandy Georgia and several other medical editors are concerned about multiple long-term trends affecting the Medicine Project.

At the evidence page, editors are roughly split, in the type of evidence they have presented, in whether it favors one side or the other in the dispute. In the Workshop phase, which ends May 5, many of the proposals appear to favor letting the editors solve the content dispute on their own. A proposed decision is expected by May 12.

Disease the Rhythm of the Night

- This traffic report is adapted from the Top 25 Report, prepared with commentary by Igordebraga, Rebestalic, (March 22 to April 18) Soulbust (March 22 to April 4), A lad insane (April 5 to 11), and Berrely (April 12 to 18)

(data provided by the provisional Top 1000)

You could put a mask upon my face (March 22 to 28, 2020)

| Rank | Article | Class | Views | Image | Notes/about |

|---|---|---|---|---|---|

| 1 | 2019–20 coronavirus pandemic | 7,865,205 |  |

The little spiky sphere that you see on the left is a computer illustration of something that is measured in millionths of a centimetre. That's not a big deal. The illustrated virus is tiny. But that little spiky sphere has sickened nearly nine hundred thousand people and killed more than forty thousand. It has sent stocks reeling, swept shop-shelves empty through panic-buying and locked down whole countries and their economies. The severe acute respiratory syndrome coronavirus 2 causes coronavirus disease 2019 (COVID-19), which is responsible for the 2019–20 coronavirus pandemic and for all the illness, death and other consequences. | |

| 2 | 2020 coronavirus pandemic in India | 3,327,313 |  |

India is becoming increasingly affected by the global coronavirus pandemic, numbering 1,998 cases at the time of writing. The country, which is home to over a sixth of the world population, is currently under a lockdown that Prime Minister Narendra Modi declared on the 24th March. 41 have sadly passed away from infection and 144 have recovered. | |

| 3 | Coronavirus | 2,692,771 |  |

For a while, the Latin word for crown could mean a star's surrounding fire, a Mexican beer, a shower brand, the group that sung "The Rhythm of the Night"... but lately if you say "Corona", we know it's the virus type which has an instance spreading itself while locking us at home. | |

| 4 | Spanish flu | 2,403,781 |  |

The sheer scale of the current pandemic has earned it comparisons to the influenza-fuelled Spanish Flu, which lasted from January 1918 to December 1920. The number of victims of the pandemic, as estimated in 2006, was 500 million—a quarter of the population of the entire world at the time, and a little higher than the combined populations of the US and Russia today. | |

| 5 | 2020 coronavirus pandemic in the United States | 2,178,259 |  |

For some variety, let's borrow from Reddit learning The Offspring might be to blame if Chile sees a sudden rise in COVID-19 cases:

Like the latest fashion. | |

| 6 | 2020 coronavirus pandemic in Italy | 2,173,809 |  | ||

| 7 | Coronavirus disease 2019 | 2,153,131 |  | ||

| 8 | 2019–20 coronavirus pandemic by country and territory | 2,091,311 |  | ||

| 9 | Madam C. J. Walker | 1,971,771 |  |

Madam C. J. Walker was the first female self-made millionaire in the United States. Born in the Deep South that was once home to many slaves, Walker eventually launched the Madam C. J. Walker Manufacturing Company and propelled herself into the world of beauty and cosmetics. She died in former Union territory in Irvington, New York. Walker has recently been the subject of Netflix miniseries Self Made; her role is acted out by one of my favourite actors, Octavia Spencer. That's her on the left. | |

| 10 | Orthohantavirus | 1,762,835 |  |

As one guy dies on a bus, everyone goes crazy. |

Oh the street is now an empty place (March 29 to April 4, 2020)

| Rank | Article | Class | Views | Image | Notes/about |

|---|---|---|---|---|---|

| 1 | 2019–20 coronavirus pandemic | 5,593,988 |

|

It's terrifying how something like the Severe acute respiratory syndrome coronavirus 2 can damage the very biological functions of a person. Want it more terrifying? Okay, let's make it Terrifyingly Terrifying to a Terrifying Extent of Terrifyingly Terrific Proportions. To do that, make that same coronavirus damage the very biological functions of more than one million people, immobilise whole countries, send top-grade economies spinning, make two nurses in Italy commit suicide from stress, make people repeatedly clear whole shelves of supermarkets from panic buying, make the manufacturer of Corona beer lose 165 million US dollars, and kill the equivalent of all – that's all – the players in the entire NFL, NBA, MLB, NHL, La Liga and Bundesliga...

...fifteen times. | |

| 2 | Joe Exotic | 4,076,724[a] |  |

'I am broke as shit, I have a judgement against me from some bitch down here in Florida, and this is all paid for by the Committee of Joe Exotic Speaks for America.'

Joseph Allen Maldonado-Passage, better known as Joe Exotic, is a former zoo operator and criminal. Exotic, who refuses to wear a suit, was convicted on 19 criminal charges in 2019, split across things such as animal abuse and hiring to kill (specifically, Carole Baskin, owner of a big cat rescue facility and the above mentioned "bitch" who he was forced to pay $1 million in damages). Aside from abusing animals and hiring hitmen, events of the (self-claimed) best tiger breeder in the US include being raped at the age of five (okay, that's really sad regardless of criminal status), contending for the 2016 United States presidential election, trying out at the 2018 Oklahoma gubernatorial election and featuring in Netflix's documentary Tiger King: Murder, Mayhem and Madness, which takes the third spot in this list. | |

| 3 | Tiger King: Murder, Mayhem and Madness | 2,460,831 | |||

| 4 | Carole Baskin | 2,341,891 | |||

| 5 | 2019–20 coronavirus pandemic by country and territory | 2,306,585 |  |

Currently, India has a bit above 5,000 cases of COVID-19, the most affected state being Maharashtra, home of Mumbai (or, if you like, Bombay) among other cities. Meanwhile, the land of the Star-Spangled Banner has just passed a whopping four hundred thousand cases, with daily case increases consistently reaching above 20,000. New York is the hardest-hit state—and about that, see Andrew Cuomo (#11 on this list) for more details. | |

| 6 | 2020 coronavirus pandemic in India | 2,149,064 |  | ||

| 7 | 2020 coronavirus pandemic in the United States | 1,906,786 |  | ||

| 8 | Spanish flu | 1,890,887 |  |

Unfortunately, we don't know that much about the real stats of the Spanish Flu pandemic, as results were censored by a countries such as Germany, the UK (at the time including Ireland), France and the United States, who were in the war effort. What we do know, though, is that confirmed cases could have rocketed to half a billion, with anywhere from 17 to 50 million deaths. A note that some of the devastation of the Spanish Flu was due to resulting bacterial infections caused by things such as overcrowding and poor hygiene. | |

| 9 | Coronavirus disease 2019 | 1,454,002 |  |

Okay, some clarification: Coronavirus disease 2019 is the thing that's also called COVID-19. It's the illness that's taking world media by storm. The illness itself is caused by the immune system's response to the Severe acute respiratory syndrome coronavirus 2, also known as SARS-CoV2. The 2019-20 coronavirus pandemic is the ongoing outbreak of COVID-19 which is caused by SARS-CoV2. SARS-CoV2 is one of many viruses that fall under the coronavirus category – a coronavirus is simply a virus that has little spikes called peplomers on it. There are many coronaviruses, not just a few. | |

| 10 | Coronavirus | 1,338,199 |  |

- ^ combined page views total for "Joe Exotic" (2,739,317) and "Joseph Maldonado-Passage" (1,337,407), the latter of which is now a redirect due to a page move during the week (on March 29).

Quarantine reached month two, hope it's not here to stay (April 5 to 11, 2020)

| Rank | Article | Class | Views | Image | Notes/about |

|---|---|---|---|---|---|

| 1 | 2019–20 coronavirus pandemic | 4,126,190 |

|

This week, the current pandemic's cases exceeded the total number of recorded cases in the 2009 swine flu pandemic, which is not in this week's list (after strong showings for the quite a few of the preceding weeks). Indeed, worldwide cases are about to blast through two million. For me, the scale of this thing is becoming too much to comprehend. How in the world do you visualise two million of anything? | |

| 2 | Joe Exotic | 2,264,454 |  |

In all honesty, all this buzz about Joe Exotic (caused by the documentary Tiger King: Murder, Mayhem and Madness) is making Joe Exotic not at all that exotic. | |

| 3 | 2019–20 coronavirus pandemic by country and territory | 2,199,766 |  |

According to the Worldometers coronavirus tracker, COVID-19 has smeared itself on about 210 unique countries and territories. Now, a clarifier that 'Territories' is used amongst 'Countries' as places such as Taiwan that aren't fully recognised as an independent country (sorry for the politics). | |

| 4 | Boris Johnson | 2,002,392 |  |

COVID-19 (as you may have noticed) is well-represented on this list. Many articles it brings into public view – such as #s 1, 3, 14, 15, 18, you get the idea) are rather obviously related to this virus. Some, however, require a cursory following of the news to connect, although one must have been living with one of the uncontacted tribes of the Amazon to not be hearing any news these days. Boris Johnson, the Prime Minister of the United Kingdom (and I'll fully admit I'm not entirely sure what his duties are) was diagnosed with COVID-19 several days ago and landed in the intensive care unit for a spell – he has though, fortunately for him, now been released from the hospital. | |

| 5 | WrestleMania 36 | 1,882,062 | 2020 saw WWE's 36th annual WrestleMania, which was held with no live audience (due to #1). This particular Granddaddy of Them All consisted of two parts; the first won by The Undertaker and the second won by Brock Lesnar. | ||

| 6 | Carole Baskin | 1,765,033 |  |

Another person featured on Tiger King, namely the woman our #2 tried to get murdered. | |

| 7 | Money Heist | 1,507,486 |  |

Who doesn't want money?!? And this Spanish show, which became an international sensation once picked up by Netflix, deals with people who decide to take it straight from the source! At least in the first season, don't know what is happening in the fourth which hit the streaming service. | |

| 8 | 2020 coronavirus pandemic in the United States | 1,468,892 |  |

Total COVID-19 cases in the Land of the Star-Spangled Banner are almost at an almighty 600,000. However, the daily increase data offered at Johns Hopkins University's very detailed, map-based tracker shows that the best is coming for the US – a steep curve has been turned, and daily increases are now starting to fall. Best of luck to all Americans and don't forget to stay safe. | |

| 9 | 2020 coronavirus pandemic in India | 1,438,743 |  |

Best of luck to all Indians as well. It's an absolute miracle that India, with its vast population, has managed to keep infection numbers so low compared to its demographics. It has been estimated that without the ongoing lockdown, cases in Bhārat Gaṇarājya could have surged by 35,000. | |

| 10 | John Prine | 1,363,120 |

|

In any normal time, this American country-folk legend would have topped this list upon his death last week at the age of 73. Unfortunately, these are not normal times, as the rest of this list indicates. Prine made last week's list (albeit lower, at #22) upon the news that he had entered the intensive care unit on March 26 with symptoms of COVID-19, and sadly succumbed to the virus on April 7, just months after being selected for a Lifetime Achievement Award. Perhaps I'll have to send him an angel from Montgomery. |

This is the Reebok or the Nike?(April 12 to 18, 2020)

| Rank | Article | Class | Views | Image | Notes/about |

|---|---|---|---|---|---|

| 1 | 2019–20 coronavirus pandemic | 3,255,130 |

|

Believe it or not, it's been about 191/2 weeks (137 days) since the first reported cases of COVID-19 emerged in Wuhan. As of 23 April, there are approx. 2.7 million cases! The number already was and has now become even more frightening. To give you an idea, here is a website showing dots. And that only goes up to 1 million! Sadly, we still haven't been able to flatten the curve, but in a lot of countries, the curve is slowly starting to decline. | |

| 2 | Joe Exotic | 1,285,515 |  |

Tiger King, burning bright, on the flatscreens of the night, as viewers on the docuseries are still numerous enough to boost views on its primary subject, this weirdo who along with operating a big cat private zoo also indulged in rapping, politics and attempted murder. | |

| 3 | Charles Ingram | 1,260,050 |  |

Ingram was already well known for cheating on Who Wants to Be a Millionaire? to win the top prize using a clever series of coughs. There has been a lot of attention on him currently following the release of ITV show Quiz. The show is based on the award winning play of the same name, following Ingram's earlier life. | |

| 4 | 2020 coronavirus pandemic in the United States | 1,200,164 |  |

The US currently has the most coronavirus cases in the world; so the article's high ranking is hardly surprising. One of the worst hit areas is the city of Detroit, with over 8,000 cases. | |

| 5 | Deaths in 2020 | 1,118,629 |  |

I don't wanna be buried In a Pet Sematary I don't want to live my life again! | |

| 6 | 2020 coronavirus pandemic in India | 1,108,561 |

|

Unfortunately for India, the population of Indians who have been infected with SARS-CoV2 is starting to balloon towards the 20K mark – now, that may not seem that much compared to the US's almost 900 thousand, but that's still a lot. Thank goodness for the lockdown that's currently in force – it has been predicted that if the current lockdown never happened, the amount of Indian COVID-19 cases could have been in excess of 30 thousand. | |

| 7 | 2019–20 coronavirus pandemic by country and territory | 1,077,954 |

|

Almost all of the world's countries and territories have experienced some cases of COVID-19 within their borders – it isn't called a pandemic for nothing. In total, over two million people have been infected by SARS-CoV-2. | |

| 8 | Money Heist | 1,071,411 |

|

Money Heist, known in its native Spanish as La Casa de Papel (the House of Paper – i.e. paper money, printed in places like the Royal Spanish Mint to the left), is a Spanish crime drama concerning a Professor and his collaborators. It is the most-watched non-English language series to date and also quite an awarded one, having won a mammoth sixteen awards at time of writing. | |

| 9 | Brian Dennehy | 1,057,388 |

|

Brian Dennehy was an American actor, appearing on stage, on air and in the movies. You might know him as the father of Romeo in Romeo + Juliet as well as a mainstay at the Goodman Theatre in Chicago. In his lifetime of 81 years, he won two Tony Awards (an award for stage acting in the US), an Olivier Award (likewise but in British contexts) and a Golden Globe (film acting). Dennehy passed away of sepsis-induced cardiac arrest. | |

| 10 | Carole Baskin | 999,748 |  |

As you can see, Big Cat Rescue owner Carole Baskin amassed a cool 999 thousand (rounded down of course) views this week. This is because our #2 tried to kill her (the culmination of a feud that included Joe Exotic accusing Baskin of murdering her disappeared husband, and Baskin winning a trademark infringement lawsuit because Exotic decided to copy the branding of her sanctuary in the website of his tiger zoo). |

Exclusions

- These lists exclude the Wikipedia main page, non-article pages (such as redlinks), and anomalous entries (such as DDoS attacks or likely automated views). Since mobile view data became available to the Report in October 2014, we exclude articles that have almost no mobile views (5–6% or less) or almost all mobile views (94–95% or more) because they are very likely to be automated views based on our experience and research of the issue. Please feel free to discuss any removal on the Top 25 Report talk page if you wish.

Roy is doing fine and sending more photos

Everybody has their own story about the coronavirus pandemic. Everybody has somebody that they've been worried about. This story is about photographer Roy Klotz who has uploaded 5,651 photos to Commons over the last eight years. The photos cover sites on the National Register of Historic Places, other historic buildings, and places on his many world travels. Some of my favorite photos taken by Roy follow, in no particular order.

The octogenarian started his fifth around-the-world ocean cruise in early January from Florida. Until recently, the last photos he uploaded were from the Caribbean.

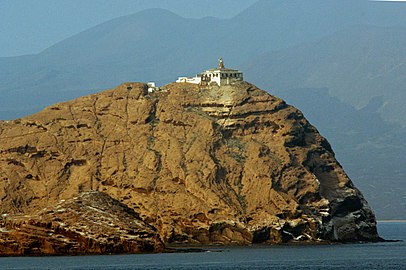

-

Lighthouse in the Hanish Islands, Yemen

-

Jame' Asr Hassanil Bolkiah Mosque in Bandar Seri Begawan

By late March, the ship was off Australia and ports were closing due to the coronavirus pandemic. They managed to dock in Perth, but catching a flight home was another matter. Borders within Australia were closing, flights were disrupted, and Roy had another adventure getting back to the United States. He's still not quite home, but is staying with a relative. He's put her to work driving him around to take a few photos.

Roy began taking photos before World War II using his mother's Kodak bellows camera when he was 8 years old. Since then he's photographed with a Zeiss Contax, Argos, Nikon, and Canon cameras. He currently uses a Nikon D3. Over the years, he's photographed in 218 countries, all 50 U.S. states, and every Canadian province.

Welcome back Roy!

Trending topics across languages; auto-detecting bias

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

What is trending on (which) Wikipedia?

- Reviewed by Isaac Johnson

"What is Trending on Wikipedia? Capturing Trends and Language Biases Across Wikipedia Editions" by Volodymyr Miz, Joëlle Hanna, Nicolas Aspert, Benjamin Ricaud, and Pierre Vandergheynst of EPFL, published at WikiWorkshop as part of The Web Conference 2020, examines what topics trend on Wikipedia (i.e. attract high numbers of pageviews) and how these trending topics vary by language.[1] Specifically, the authors study aggregate pageview data from September - December of 2018 for English, French, and Russian Wikipedia. In the paper, trending topics are defined as clusters of articles that are linked together and all receive a spike in pageviews over a given period of time. Eight high-level topics are identified that encapsulate most of the trending articles (football, sports other than football, politics, movies, music, conflicts, religion, science, and video games). Articles are mapped to these high-level topics through a classifier trained over article extracts in which the labeled data comes from a set of articles that were labeled via heuristics such as the phrase "(album)" being in the article title indicating music.

The authors find a number of topics that span language communities in popularity, as well as topics that are much more locally popular (e.g., specific to the United States or France or Russia). Singular events (e.g., a hurricane that has a specific Wikipedia article) often lead to tens of related pages (e.g., about past hurricanes or scientific descriptions) receiving correlated spikes. This is a trend that has been especially apparent with the current pandemic, as pages adjacent to main pandemic such as social distancing, past pandemics, or regions around the world have also received high spikes in traffic. They discuss how these trending topics relate to the motivations of Wikipedia readers, geography, culture, and artifacts such as featured articles or Google doodles.

It is always exciting to see work that explicitly compares language editions of Wikipedia. Highlighting these similarities and differences as well as developing methods to study Wikipedia across languages are valuable contributions. While it is interesting to explore differences in interest across languages, these types of analyses can also help recommend what types of articles are valuable to be translated into a given language and will hopefully be further developed with some of these applications in mind. The authors identify that Wikidata shows promise in improving their approach to labeling articles with topics. It should be noted that Wikimedia has also recently developed approaches to identifying the topics associated with an article that have greater coverage (i.e. ~60 topics instead of 8) and are based on the WikiProject taxonomy. This has been expanded experimentally to Wikidata as well (see here).

For more details, see:

- Author's talk at WikiWorkshop: https://www.youtube.com/watch?v=Oa6WPOv6sHQ

- Visualizations: https://wiki-insights.epfl.ch/wikitrends/

- Code: https://github.com/epfl-lts2/sparkwiki

- Earlier work by these authors: April 2019, November 2019, December 2019

Briefly

- See also in this month's Signpost issue: "Open data and COVID-19: Wikipedia as an informational resource during the pandemic"

Other recent publications

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions, whether reviewing or summarizing newly published research, are always welcome.

- Compiled by Tilman Bayer

"Automatically Neutralizing Subjective Bias in Text"

From the abstract:[2]

From the abstract: "we introduce a novel testbed for natural language generation: automatically bringing inappropriately subjective text into a neutral point of view ("neutralizing" biased text). We also offer the first parallel corpus of biased language. The corpus contains 180,000 sentence pairs and originates from Wikipedia edits that removed various framings, presuppositions, and attitudes from biased sentences. Last, we propose two strong encoder-decoder [algorithm] baselines for the task [of 'neutralizing' biased text]."

Among the example changes the authors quote from their corpus:

| Original | New (NPOV) version |

|---|---|

| A new downtown is being developed which will bring back... | A new downtown is being developed which [...] its promoters hope will bring back... |

| Jewish forces overcome Arab militants. | Jewish forces overcome Arab forces. |

| A lead programmer usually spends his career mired in obscurity. | Lead programmers often spend their careers mired in obscurity. |

As example output for one of their algorithms, the authors present the change from

- John McCain exposed as an unprincipled politician

to

- John McCain described as an unprincipled politician

"Neural Based Statement Classification for Biased Language"

The authors construct a RNN (Recurrent Neural Network) able to detect biased statements from the English Wikipedia with 91.7% precision, and "release the largest corpus of statements annotated for biased language". From the paper:[3]

"We extract all statements from the entire revision history of the English Wikipedia, for those revisions that contain the POV tag in the comments. This leaves us with 1,226,959 revisions. We compare each revision with the previous revision of the same article and filter revisions where only a single statement has been modified.[...] The final resulting dataset leaves us with 280,538 pov-tagged statements. [...] we [then] ask workers to identify statements containing phrasing bias in the Figure Eight platform. Since labeling the full pov-tagged dataset would be too expensive, we take a random sample of 5000 statement from the dataset. [...] we present our approach for classifying biased language in Wikipedia statements [using] Recurrent Neural Networks (RNNs) with gated recurrent units (GRU)."

Dissertation about data quality in Wikidata

From the abstract:[4]

"This thesis makes a threefold contribution: (i.) it evaluates two previously uncovered aspects of the quality of Wikidata, i.e. provenance and its ontology; (ii.) it is the first to investigate the effects of algorithmic contributions, i.e. bots, on Wikidata quality; (iii.) it looks at emerging editor activity patterns in Wikidata and their effects on outcome quality. Our findings show that bots are important for the quality of the knowledge graph, albeit their work needs to be continuously controlled since they are potentially able to introduce different sorts of errors at a large scale. Regarding human editors, a more diverse user pool—in terms of tenure and focus of activity—seems to be associated to higher quality. Finally, two roles emerge from the editing patterns of Wikidata users, leaders and contributors. Leaders [...] are also more involved in the maintenance of the Wikidata schema, their activity being positively related to the growth of its taxonomy."

See also earlier coverage of a related paper coauthored by the same author: "First literature survey of Wikidata quality research"

Nineteenth-century writers important for Russian Wiktionary

From the abstract:[5]

"The quantitative evaluation of quotations in the Russian Wiktionary was performed using the developed Wiktionary parser. It was found that the number of quotations in the dictionary is growing fast (51.5 thousands in 2011, 62 thousands in 2012). [...] A histogram of distribution of quotations of literary works written in different years was built. It was made an attempt to explain the characteristics of the histogram by associating it with the years of the most popular and cited (in the Russian Wiktionary) writers of the nineteenth century. It was found that more than one-third of all the quotations (the example sentences) contained in the Russian Wiktionary are taken by the editors of a Wiktionary entry from the Russian National Corpus."

The top authors quoted are: 1. Chekhov 2. Tolstoy 3. Pushkin 4. Dostoyevsky 5. Turgenev

"Online Disinformation and the Role of Wikipedia"

From the abstract:[6]

"...we perform a literature review trying to answer three main questions: (i) What is disinformation? (ii) What are the most popular mechanisms to spread online disinformation? and (iii) Which are the mechanisms that are currently being used to fight against disinformation?. In all these three questions we take first a general approach, considering studies from different areas such as journalism and communications, sociology, philosophy, information and political sciences. And comparing those studies with the current situation on the Wikipedia ecosystem. We conclude that in order to keep Wikipedia as free as possible from disinformation, it is necessary to help patrollers to early detect disinformation and assess the credibility of external sources."

"Assessing the Factual Accuracy of Generated Text"

This paper by four Google Brain researchers describes automated methods for estimating the factual accuracy of automatic Wikipedia text summaries, using end-to-end fact extraction models trained on Wikipedia and Wikidata.[7]

"Revision Classification for Current Events in Dutch Wikipedia Using a Long Short-Term Memory Network"

From the abstract:[8]

"Wikipedia contains articles on many important news events, with page revisions providing near real-time coverage of the developments in the event. The set of revisions for a particular page is therefore useful to establish a timeline of the event itself and the availability of information about the event at a given moment. However, many revisions are not particularly relevant for such goals, for example spelling corrections or wikification edits. The current research aims [...] to identify which revisions are relevant for the description of an event. In a case study a set of revisions for a recent news event is manually annotated, and the annotations are used to train a Long Short Term Memory classifier for 11 revision categories. The classifier has a validation accuracy of around 0.69 which outperforms recent research on this task, although some overfitting is present in the case study data."

"DBpedia FlexiFusion: the Best of Wikipedia > Wikidata > Your Data"

From the abstract and acknowledgements:[9]

"The concrete innovation of the DBpedia FlexiFusion workflow, leveraging the novel DBpedia PreFusion dataset, which we present in this paper, is to massively cut down the engineering workload to apply any of the [existing DBPedia quality improvement] methods available in shorter time and also make it easier to produce customized knowledge graphs or DBpedias.[...] our main use case in this paper is the generation of richer, language-specific DBpedias for the 20+ DBpedia chapters, which we demonstrate on the Catalan DBpedia. In this paper, we define a set of quality metrics and evaluate them for Wikidata and DBpedia datasets of several language chapters. Moreover, we show that an implementation of FlexiFusion, performed on the proposed PreFusion dataset, increases data size, richness as well as quality in comparison to the source datasets." [...] The work is in preparation to the start of the WMF-funded GlobalFactSync project (https://meta.wikimedia.orgview_html.php?sq=Envato&lang=en&q=Grants:Project/DBpedia/GlobalFactSyncRE ).

"Improving Neural Question Generation using World Knowledge"

From the abstract and paper:[10]

"we propose a method for incorporating world knowledge (linked entities and fine-grained entity types) into a neural question generation model. This world knowledge helps to encode additional information related to the entities present in the passage required to generate human-like questions. [...] . In our experiments, we use Wikipedia as the knowledge base for which to link entities. This specific task (also known as Wikification (Cheng and Roth, 2013)) is the task of identifying concepts and entities in text and disambiguation them into the most specific corresponding Wikipedia pages."

Concurrent "epistemic regimes" feed disagrements among Wikipedia editors

From the (English version of the) abstract:[11]

"By analyzing the arguments in a corpus of discussion pages for articles on highly controversial subjects (genetically modified organisms, September 11, etc.), the authors show that [disagreements between Wikipedia editors] are partly fed by the existence on Wikipedia of concurrent 'epistemic regimes'. These epistemic regimes (encyclopedic, scientific, scientistic, wikipedist, critical, and doxic) correspond to divergent notions of validity and the accepted methods for producing valid information."

"ORES: Lowering Barriers with Participatory Machine Learning in Wikipedia"

From the abstract:[12]

"... we describe ORES: an algorithmic scoring service that supports real-time scoring of wiki edits using multiple independent classifiers trained on different datasets. ORES decouples several activities that have typically all been performed by engineers: choosing or curating training data, building models to serve predictions, auditing predictions, and developing interfaces or automated agents that act on those predictions. This meta-algorithmic system was designed to open up socio-technical conversations about algorithmic systems in Wikipedia to a broader set of participants. In this paper, we discuss the theoretical mechanisms of social change ORES enables and detail case studies in participatory machine learning around ORES from the 4 years since its deployment."

References

- ^ Miz, Volodymyr; Hanna, Joëlle; Aspert, Nicolas; Ricaud, Benjamin; Vandergheynst, Pierre (17 February 2020). "What is Trending on Wikipedia? Capturing Trends and Language Biases Across Wikipedia Editions". WikiWorkshop (Web Conference 2020): 794–801. arXiv:2002.06885. doi:10.1145/3366424.3383567. ISBN 9781450370240.

- ^ Pryzant, Reid; Martinez, Richard Diehl; Dass, Nathan; Kurohashi, Sadao; Jurafsky, Dan; Yang, Diyi (2019-12-12). "Automatically Neutralizing Subjective Bias in Text". arXiv:1911.09709 [cs.CL]., To appear at the 34th AAAI Conference on Artificial Intellegence (AAAI 2020)

- ^ Hube, Christoph; Fetahu, Besnik (2019-01-30). "Neural Based Statement Classification for Biased Language". Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining. WSDM '19. Melbourne VIC, Australia: Association for Computing Machinery. pp. 195–203. doi:10.1145/3289600.3291018. ISBN 9781450359405.

- ^ Piscopo, Alessandro (2019-11-27), Structuring the world's knowledge: Socio-technical processes and data quality in Wikidata, doi:10.6084/m9.figshare.10998791.v2 (dissertation)

- ^ Smirnov, A.; Levashova, T.; Karpov, A.; Kipyatkova, I.; Ronzhin, A.; Krizhanovsky, A.; Krizhanovsky, N. (2020-01-20). "Analysis of the quotation corpus of the Russian Wiktionary". arXiv:2002.00734 [cs.CL].

- ^ Saez-Trumper, Diego (2019-10-14). "Online Disinformation and the Role of Wikipedia". arXiv:1910.12596 [cs.CY].

- ^ Goodrich, Ben; Rao, Vinay; Liu, Peter J.; Saleh, Mohammad (2019-07-25). "Assessing The Factual Accuracy of Generated Text". Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. KDD '19. Anchorage, AK, USA: Association for Computing Machinery. pp. 166–175. doi:10.1145/3292500.3330955. ISBN 9781450362016.

- ^ Nienke Eijsvogel, Marijn Schraagen: Revision Classification for Current Events in Dutch Wikipedia Using a Long Short-Term Memory Network (short paper). Proceedings of the 31st Benelux Conference on Artificial Intelligence (BNAIC 2019) and the 28th Belgian Dutch Conference on Machine Learning (Benelearn 2019). Brussels, Belgium, November 6-8, 2019.

- ^ Frey, Johannes; Hofer, Marvin; Obraczka, Daniel; Lehmann, Jens; Hellmann, Sebastian (2019). "DBpedia FlexiFusion the Best of Wikipedia > Wikidata > Your Data". In Chiara Ghidini; Olaf Hartig; Maria Maleshkova; Vojtěch Svátek; Isabel Cruz; Aidan Hogan; Jie Song; Maxime Lefrançois; Fabien Gandon (eds.). The Semantic Web – ISWC 2019. Lecture Notes in Computer Science. Cham: Springer International Publishing. pp. 96–112. doi:10.1007/978-3-030-30796-7_7. ISBN 9783030307967.

Author's copy

Author's copy

- ^ Gupta, Deepak; Suleman, Kaheer; Adada, Mahmoud; McNamara, Andrew; Harris, Justin (2019-09-09). "Improving Neural Question Generation using World Knowledge". arXiv:1909.03716 [cs.CL].

- ^ Carbou, Guillaume; Sahut, Gilles (2019-07-15). "Les désaccords éditoriaux dans Wikipédia comme tensions entre régimes épistémiques". Communication. Information Médias Théories Pratiques. 36/2. doi:10.4000/communication.10788. ISSN 1189-3788.

- ^ Halfaker, Aaron; Geiger, R. Stuart (2019-09-11). "ORES: Lowering Barriers with Participatory Machine Learning in Wikipedia". arXiv:1909.05189 [cs.HC].

Wikipedia:An article about yourself isn't necessarily a good thing

- This Wikipedia essay was first written by Sebwite in 2009. Essays are not project guidelines or policies, and may or may not have broad support among the Wikipedia commuity. This essay was written by Sebwite and 273 other editors.

Are you planning to write a Wikipedia article about yourself? Are you planning to pay for someone to write an article on your behalf? Before you proceed, please take some time to thoroughly understand the principles and policies of Wikipedia, especially one of its most important policies, the neutral point of view (NPOV) policy.

Wikipedia seeks neutrality. An article about you written by anyone must be editorially neutral. It will not take sides and will report both the good and the bad about you from verifiable and reliable sources. It will not promote you. It will just contain factual information about you from independent, reliable sources.

This is a mixed blessing.

Some accomplishment or event, good or bad, may give you notability enough to qualify for a Wikipedia article. Once you have become a celebrity, your personal life may be exposed. No one is perfect, so your faults may get reported, and overreported, and reported enough to end up on Wikipedia. Even if you have lived a life free of scandal, and your Wikipedia article is spotless, at some time in the future your first publicized mistake may well end up getting into that article. Suddenly your fame may turn into highly publicized infamy. Yes, Wikipedia is highly publicized! It is mirrored and copied all over the place. Some reporters use Wikipedia as a source for their articles, so information about the mistakes you have made which end up in the Wikipedia article about you and which are covered in independent reliable published sources may be repeated.

Background

An article about yourself is nothing to be proud of. The neutral point of view (NPOV) policy will ensure that both the good and the bad about you will be told, that whitewashing is not allowed, and that the conflict of interest (COI) guideline limits your ability to edit out any negative material from an article about yourself. There are serious consequences of ignoring these, and the "Law of Unintended Consequences" works on Wikipedia.[1] If your faults are minor and relatively innocent, then you have little to fear, but coveting "your" own article isn't something to seek, because it won't be your "own" at all. Once it's in Wikipedia, it is viewed by the world and cannot be recalled.

For example, Tiger Woods is one of the most accomplished golfers, yet his possible fall from grace is mentioned in his article.[1] Michael Phelps holds the record for the most gold medals in Olympic history, but his drunk driving arrests in 2004 and 2014 and a bong photo published in 2009 are both mentioned on Wikipedia, not violating Wikipedia's BLP guidelines. Rolf Harris was regarded as a national treasure for many decades, but his arrest and prosecution for child abuse was widely reported in the mainstream and broadsheet media, and it would be against Wikipedia's neutrality policy to not include it.

Elected officials (such as heads of state), entertainers with commercialized productions, authors of published materials, and professional athletes can reasonably expect various details of their personal lives to receive coverage.

While having a Wikipedia article may make you a celebrity of some sort, be ready to have your personal life exposed. If you are seen at the side of the road being issued a speeding ticket, and that gets reported, it may end up in an article about you. If your house is foreclosed and this gets reported, it may find its way onto Wikipedia. And if you get into an argument with another person in public, someone may report that in a reliable source, and it will be fair game for Wikipedia.

So is a Wikipedia article about yourself good for a resumé? Probably not. On a resumé, you want to tell all the best about yourself. Even if one day the article looks great, it may tell something very different the next. The nature of a wiki is such that information can change at any time. So beware – your boss, your boyfriend/girlfriend, or anyone else you are trying to impress may think one thing of you on one day, and you may lose all that respect by the next. All because of well-verified information in your Wikipedia article.