Some long-overdue retractions

As the Signpost has moved from publishing every month to every two weeks every three weeks every two and a half weeks or so more frequently, we've hit our share of snips and snags, including a couple weeks ago, when a rather brashly opinionated technology report spurred about a hundred kilobytes of discussion, a big-ass thread at administrators' noticeboard slash incidents, and a currently-open request for comment linked to from WP:CENT.

In light of this most recent debacle, I've been going through old Signpost archives in order to find some editorial guidance. What I've found is grim: it turns out this is far from the only time we've made a questionable call on a hot-button issue. In fact, we have run a great number of ill-advised pieces. But under new editorship, we too have the chance to turn over a new leaf. So I'd like to take a few minutes and apologize for some of the times we've gotten it wrong over the years.

Starting from the beginning.[1]

- Wikipedia:Wikipedia Signpost/Time of cold/Opinion, by Grug of Blue Rock Clan: "Hot light from stick bad"

- Basically everything in this piece was a bad call, starting out with blatant personal attacks: "MAN OF TALL TREE TRIBE BRING HOT LIGHT FROM STICK AND SHOW BLUE ROCK CLAN HOW TO MAKE. BUT PROBLEM. MAN OF TALL TREE TRIBE HATE SUN GOD AND WANT TO STEAL HOT LIGHT FROM HIM. SHOULD BE INDEFFED." This just goes to show how much of a Wild West environment Wikipedia was back in the day; Grug was later community-banned, and removed from his seat on the board of Wikimedia Afro-Eurasia, after it was revealed that he had been sockpuppeting for years to discredit hot light from stick.

- Wikipedia:Wikipedia Signpost/First consulship of Marcus Aemilius Lepidus, XVI Aprilis/News and notes, by Marcus Artorius Castus: "Why Vercingetorix had it coming"

- This is a very early Nuntium ac notas, and it shows. It doesn't hold up to modern standards at The Signpost at all: for example, there were non-free images all over the place.

- Wikipedia:Wikipedia Signpost/1633-12-14/Arbitration report, by Benedetto Lucio Anafesto: "Pseudoscience case closes with ruling against GGalilei, topic ban (finally!)"

- To be fair, at that time the political climate was a little different, and the WMF's endowment was being managed by the Camerarius Domini Papae. Still, the article was inexcusably and unduly biased against GGalilei (who even caught a siteban while defending himself in the comments).

- Wikipedia:Wikipedia Signpost/1775-05-06/Wikiproject report, by the Right Honourable Wilford Hottingston, 5th Viscount Frimsy-Wimsy-upon-Rumpcastle: "His Excellency The Most Honourable Charles, Earl Cornwallis and WP:COLONIES coordinator, on fighting POV pushing from anti-Crown extremists"

- It turns out these concerns may have been reasonable after all.

- Wikipedia:Wikipedia Signpost/1815-03-19/In the media, by Pierre de Froufrou: "Bonaparte is advancing with rapid steps, but the Corsican ogre will never enter Paris"[2]

- There was also a lot of stuff in there about him having tiny hands, which has aged poorly.

- Wikipedia:Wikipedia Signpost/1906-01-14/Deletion report, by Jebediah Ezekiel Hegginbottom: "Hoax about supposed 'flying machine' removed swiftly"

- Hegginbottom noted that "aviation experts have said that the alleged heavier-than-air 'flying machine' is in fact a 'glorified kite' and, moreover, its development raises ethical questions." To be fair, they didn't have pics.

- Wikipedia:Wikipedia Signpost/1947-11-14/From the team, by Baldy Falsman: "The Signpost's plan for pushing back against Soviet disinformation"

- Falsman, a former editor-in-chief of The Signpost and member of the Arbitration Committee during the HUAC trials, did indeed lead The Signpost to numerous accolades. However, some of his policies have failed to stand the test of time:

“Anyone who wants to write for The Signpost needs to be screened for ideological sympathies and potential fifth-columnism. Fortunately, this is simple. Our process is to ask them what they think of a world in which every single person on the planet is given free access to the sum of all human knowledge. If they say something like "hell yeah!" or "based!" or "that would be nice", that's how we know they are a Communist, or a homosexual, or some other kind of freak, and we start keeping a close eye on them and their associates.”

- Luckily, those dark days are over, and the English Wikipedia no longer has to deal with any accusations of people being jacked around on account of their political leanings.

- Wikipedia:Wikipedia Signpost/1992-09-01/Technology report, by Hubert Glockenspiel: "Why putting Wikipedia on the 'World Wide Web' would accomplish nothing"

- Well, I guess maybe this is true, from certain perspectives. However, many of Glockenspiel's claims were objectionable, e.g. "That's a teletype. Congratulations, techbros, you literally invented a teletype."

- Wikipedia:Wikipedia Signpost/2023-04-03/From the editor, by JPxG: "Some long-overdue retractions"

- Since this was published on April 3, the idea of doing an April Fools' Day bit makes no sense. Frankly, the whole idea was kind of stupid, especially when we have gotten hauled to AN/I over Fools' bits before, and this one was very closely skirting the line to begin with (referring, as it did, to ongoing dispute resolution processes involving The Signpost). The decision to eschew the {{humor}} template was simply asking for trouble, as was the unfunny extended meta-reference at the end. Moreover, that JPxG guy was a jackass.

Notes

- ^ Note that the stories and information posted in this article are artistic works of fiction and falsehood. Only a fool would take anything written here as fact.

- ^ While this is a funny post, and it is often claimed to have been an actual headline in a French newspaper (the Moniteur) in 1815 during Napoleon's return from exile, it is likely apocryphal: its only source is an 1841 retrospective by Alexandre Dumas, and no headlines like the ones he described are known from that time!

Sounding out, a universal code of conduct, and dealing with AI

Wikipedia's new sound logo

Wikipedia's new sound logo has been rolled out, as announced on Wikimedia News.

The Verge says the winner of the contest to create the sound was Thaddeus Osborne, "a nuclear engineer and part-time music producer from Virginia". Osborne describes the sound design as a combination of whirring pages and clicking keys.

Gizmodo says the sound is "cute". – B

WMF board has ratified the UCoC Enforcement Guidelines

The WMF has announced that the WMF board ratified the Universal Code of Conduct Enforcement Guidelines on 9 March 2023. This means the Enforcement Guidelines are now in force and—

may not be circumvented, eroded, or ignored by Wikimedia Foundation officers or staff nor local policies of any Wikimedia project.

A Voter Comments Report summarising community comments made as part of the recent community vote on the Enforcement Guidelines (see previous Signpost coverage) has been published as well.

The Enforcement Guidelines now in force state:

Enforcement of the UCoC by local governance structures will be supported in multiple ways. Communities will be able to choose from different mechanisms or approaches based on several factors such as: the capacity of their enforcement structures, approach to governance, and community preferences. Some of these approaches can include:

- An Arbitration Committee (ArbCom) for a specific Wikimedia project

- An ArbCom shared amongst multiple Wikimedia projects

- Advanced rights holders enforcing local policies consistent with the UCoC in a decentralized manner

- Panels of local administrators enforcing policies

- Local contributors enforcing local policies through community discussion and agreement

As for systemic failure to follow or enforce the Code, the Guidelines state:

Systemic failure to follow the UCoC

- Handled by U4C

- Some examples of systemic failure include:

- Lack of local capacity to enforce the UCoC

- Consistent local decisions that conflict with the UCoC

- Refusal to enforce the UCoC

- Lack of resources or lack of will to address issues

The "U4C" here refers to the UCoC Coordinating Committee that the WMF will form. The adoption of the Enforcement Guidelines attracted press coverage (see this issue's In the media section); the underlying Wikimedia Foundation press release is here. – AK

Policy on large language models still under development

An attempt to create a policy about AI-generated articles is happening at Wikipedia:Large language models.

The draft policy as of this writing includes reiterations of existing content policies, including no original research and verifiability. The draft adds that in-text attribution is necessary for AI-generated content.

In related news, the Wikimedia Foundation has published a "Copyright Analysis of ChatGPT" (which, despite the title, also touches on the subject of AI-generated images), and on March 23 held a community call on the topic of "Artificial Intelligence in Wikimedia" (meeting notes). – B & T

Brief notes

- New user-groups: The Affiliations Committee announced the approval of this month's newest Wikimedia movement affiliate, the Wiki Advocates Philippines User Group.

- Milestones: The following Wikimedia projects reached milestones this fortnight: Kinyarwanda Wikipedia reached 5,000 articles. Pennsylvania German Wikipedia reached 2,000 articles. Angika Wikipedia reached 1,000 articles.

- Global bans: Safaque / Safaque123 / Podcaster7 / TP495 / AAComposer / Drbenzene / InitiumNovum, since 21 March 2023; Samatics, since 28 March 2023.

- Articles for Improvement: This week's Article for Improvement is Zechariah (Hebrew prophet), to be followed by St. Lawrence River on 10 April. Please be bold in helping improve these articles!

- Not deleted, again: Administrators' noticeboard/Incidents survived its fourth nomination for deletion.

Twiddling Wikipedia during an online contest, and other news

Help correct outdated or incomplete coverage about climate change

Canary Media, an affiliate of the activist non-profit RMI, reports that Wikipedia has a climatetech problem. They urge "climatetech professionals" to edit articles because "The problem is that Wikipedia is often out of date, particularly when it comes to emerging or fast-changing subjects such as clean energy and decarbonization."

WMF staffer Alex Stinson is quoted giving some good advice in a 2020 article, as well as on a WikiProject page, including how to find malicious edits, flag bad information, and mark a missing citation. – S

Using Wikipedia to win a lottery? There are no free airline tickets

The Hong Kong International Airport sponsored free airline tickets to let tourists know that the city was open for business after a long COVID slowdown. The plan was to give away 500,000 tickets via multiple lotteries, including 80,000 to be distributed by Cathay Pacific. According to Mothership, Cathay Pacific was to give out 12,500 of those tickets for the Singapore-Hong Kong route to people who applied between March 2 and March 8. All you had to do to enter the contest was fill out an online form and answer some trivia questions about the history of Cathay Pacific. According to pageview data, about 6,000 more people than usual visited the English Wikipedia's article about Cathay Pacific on March 2 to find the answers. Meanwhile, about five people, using IP addresses traceable to Singapore, made about a dozen edits "to prevent others from winning". For example, the founding date in the article was changed from 1946 to 1947 and then to 1949. In the first 43 minutes that registration was open, 100,000 people entered the contest, and the contest was closed early. A Wikipedia admin locked the article at about the same time.

Cathay Pacific named the winners on time on March 20, as Mothership reported. It turns out the "free tickets" weren't really free, because a fuel surcharge, taxes, and other fees had to be paid to get them. The net you needed to pay: S$194.50 (about US$145) to get the S$474.50 tickets. – S

In brief

- Streaming satire: Hard Drive, a satirical site recently in the news for its spat with Elon Musk, "reports" that Wikipedia's biography of an up-and-coming streamer includes a controversy section saying nothing but "TBD". The site quotes a fictitious Wikipedian as saying: "These things are just inevitable; might as well save some time now."

- Universal Code of Conduct Enforcement Guidelines attract press coverage in India: See article in the Indian Express and article in The Print.

- Cochrane–Wikipedia partnership: Medical research charity Cochrane reports on its partnership with Wikipedia in 2023: "Given that so many people are consulting Wikipedia on a daily basis, we feel that Cochrane's commitment to producing and sharing high quality health evidence includes sharing that evidence where people are accessing it," they say.

- Penn State editathon: Penn State is hosting a virtual editathon focusing on Native American women.

- When to use Wikipedia: In "Is Wikipedia a good source? When to use the online encyclopedia – and when to avoid it", two college librarians explain Wikipedia to classic rock station WRQE. Mostly good advice, though it seems they haven't discovered the History tab yet, since they recommend using the Internet Archive to view old article versions. Also, their commentary on indigenous oral history is relevant only if the oral history isn't already recorded somewhere.

- Fools of April: A Reddit account "WikipediaHistorian" asked people not to vandalize articles this year. Good luck with that.

- Cute sound logo: A few media sources noticed the winning entry in the contest for Wikipedia's new sound logo: [1] [2] [3] [4] (See related coverage at WP:Wikipedia Signpost/2023-04-03/News and notes.)

"World War II and the history of Jews in Poland" case is ongoing

- Note: An RfC potentially limiting The Signpost's coverage of active arbitration cases was opened 17 March, and is active as of the writing deadline.

World War II and the history of Jews in Poland

Wikipedia:Arbitration/Requests/Case/World War II and the history of Jews in Poland was accepted 13 March. New parties were added to the case as recently as 24 March.

The case timeline (target dates according to the clerks):

- Evidence phase 1 closes 6 April 2023

- Evidence phase 2: 13 April 2023 – 27 April 2023

- Analysis closes 27 April 2023

- Proposed decision to be posted by 11 May 2023

Scope: Conduct of named parties in the topic areas of World War II history of Poland and the history of the Jews in Poland, broadly construed

In accepting the case, arbitrator CaptainEek said "we've received scholarly rebuke for our actions, and it is apparent that the entire Holocaust in Poland topic area is broken", referring to an academic paper about the management of English Wikipedia's editing process on the topic (see prior Signpost coverage in In the media, issue 4, and Recent research, issue 6).

De-sysop request

An editor has requested that ArbCom de-sysop an admin, Dbachmann, at Wikipedia:Arbitration/Requests#Dbachmann.

Hail, poetry! Thou heav'n-born maid


Jerusalem at the centre, an O round a T

That's how you map things medievally

(Bünting Clover Leaf Map by Heinrich Bünting, a new featured picture)

Back in 2014 we did a poetical, jokey featured content report for April Fools'. We haven't done this in recent years, particularly as the monthly schedule meant that we rarely had a suitable date for it. But this year...

- Poetry rising! The comedy beckoned!

- I just wish I had started before April Second.

Featured articles

Ten articles were promoted to Featured article status this period.

- Boulton and Park, nominated by SchroCat

- Back when men were men, and women were too,

- Two gay men knew what to do.

- As Fanny and Stella they trod the boards,

- And with police they crossed their swords.

- Thekla (daughter of Theophilos), nominated by Iazyges

- Co-empress deposed, to convent she goes

- Then left to be mistress for Basil the First.

- When she fell out of favour, another's dick she did savour.

- Ain't Byzantine relations the worst?

- Goodwin Fire, nominated by Vami IV

- Burning passion is good, burning Arizona is not

- But at least no-one burned when the desert got hot.

- Battle of Saseno, nominated by Cplakidas (a.k.a. Constantine)

- In the War of Saint Sabas the Genovese grab as

- The Venetian trade convoy sails on past 'em

- They stole a great treasure, quite a bit to their pleasure

- By hiding attackers near Malta's great bastion.

- 1950–51 Gillingham F.C. season, nominated by ChrisTheDude

- When Gillingham reentered the Third Division

- They struggled to maintain their new position.

- Albert Levitt, nominated by Wehwalt

- As politician, judge, and lawyer, he's fine,

- But awful hard to sum up in the poetical line.

- Richard Roose, nominated by Serial Number 54129 (a.k.a. SN54129)

- A poisoner (perhaps: is Renaissance law fair?)

- They said he took porridge and put powder in there.

- Two people died, the eighth Henry strived to make poisoning a treason,

- Poor Richard Roose was boiled like a goose, the treason was the reason.

- Marriage License, nominated by Guerillero

- A bored official watches as a young couple signs the banns:

- Norman Rockwell once again shows why he has so many fans.

- Martinus (son of Heraclius), nominated by Iazyges

- His father's Heraclius, his cousin is his mother,

- Byzantine sexual weirdness really ain't like any other!

- Otto Klemperer, nominated by Tim riley

- He was loved by Mahler, and so Klemperer became

- A well-beloved conductor with an internat'nal fame.

Featured pictures

Six featured pictures were promoted this period, including the ones at the top and bottom of this article.


Rhina Aguirre by the staff photographer for the Chamber of Senators of Bolivia

Featured lists

One featured list was promoted this period.

- List of afrosoricids, nominated by PresN

- An order of placental mammals, they have yet again

- Become a featured list through the great work of PresN.


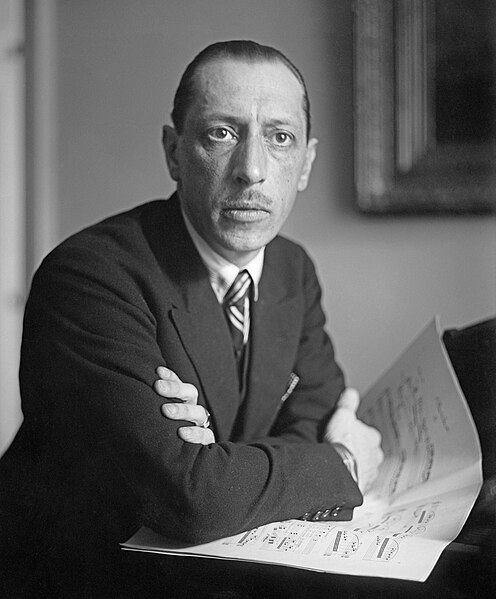

Stravinsky restored, but not by me

A talented new fellow has joined FPC

(Igor Stravinsky by Bain News Service, restored by MyCatIsAChonk)

Language bias: Wikipedia captures at least the "silhouette of the elephant", unlike ChatGPT

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

"A Perspectival Mirror of the Elephant: Investigating Language Bias on Google, ChatGPT, Wikipedia, and YouTube"

This arXiv preprint[1] (which according to the authors grew out of a student project for a course titled "Critical Thinking in Data Science" at Harvard University) finds that

[...] Google and its most prominent returned results – Wikipedia and YouTube – simply reflect the narrow set of cultural stereotypes tied to the search language for complex topics like "Buddhism," "Liberalism," "colonization," "Iran" and "America." Simply stated, they present, to varying degrees, distinct information across the same search in different languages (we call it 'language bias'). Instead of presenting a global picture of a complex topic, our online searches turn us into the proverbial blind person touching a small portion of an elephant, ignorant of the existence of other cultural perspectives.

Regarding Wikipedia, the authors note it "is an encyclopedia that provides summaries of knowledge and is written from a neutral point of view", concluding that

[...] even though the tones of voice and views do not differ much in Wikipedia articles across languages, topic coverage in Wikipedia articles tends to be directed by the dominant intellectual traditions and camps across different language communities, i.e., a French Wikipedia article focuses on French thinkers, and a Chinese article stresses on Chinese intellectual movements. Wikipedia’s fundamental principles or objectives filter language bias, making it heavily rely on intellectual and academic traditions.

While the authors employ some quantitative methods to study the bias on the other three sites (particularly Google), the Wikipedia part of the paper is almost entirely qualitative in nature. It focuses on an in-depth comparison of a small set of (quite apparently non-randomly chosen) article topics across languages, not unlike various earlier studies of language bias on Wikipedia (e.g. on the coverage of the Holocaust in different languages, see our previous coverage here and here). Unfortunately, the paper fails to cite such earlier research (which has also included quantitative results, such as those represented in the "Wikipedia Diversity Observatory", which among other things includes data on topic coverage across 300+ Wikipedia languages), despite asserting "there has been a lack of investigation into language bias on platforms such as Google, ChatGPT, Wikipedia, and YouTube".

The first and largest part of the paper's examination of Wikipedia's coverage concerns articles about Buddhism and various subtopics, in the English, French, German, Vietnamese, Chinese, Thai, and Nepali Wikipedias. The authors indicate that they chose this topic starting out from the observation that

To Westerners, Buddhism is generally associated with spirituality, meditation, and philosophy, but people who primarily come from a Vietnamese background might see Buddhism as closely tied to the lunar calendar, holidays, mother god worship, and capable of bringing good luck. One from a Thai culture might regard Buddhism as a canopy against demons, while a Nepali might see Buddhism as a protector to destroy bad karma and defilements.

Somewhat in confirmation of this hypothesis, they find that

Compared to Google’s language bias, we find that Wikipedia articles' content titles mainly differ in topic coverage but not much in tones of voice. The preferences of topics tend to correlate with the dominant intellectual traditions and camps in different language communities.

However, the authors also observe that "randomness is involved to some degree in terms of topic coverage on Wikipedia", defying overly simplistic predictions of biases based on intellectual traditions. E.g.

Looking at the Chinese article on "Buddhism", it addresses topics like "dharma name", "cloth", and "hairstyle" that do not exist on other languages' pages. There are several potential causes for its special treatment on these issues. First, many Buddhist texts, such as the Lankavatara Sutra (楞伽经) and Vinaya Piṭaka (律藏), that address these issues were translated into Chinese during medieval China, and these texts are still widely circulated in China today. Second, according to the official central Chinese government statistics, there are over 33,000 monasteries in China, so people who are interested in writing Wikipedia articles might think it is helpful to address these issues on Wikipedia. However, like the pattern in the French article, Vietnam, Thailand, and Nepal all have millions of Buddhist practitioners, and the Lankavatara Sutra and Vinaya Piṭaka are also widely circulated among South Asian Buddhist traditions, but their Wikipedia pages do not address these issues like the Chinese article.

A second, shorter section focuses on comparing Wikipedia articles on liberalism and Marxism across languages. Among other things, it observes that the "English article has a long section on Keynesian economics", likely due to its prominent role in the New Deal reforms in the US in the 1930s. In contrast,

In the French article on liberalism, the focus is not solely on the modern interpretation of the term but rather on its historical roots and development. It traces its origins from antiquity to the Renaissance period, with a focus on French history. It also highlights the works of French theorists such as Montesquieu and Tocqueville [...]. The Italian article has a lengthy section on "Liberalism and Christianity" because liberalism can be seen as a threat to the catholic church. Hebrew has a section discussing the Zionist movement in Israel. The German article is much shorter than the French, Italian, and Hebrew ones. Due to Germany's loss in WWII, its post-WWII state was a liberal state and was occupied by the Allied forces consisting of troops from the U.S., U.K., France, and the Soviet Union. This might have influenced Germany's perception and approach to liberalism.

Among other proposals for reducing language bias on the four sites, the paper proposes that

"[Wikipedia] could potentially invite scholars to contribute articles in other languages to improve the multilingual coverage of the site. Additionally, Wikipedia could merge non-overlapping sections of articles on the same term but written in different languages into a single article, like how multiple branches of code are merged on GitHub. Like Google, Wikipedia could translate the newly inserted paragraphs into the user’s target language and place a tag to indicate its source language."

Returning to their title metaphor, the authors give Wikipedia credit for at least "show[ing] a rough silhouette of the elephant", whereas e.g. Google only "presents a piece of the elephant based on a user's query language". However, this "silhouette – topic coverage – differs by language. [Wikipedia] writes in a descriptive tone and contextualizes first-person narratives and subjective opinions as cultural, historical, or religious phenomena." YouTube, on the other hand, "displays the 'color' and 'texture' of the elephant as it incorporates images and sounds that are effective in invoking emotions." But its top-rated videos "tend to create a more profound ethnocentric experience as they zoom into a highly confined range of topics or views that conform to the majority's interests".

The paper singles out the new AI-based chatbots as particularly problematic regarding language bias:

The problem with language bias is compounded by ChatGPT. As it is primarily trained on English language data, it presents the Anglo-American perspective as truth [even when giving answers in other languages] – as if it were the only valid knowledge.

On the other hand, the paper's examination of the biases of "ChatGPT-Bing" [sic] highlights among other concerns its reliance on Wikipedia among the sources it cites in its output:

[...] all responses list Wikipedia articles as its #1 source, which means that language bias in Wikipedia articles is inevitably permeated in ChatGPT-Bing's answers.

Briefly

- See the page of the monthly Wikimedia Research Showcase for videos and slides of past presentations.

Other recent publications

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions, whether reviewing or summarizing newly published research, are always welcome.

"A systematic review of Wikidata in Digital Humanities projects"

From the abstract:[2]

"A systematic review was conducted to identify and evaluate how DH [Digital Humanities] projects perceive and utilize Wikidata, as well as its potential and challenges as demonstrated through use. This research concludes that: (1) Wikidata is understood in the DH projects as a content provider, a platform, and a technology stack; (2) it is commonly implemented for annotation and enrichment, metadata curation, knowledge modelling, and Named Entity Recognition (NER); (3) Most projects tend to consume data from Wikidata, whereas there is more potential to utilize it as a platform and a technology stack to publish data on Wikidata or to create an ecosystem of data exchange; and (4) Projects face two types of challenges: technical issues in the implementations and concerns with Wikidata’s data quality."

"Leveraging Wikipedia article evolution for promotional tone detection"

From the abstract:[3]

"In this work we introduce WikiEvolve, a dataset for document-level promotional tone detection. Unlike previously proposed datasets, WikiEvolve contains seven versions of the same article from Wikipedia, from different points in its revision history; one with promotional tone, and six without it. This allows for obtaining more precise training signal for learning models from promotional tone detection. [...] In our experiments, our proposed adaptation of gradient reversal improves the accuracy of four different architectures on both in-domain and out-of-domain evaluation."

"Detection of Puffery on the English Wikipedia"

From the abstract:[4]

"Wikipedia’s policy on maintaining a neutral point of view has inspired recent research on bias detection, including 'weasel words' and 'hedges'. Yet to date, little work has been done on identifying 'puffery,' phrases that are overly positive without a verifiable source. We [...] construct a dataset by combining Wikipedia editorial annotations and information retrieval techniques. We compare several approaches to predicting puffery, and achieve 0.963 f1 score by incorporating citation features into a RoBERTa model. Finally, we demonstrate how to integrate our model with Wikipedia’s public infrastructure [at User:PeacockPhraseFinderBot] to give back to the Wikipedia editor community."

"Mapping Process for the Task: Wikidata Statements to Text as Wikipedia Sentences"

From the abstract:[5]

"The shortage of volunteers brings to Wikipedia many issues, including developing content for over 300 languages at the present. Therefore, the benefit that machines can automatically generate content to reduce human efforts on Wikipedia language projects could be considerable. In this paper, we propose our mapping process for the task of converting Wikidata statements to natural language text (WS2T) for Wikipedia projects at the sentence level. The main step is to organize statements, represented as a group of quadruples and triples, and then to map them to corresponding sentences in English Wikipedia. We evaluate the output corpus in various aspects: sentence structure analysis, noise filtering, and relationships between sentence components based on word embedding models."

Among other examples given in the paper, a Wikidata statement involving the items and properties Q217760, P54, Q221525 and P580 is mapped to the Wikipedia sentence "On 30 January 2010, Wiltord signed with Metz until the end of the season."

Judging from the paper's citations, the authors appear to have been unaware of the Abstract Wikipedia project, which is pursuing a closely related effort.

"The URW-KG: a Resource for Tackling the Underrepresentation of non-Western Writers" on Wikidata

From the paper:[6]

"[...] the UnderRepresented Writers Knowledge Graph (URW-KG), a dataset of writers and their works targeted at assessing and reducing their potential lack of representation [...] has been designed to support the following research objectives (ROs):

1. Exploring the underrepresentation of non-Western writers in Wikidata by aligning it with external sources of knowledge; [...]

A quantitative overview of the information retrieved from external sources [Goodreads, Open Library, and Google Books] shows a significant increase of works (they are 16 times more than in Wikidata) as well as an increase of the information about them. External resources include 787,125 blurbs against the 40,532 present in Wikipedia, and both the number of subjects and publishers extentively grow.

[...] the impact of data from OpenLibrary and Goodreads is more significant for Transnational writers [...] than for Western [...]. This means that the number of Transnational works gathered from external resources is higher, reflecting the wider [compared to Wikidata] preferences of readers and publishers in these crowdsourcing platforms."

References

- ^ Luo, Queenie; Puett, Michael J.; Smith, Michael D. (2023-03-28). "A Perspectival Mirror of the Elephant: Investigating Language Bias on Google, ChatGPT, Wikipedia, and YouTube". arXiv:2303.16281 [cs.CY].

- ^ Zhao, Fudie (2022-12-28). "A systematic review of Wikidata in Digital Humanities projects". Digital Scholarship in the Humanities. 38 (2). doi:10.1093/llc/fqac083. ISSN 2055-7671.

- ^ De Kock, Christine; Vlachos, Andreas (May 2022). "Leveraging Wikipedia article evolution for promotional tone detection". Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). ACL 2022. Dublin, Ireland: Association for Computational Linguistics. pp. 5601–5613. doi:10.18653/v1/2022.acl-long.384. Data

- ^ Bertsch, Amanda; Bethard, Steven (November 2021). "Detection of Puffery on the English Wikipedia". Proceedings of the Seventh Workshop on Noisy User-generated Text (W-NUT 2021). EMNLP-WNUT 2021. Online: Association for Computational Linguistics. pp. 329–333. code

- ^ Ta, Hoang Thang; Gelbukha, Alexander; Sidorov, Grigori (2022-10-23). "Mapping Process for the Task: Wikidata Statements to Text as Wikipedia Sentences". arXiv:2210.12659 [cs.CL].

- ^ Stranisci, Marco Antonio; Spillo, Giuseppe; Musto, Cataldo; Patti, Viviana; Damiano, Rossana (2022-12-21). "The URW-KG: a Resource for Tackling the Underrepresentation of non-Western Writers". arXiv:2212.13104 [cs.CL].

April Fools' through the ages

To simplify things, years in the headers will link to the documentation for all pranks that year. The Signpost coverage – where available – will be linked in the text highlighting some of the best or most controversial pranks. Since the early days of Wikipedia tended to have the biggest pranks, the second half will cover rather more years.

2004: Let's delete the main page!

We didn't have The Signpost to document Wikipedia's first April Fools' Day, and it was fairly tame compared to later years: a proposal to delete the Main Page, an attempt to block localhost for vandalism, and other jokes about things that would later happen for real. We did get one rather good news item on our main page:

- Santa Claus' elves go on strike at the North Pole, they threaten that if talks don't resume by noon EST, they will become elf-employed.

...but it was short-lived, and compared to what was to come....

2005: Britannica takes over Wikipedia, fake articles get flushed down the loo

While having an Arbitration Committee was controversial in 2005, publishing a blatant hoax as the featured article on the main page and announcing Wikipedia's imminent takeover by Britannica was apparently fine and dandy. And things got more and more goofy as the day went on:


Just some of what today we would consider outright vandalism that struck that day. Check out the tragic deaths right next to blatant hoaxes!

Even the interface changed. The "edit this page" link text was replaced with "vandalise this page". And then later...


Things got weirder from there.

Our coverage attempts to dig through this chaos. An attempt was made to set some rules, and the original plan for this year – just running a silly article that sounded fake but was actually real – would be used in later years, instead of inventing fancy mediæval toilet paper holders.

2006: The last hurrah of screwing with the interface; paid editing for all

More user interface shenanigans: the "delete" tab became "baleeted". Cyde changed "My watchlist" to "Stalked pages", and was blocked accordingly. Drini (later renamed Magister Mathematicae) wasn't blocked for his unprotection of the main page, though.

I'd say the meanest prank, however, was adding this to the community bulletin board:

- Special Notice: Due to generous donations by several large corporations, Wikipedia can now afford to pay editors. All editors with over 1000 edits are elible to apply. For details on how to register for the payroll, CLICK HERE.

Paid editing for all?

Our coverage is here.

2007:

This was the first year The Signpost missed out on any coverage. The big innovation this year was finally implementing Raul's idea from 2005 for Today's featured article:

George Washington was an early inventor of instant coffee, and worked to ensure a full supply to soldiers fighting at the front. Early on, his campaign was based in Brooklyn, but later he crossed into New Jersey toward a more profitable position. In the countryside, he demonstrated a love of wild creatures, and was often seen with a bird or a monkey on his shoulder. Washington's choice beverage was taken up by the soldiers for its psychoactive properties, even though it tasted terrible. Some thought his brewed powder could even remedy the chemical weapons then in use. But, despite this, Washington failed in his first bid for the Presidency, as papers were filed too late, and the nominator forgot to tell him about it. (more...)

Recently featured: New Carissa – Ivan Alexander of Bulgaria – Cleveland

Meanwhile, we rescinded the payments from last year. Recent changes got a new notice:

Wikipedia Announcement: The Wikimedia Foundation has decided there is no other option at the present than to charge people to edit the English Wikipedia. "For too long people have been free to hack this website. It's about time they paid" states Theresa Knott the new funding officer. "Allowing free access to all simply encourages vandalism. By asking for a quid an edit we stop kids vandalising, spammers spamming and edit warriors warrioring " Minor edits will naturally be cheaper, although the exact pricing details have not yet been fully worked out. Debate on this is welcome. All users should register their credit card at Wikipedia:Credit Card Registration by noon on 1.4.07. Otherwise their editing privileges will be suspended. Members of the cabel are, of course, exempt.

2008:

Honestly, the Did You Know section really knocked it out of the park this year:

- ...that the 24 Hours of LeMons includes such penalties as tarring and feathering a racer's car and crushing a car via audience vote (crushing of a car pictured)?

- ...that John F. Kennedy was shot dead in an ambush by government agents who had foreknowledge of his whereabouts?

- ...that in a few villages and towns of southern France and Spain it is illegal to die, and that there are attempts to have the same law in a town in Brazil?

- ...that Weber kettle grills were actually made out of buoys cut in half?

- ...that men are able to be insured against alien impregnation?

- ...that Ben Affleck died while shoveling snow outside of his house, leaving behind an unexpectedly small estate speculated to be worth as little as US$20,000?

- ...that American entrepreneur Timothy Dexter defied the popular idiom and actually made a profit when he sold coal to Newcastle?

- ...that six latrines at Black Moshannon State Park in Pennsylvania are listed on the National Register of Historic Places?

- ...that in 1976, people reported feeling a floating sensation as they jumped in the air, caused by a Jovian–Plutonian gravitational effect (Jupiter pictured)?

- ...that Wiener sausages are named after the mathematician Norbert Wiener?

- ...that the winner of the Ernie Awards is the person who gets the loudest boos from the audience?

- ...that the 31-mile (50 km) West Rim Trail along the Grand Canyon was selected by Outside Magazine as the best hike in Pennsylvania?

- ...that although presidents of Russia, Ukraine and Kazakhstan have been requested to give technical advice about software patches in open-source computer operating systems, only the Ukrainian president did so?

- ...that James Garner sent two of his associates into a room filled with toxic chlorine gas?

- ...that Jan Wils won a gold medal in architectural design in art competitions at the 1928 Summer Olympics for his design of the Olympic Stadium in Amsterdam?

(Aside: this is one of the illustrations in Wiener sausage.


A short, fat Wiener sausage in 2 dimensions

...That's a wiener, alright.)

The featured article was Ima Hogg, one of those people who probably hated her parents a bit for their naming choices. To quote the article: "She endeavored to downplay her unusual name by signing her first name illegibly and having her stationery printed with 'I. Hogg' or 'Miss Hogg'."

Six administrators were blocked this year, one for making Wikipedia's tagline "From Wikipedia, the free encyclopedia administer [sic] by people with a stick up their lavender passageway". Lovely.

Besides the above-linked article, we also had a short history of April Fools' on Wikipedia.

Finally, my favourite joke nomination at featured picture candidates: "800 x enlargement of a pixel".

2009:

Today's featured article: "The Museum of Bad Art (MOBA) is a world-renowned institution dedicated to showcasing the finest art acquired from Boston-area refuse. The museum started in a pile of trash in 1994, in a serendipitous moment when an antiques dealer came across a painting of astonishing power and compositional incompetence that had been tragically discarded."

Other jokes included a proposal to close English Wikipedia and the dark Terminal Event Management Policy, about what to do on Wikipedia if the world was ending, particularly useful as Skynet was approved to begin operations.

The page that collects jokes also has this hilarious, but undocumented screenshot:

2010:

We briefly covered things, but, honestly, the only thing worth talking about is the main page fun. The featured article was wife selling, which is kind of boring, but DYK once again ruled the roost:

- ... that researchers have identified the pictured life form which no longer lives on this planet?

- ... that The Queen was captured by the Germans in 1916?

- ... that a rain of blood in Germany foreshadowed the coming of the Black Death?

- ... that James Brown flew an F-22 Raptor and survived a fuel leak while traveling at almost the speed of sound?

- ... that despite dying in battle and being beheaded, Máel Brigte of Moray still managed to kill his opponent Sigurd the Mighty, a 10th-century Earl of Orkney, as he rode home afterwards?

- ... that the Duke of Edinburgh won a recorded 95% of the vote in a Greek head of state election, but was never appointed?

- ... that Martin Van Buren was over twenty feet wide?

- ... that residents of Castleford, England, were incensed when their council tried to eliminate Tickle Cock?

- ... that the citizens of Picoazá, Ecuador, elected foot powder as their mayor?

- ... that Cliffe Castle Museum in Keighley, Yorkshire, boasts a wife-soothing cradle (pictured)?

- ... that Bertie Ahern speaks Bertiespeak?

- ... that humpbacked elves are rarely seen because their bodies are microscopic?

- ... that Lindbergh raced an airplane from Washington to New York in under three hours, without ever leaving the ground?

- ... that Robert Louis Stevenson took a pew from South Leith Parish Church?

- ... that Elvis is still alive and teaching soccer at Neil McNeil Catholic Secondary School?

- ... that the first Territorial Governor of Montana, Sidney Edgerton, fought as a Squirrel Hunter during the American Civil War?

- ... that T. rex survives underground in Kenya?

- ... that two Irish musicians described as "tone deaf", and as "not very good" by British prime minister Gordon Brown, have been recently cited as more popular than The Beatles?

- ... that the materials used in the production of a Škoda Fabia car (pictured) in 2007 included margarine and orange sugar paste?

- ... that Tom Cruse was awarded the Medal of Honor for gallantly charging hostile Indians?

- ... that the cod Yorkshire dialect, one on't cross beams gone owt askew on treadle, in Monty Python's "Trouble at Mill" sketch actually means something?

- ... that Guinness Black Lager is a new black lager which is being test marketed in Malaysia by Diageo for sale in the west under its Guinness brand name?

- ... that the yellow morel was once a Phallus?

- ... that Leonid Malashkin was the true composer of Kodály's "Buttocks-Pressing Song"?

- ... that in October 1968, Dumbo was arrested in Las Palmas, Spain?

- ... that Wikipedia covers the whole shebang?

- ... that Perth, Western Australia, got rid of ugly men in 1948?

- ... that Ruth Belville (pictured) and her parents had a business selling people Greenwich Mean Time?

- ... that the Prada Store in Marfa, Texas, is never open?

- ... that Professor Dirk Obbink is an expert on material from garbage heaps and charred remains?

- ... that the Ilkley Museum in Yorkshire, England, is a notable habitat for Brunus edwardii?

- ... that the American television show Glee was written with the aid of Screenwriting for Dummies?

- ... that Frank Hansford-Miller, founder of the English National Party, emigrated to Australia?

- ... that "Everything in Sussex is a She except a Tom Cat and she's a He"?

- ... that buttock mail was a form of punishment for fornication, an alternative to the stool of repentance?

- ... that William Shakespeare was nicknamed "The Merchant of Menace"?

More next issue!

Sus socks support suits, seems systemic

The financial sector of the economy has taken a beating over the last few weeks. Three of the larger mid-sized US banks, Silicon Valley Bank (SVB), Signature Bank, and Silvergate Capital, collapsed or closed their business in March. They had all been important sources of dollars for cryptocurrency traders. The run on SVB was the "largest bank run in modern U.S. history".

Outside the US, Credit Suisse, one of the thirty most systemically important banks in the world, had to be bailed out by another systemically important Swiss bank, UBS, with the help of the Swiss central bank.

Fortunately, the threat of bank runs seems to be over. The US stock market even went up in March. But the finance sector has one eternal problem – the only product it can sell is trust. Bank depositors need to trust that they can get their money back at a moment's notice. Stock market traders should know that buying stocks is risky, but they need to trust that corporate management will treat them fairly, and that they will be treated fairly when the time comes to sell. Without trust, there is no financial sector.

So should we trust banks? Do they lie to us? This article examines this question from a special Wikipedia point of view. On the English-language Wikipedia, do banks follow Wikipedia's rules on paid editing? Or are the articles about banks riddled with falsehoods placed there by sock puppets? I examine the articles about the thirty most systemically important banks in the world, as listed by the Financial Stability Board.

The answers are not uniform for all thirty banks. On average, each of the bank articles examined has been edited by 17.5 socks. The range is very wide, however. The article on France's Groupe BPCE was edited by only one blocked sock, the lowest number. The article on America's Goldman Sachs was edited by 55 blocked socks, the highest number. So it appears that at least some of the world's systemically important banks might have been breaking our rules.

Method

This is not an academic paper – simply a quick overview of an important recent question – but the method used should be explained so that the reader can better follow the evidence presented in the following table and the explanations below it. I've used the same method before in the articles "The oligarchs' socks" last year and "The 'largest con in corporate history'?" last month. It simply involves counting the number of banned or blocked editors who have edited an article, then determining how many of those editors were blocked as sock puppets (users who use multiple accounts to deceive) and how many of those socks were blocked or banned for reasons likely to indicate paid editing. In this case I looked for wording in the block summary or in the sockpuppet investigation such as "undeclared paid editing" or UPE, "terms of use" or ToU, and "conflict of interest" or COI. I also included socks noted as having been blocked by checkusers, which indicates a serious case of socking: such a block can only be lifted by another checkuser. The first step – finding the editors who have been banned or blocked on each article – is easily automated. The remaining steps sometimes involve the use of judgement.
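The sorting step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the script actually used for this article. The `BlockedEditor` record, its fields, and the `classify` and `tally` functions are hypothetical, and a plain keyword match stands in for the human judgement the method calls for. Each blocked editor lands in one of three buckets that mirror the table's columns: socks whose block summary mentions UPE, ToU or COI wording (or that carry a checkuser block), other socks, and all other blocks.

```python
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class BlockedEditor:
    """Hypothetical record for one blocked editor found in an article's history."""
    name: str
    is_sock: bool                  # blocked as a sock puppet?
    block_summary: str             # block summary plus any SPI notes
    checkuser_block: bool = False  # marked as a checkuser block?


# Wording the method looks for; \b stops "UPE" or "COI" matching inside longer words.
PAID_EDITING = re.compile(
    r"undeclared paid editing|\bUPE\b|terms of use|\bToU\b|conflict of interest|\bCOI\b",
    re.IGNORECASE,
)


def classify(editor: BlockedEditor) -> str:
    """Sort one blocked editor into a bucket matching the table's columns."""
    if not editor.is_sock:
        return "other block"         # counted only in the total (column 4)
    if editor.checkuser_block or PAID_EDITING.search(editor.block_summary):
        return "paid/COI/checkuser"  # column 5
    return "other sock"              # column 6


def tally(editors: list[BlockedEditor]) -> Counter:
    """Count how many blocked editors of one article fall into each bucket."""
    return Counter(classify(e) for e in editors)


# Made-up example history for a single article.
editors = [
    BlockedEditor("ExampleVandal", False, "Vandalism"),
    BlockedEditor("ExampleSock1", True, "Sockpuppetry; UPE"),
    BlockedEditor("ExampleSock2", True, "Abusing multiple accounts", checkuser_block=True),
    BlockedEditor("ExampleSock3", True, "Abusing multiple accounts"),
]
print(tally(editors))  # two in column 5, one in column 6, one other block
```

A keyword list like this will miss socks whose paid editing is only described in prose on an investigation page, which is where the manual judgement comes in.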

The bank articles examined are about the thirty most systemically important banks in the world. These banks are all active internationally, are generally large and well known, and are involved in some of the more complex and controversial areas of the banking business, such as derivatives trading. They are selected by the Financial Stability Board because they present the largest risk to the global economy if they were to fail. They should also be the most trustworthy banks in the world, if only because they are the most tightly regulated, both domestically and internationally.

The table below starts with risk class in the first column (the Financial Stability Board officially calls these buckets). JP Morgan Chase, alone in the highest class, would be the most consequential of the 30 systemically important banks listed should it fail. The fourth column gives the total number of editors blocked or banned who have edited the article. This number includes blocked bots, editors blocked for vandalism, and for many other wiki-sins likely unrelated to paid editing. The fifth column is the most important. It includes sock puppets blocked for paid editing or conflict of interest violations, plus official checkuser blocks. The sixth column includes other socks who may or may not be suspected of paid editing.

Many sock puppets and sock farms have edited several of these articles, suggesting that there might be a network of socks operating in banking sector articles. The seventh column lists several socks who have attracted my attention, mostly for the number of these articles they have edited. But others are also listed, such as Russavia and Eostrix, who have become infamous on Wikipedia for their editing or other actions. Similarly I have listed some of the sock farms (large collections of sock puppets apparently working together) in the final column.

| Risk class | Country | Bank | Total blocked or banned | COI, UPE, or checkuser blocked editors | Other blocked socks | Selected socks | Selected sock farms |
|---|---|---|---|---|---|---|---|
| 4 | United States | JP Morgan Chase | 84 | 11 | 20 | Anandmoorti, Cyberfan195, Kkm010, LivinRealGüd | JayJasper, MP1440, Rock5410, VentureKit |
| 3 | United States | Bank of America | 125 | 21 | 28 | Anandmoorti, Coffeedrinker115, Cyberfan195, LivinRealGüd, WikiDon | JayJasper, MP1440, VentureKit, Wikiwriter700, Yoodaba |
| 3 | United States | Citigroup | 88 | 12 | 28 | Anandmoorti, Coffeedrinker115, Cyberfan195, Kkm010, Pig de Wig | JayJasper, MP1440, Rock5410, VentureKit, Wikiwriter700 |
| 3 | United Kingdom | HSBC | 72 | 12 | 21 | Anandmoorti, Coffeedrinker115, Kkm010, WikiDon | GoldDragon, JayJasper, VentureKit, Yoodaba |
| 2 | China | Bank of China | 32 | 2 | 11 | Kkm010, Russavia | Yoodaba |
| 2 | United Kingdom | Barclays | 63 | 7 | 9 | Coffeedrinker115, Cyberfan195, Kkm010 | Rock5410 |
| 2 | France | BNP Paribas | 38 | 8 | 9 | Avaya1, Cyberfan195, Kkm010 | JayJasper, Yoodaba |
| 2 | Germany | Deutsche Bank | 65 | 21 | 17 | Cyberfan195, CLCStudent, Kkm010, LivinRealGüd | Excel23, MP1440, VentureKit, Wikiwriter700, Yoodaba |
| 2 | United States | Goldman Sachs | 108 | 28 | 27 | Cyberfan195, CLCStudent, Glaewnis, Kkm010, LivinRealGüd, Russavia | AlexLevyOne, JayJasper, MP1440, VentureKit, Wikiwriter700, Yoodaba |
| 2 | China | Industrial and Commercial Bank of China | 27 | 3 | 10 | Cyberfan195, Kkm010, Russavia | Yoodaba |
| 2 | Japan | Mitsubishi UFJ Financial Group | 16 | 1 | 2 | Cyberfan195, Kkm010 | |
| 1 | China | Agricultural Bank of China | 26 | 1 | 11 | Cyberfan195, Kkm010, Russavia | |
| 1 | United States | BNY Mellon | 20 | 5 | 3 | Cyberfan195, Kkm010 | JayJasper |
| 1 | China | China Construction Bank | 19 | 0 | 6 | Cyberfan195, Kkm010 | JayJasper |
| 1 | Switzerland | Credit Suisse | 30 | 6 | 5 | Cyberfan195, Kkm010, LivinRealGüd | MP1440 |
| 1 | France | Groupe BPCE | 3 | 0 | 1 | Bitholov | |
| 1 | France | Crédit Agricole | 13 | 0 | 2 | Cyberfan195, Kkm010 | |
| 1 | Netherlands | ING | 38 | 4 | 22 | Anandmoorti, Cyberfan195, Kkm010 | Rock5410, VentureKit |
| 1 | Japan | Mizuho Financial Group | 17 | 2 | 4 | Cyberfan195, Kkm010 | |
| 1 | United States | Morgan Stanley | 44 | 8 | 12 | CLCStudent, Cyberfan195, Kkm010, LivinRealGüd | MP1440, VentureKit, Wikiwriter700, Yoodaba |
| 1 | Canada | Royal Bank of Canada | 28 | 6 | 9 | Cyberfan195, Eostrix, Torontopedia | GoldDragon, Yoodaba |
| 1 | Spain | Banco Santander | 36 | 7 | 10 | CLCStudent, Cyberfan195, Kkm010 | VentureKit |
| 1 | France | Société Générale | 32 | 6 | 8 | Cyberfan195, Glaewnis, Kkm010 | MP1440 |
| 1 | United Kingdom | Standard Chartered | 31 | 5 | 5 | Cyberfan195, Kkm010 | Rock5410, VentureKit |
| 1 | United States | State Street | 20 | 3 | 2 | Cyberfan195, LivinRealGüd, Pig de Wig | VentureKit, Yoodaba |
| 1 | Japan | Sumitomo Mitsui | 12 | 3 | 1 | Cyberfan195 | |
| 1 | Canada | Toronto-Dominion Bank | 32 | 8 | 4 | Cyberfan195 | Excel23, MP1440 |
| 1 | Switzerland | UBS | 51 | 9 | 10 | Cyberfan195, Kkm010, LivinRealGüd | JayJasper, John254 |
| 1 | Italy | UniCredit | 14 | 0 | 3 | Cyberfan195 | |
| 1 | United States | Wells Fargo | 76 | 11 | 26 | CLCStudent, Cyberfan195, Kkm010, LivinRealGüd, Pig de Wig | Excel23, JayJasper, MP1440, Rock5410, Yoodaba |

Conclusions

The table shows a range of sock puppet editing among these articles on systemically important banks. Some banks, such as the French banks Groupe BPCE and Crédit Agricole, Italy's UniCredit, and the China Construction Bank, show no sock puppeting by the most likely paid editing socks. On the other hand, three banks (America's Goldman Sachs, the Bank of America, and Germany's Deutsche Bank) have had over 20 socks of this type editing their articles. In general, with some exceptions, American banks have had the most edits by the most likely paid editing socks. Chinese and Japanese banks, along with the above French and Italian banks, have the fewest socks of this type editing the articles about them. The two Swiss banks in the news, Credit Suisse and UBS, are in the broad middle ground, with six and nine socks of this type, respectively, editing the articles about them.

The articles on banks in the highest two risk classes tend to have higher indications of socking than other classes and the articles about the lowest risk class have the lowest indications of socking, with the possible exception of Wells Fargo.

The seventh column shows a selected group of editors who have edited the articles and been blocked for socking. Glaewnis, whose block summary reads "UPE – appeal is only to the Arbitration Committee", has edited two of these articles. Kkm010, who edited several articles on the Adani group, edited at least 23 of these articles. Several other now-blocked socks edited multiple articles in this list.

Perhaps the most interesting column in the table is the final one, showing the sock farms that edited multiple articles. The Yoodaba sock farm edited at least 11 of these articles. They are known for editing business articles, especially finance articles, as well as political articles. The JayJasper and VentureKit sock farms edited almost as many.

We remind our readers that no examination based purely on Wikipedia's edit history can prove or disprove whether an editor has been paid to edit articles. Nevertheless, we can say that we have little or no reason to suspect those twelve banks which have fewer than five editors listed in column 5 of paying for Wikipedia editing. Similarly, we might say that the three banks with more than twenty editors listed in column 5 are the most likely among these thirty banks to have paid for Wikipedia editing.
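The counts quoted in these conclusions can be rechecked directly against the table. The Python sketch below is a hand transcription of the table's fifth to eighth columns. The `Row` structure and the `names` helper are hypothetical conveniences, not part of any Wikipedia tool. The script recounts the banks below five and above twenty in column 5, the per-article sock totals (column 5 plus column 6), and how many of the thirty articles Kkm010 and the Yoodaba farm appear on.

```python
from collections import Counter
from typing import NamedTuple


class Row(NamedTuple):
    bank: str
    col5: int    # COI, UPE, or checkuser blocked editors
    col6: int    # other blocked socks
    socks: str   # "Selected socks", comma-separated
    farms: str   # "Selected sock farms", comma-separated (may be empty)


# Transcribed by hand from the table above.
ROWS = [
    Row("JP Morgan Chase", 11, 20, "Anandmoorti, Cyberfan195, Kkm010, LivinRealGüd", "JayJasper, MP1440, Rock5410, VentureKit"),
    Row("Bank of America", 21, 28, "Anandmoorti, Coffeedrinker115, Cyberfan195, LivinRealGüd, WikiDon", "JayJasper, MP1440, VentureKit, Wikiwriter700, Yoodaba"),
    Row("Citigroup", 12, 28, "Anandmoorti, Coffeedrinker115, Cyberfan195, Kkm010, Pig de Wig", "JayJasper, MP1440, Rock5410, VentureKit, Wikiwriter700"),
    Row("HSBC", 12, 21, "Anandmoorti, Coffeedrinker115, Kkm010, WikiDon", "GoldDragon, JayJasper, VentureKit, Yoodaba"),
    Row("Bank of China", 2, 11, "Kkm010, Russavia", "Yoodaba"),
    Row("Barclays", 7, 9, "Coffeedrinker115, Cyberfan195, Kkm010", "Rock5410"),
    Row("BNP Paribas", 8, 9, "Avaya1, Cyberfan195, Kkm010", "JayJasper, Yoodaba"),
    Row("Deutsche Bank", 21, 17, "Cyberfan195, CLCStudent, Kkm010, LivinRealGüd", "Excel23, MP1440, VentureKit, Wikiwriter700, Yoodaba"),
    Row("Goldman Sachs", 28, 27, "Cyberfan195, CLCStudent, Glaewnis, Kkm010, LivinRealGüd, Russavia", "AlexLevyOne, JayJasper, MP1440, VentureKit, Wikiwriter700, Yoodaba"),
    Row("Industrial and Commercial Bank of China", 3, 10, "Cyberfan195, Kkm010, Russavia", "Yoodaba"),
    Row("Mitsubishi UFJ Financial Group", 1, 2, "Cyberfan195, Kkm010", ""),
    Row("Agricultural Bank of China", 1, 11, "Cyberfan195, Kkm010, Russavia", ""),
    Row("BNY Mellon", 5, 3, "Cyberfan195, Kkm010", "JayJasper"),
    Row("China Construction Bank", 0, 6, "Cyberfan195, Kkm010", "JayJasper"),
    Row("Credit Suisse", 6, 5, "Cyberfan195, Kkm010, LivinRealGüd", "MP1440"),
    Row("Groupe BPCE", 0, 1, "Bitholov", ""),
    Row("Crédit Agricole", 0, 2, "Cyberfan195, Kkm010", ""),
    Row("ING", 4, 22, "Anandmoorti, Cyberfan195, Kkm010", "Rock5410, VentureKit"),
    Row("Mizuho Financial Group", 2, 4, "Cyberfan195, Kkm010", ""),
    Row("Morgan Stanley", 8, 12, "CLCStudent, Cyberfan195, Kkm010, LivinRealGüd", "MP1440, VentureKit, Wikiwriter700, Yoodaba"),
    Row("Royal Bank of Canada", 6, 9, "Cyberfan195, Eostrix, Torontopedia", "GoldDragon, Yoodaba"),
    Row("Banco Santander", 7, 10, "CLCStudent, Cyberfan195, Kkm010", "VentureKit"),
    Row("Société Générale", 6, 8, "Cyberfan195, Glaewnis, Kkm010", "MP1440"),
    Row("Standard Chartered", 5, 5, "Cyberfan195, Kkm010", "Rock5410, VentureKit"),
    Row("State Street", 3, 2, "Cyberfan195, LivinRealGüd, Pig de Wig", "VentureKit, Yoodaba"),
    Row("Sumitomo Mitsui", 3, 1, "Cyberfan195", ""),
    Row("Toronto-Dominion Bank", 8, 4, "Cyberfan195", "Excel23, MP1440"),
    Row("UBS", 9, 10, "Cyberfan195, Kkm010, LivinRealGüd", "JayJasper, John254"),
    Row("UniCredit", 0, 3, "Cyberfan195", ""),
    Row("Wells Fargo", 11, 26, "CLCStudent, Cyberfan195, Kkm010, LivinRealGüd, Pig de Wig", "Excel23, JayJasper, MP1440, Rock5410, Yoodaba"),
]


def names(cell: str) -> list[str]:
    """Split a comma-separated cell into names, ignoring empty cells."""
    return [n.strip() for n in cell.split(",") if n.strip()]


# Banks with fewer than five, or more than twenty, column-5 editors.
few = [r.bank for r in ROWS if r.col5 < 5]
many = [r.bank for r in ROWS if r.col5 > 20]

# Column 5 plus column 6 gives the blocked socks per article.
totals = {r.bank: r.col5 + r.col6 for r in ROWS}
most = max(totals, key=totals.get)
least = min(totals, key=totals.get)

# How many of the thirty articles each selected sock or sock farm appears on.
sock_counts = Counter(n for r in ROWS for n in names(r.socks))
farm_counts = Counter(n for r in ROWS for n in names(r.farms))

print(len(few), len(many))                        # 12 3
print(most, totals[most], least, totals[least])   # Goldman Sachs 55 Groupe BPCE 1
print(sock_counts["Kkm010"], farm_counts["Yoodaba"])  # 23 11
```

This only checks that the summary figures agree with the table; like the table itself, it says nothing definitive about whether anyone was paid.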

None of this evidence can be taken as final proof of any rules being broken, but there certainly is some interesting evidence.