In computing and telecommunications, a character is a unit of information that roughly corresponds to a grapheme, grapheme-like unit, or symbol, such as in an alphabet or syllabary in the written form of a natural language.[1]

Examples of characters include letters, numerical digits, common punctuation marks (such as "." or "-"), and whitespace. The concept also includes control characters, which do not correspond to visible symbols but rather to instructions to format or process the text. Examples of control characters include carriage return and tab as well as other instructions to printers or other devices that display or otherwise process text.
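As a rough illustration of the difference between visible symbols and control characters, the Python sketch below labels each character of a string using the standard `unicodedata` module. It assumes that Unicode's general category `Cc` is a reasonable stand-in for "control character". Other instruction-like characters, such as format characters in category `Cf`, are deliberately not counted. The function name `classify` is hypothetical.

```python
import unicodedata


def classify(text: str) -> list[tuple[str, str, str]]:
    """Label each character in `text` with its code point and whether it is a control character."""
    result = []
    for ch in text:
        # Unicode general category "Cc" covers the C0/C1 control characters
        # (e.g. tab U+0009, line feed U+000A, carriage return U+000D).
        kind = "control" if unicodedata.category(ch) == "Cc" else "non-control"
        result.append((ch, f"U+{ord(ch):04X}", kind))
    return result


# A letter, two punctuation marks, a space, then tab and carriage return.
for ch, code_point, kind in classify("A.- \t\r"):
    print(repr(ch), code_point, kind)
```

Running it reports tab (U+0009) and carriage return (U+000D) as control characters, while the letter, the punctuation marks and the ordinary space (category `Zs`) are reported as non-control.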

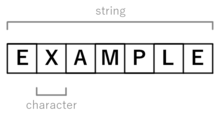

Characters are typically combined into strings.

Historically, the term *character* was used to denote a specific number of contiguous bits. While a character is most commonly assumed to refer to 8 bits (one byte) today, other sizes such as 6-bit character codes were once popular,[2][3] and the 5-bit Baudot code was also used in the past. The term has even been applied to groups of 4 bits,[4] which allow only 16 possible values. Modern systems use a variable-length sequence of these fixed-size pieces: for instance, UTF-8 uses a varying number of 8-bit code units to encode a "code point", and Unicode uses a varying number of code points to form a "character".
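The arithmetic and the layering described above can be sketched in Python. A fixed width of *n* bits gives `2 ** n` distinct codes (so 4 bits give 16 values). The second part shows UTF-8 using one to four 8-bit code units per code point, and a single user-perceived character (an "e" with an acute accent) written as two code points. The helper names `distinct_values` and `utf8_units` are hypothetical. Python's `len` counts code points rather than user-perceived characters, and the standard library has no grapheme-cluster segmentation, so the combined character is built by hand here.

```python
import unicodedata


def distinct_values(bits: int) -> int:
    """Number of distinct codes a fixed-width character of `bits` bits can represent."""
    return 2 ** bits


def utf8_units(code_point: str) -> int:
    """Number of 8-bit UTF-8 code units needed to encode a single code point."""
    return len(code_point.encode("utf-8"))


# Character widths mentioned in the text: 4-bit, 5-bit (Baudot), 6-bit and 8-bit.
for bits in (4, 5, 6, 8):
    print(f"{bits}-bit character: {distinct_values(bits)} possible values")

# UTF-8: one code point takes between one and four 8-bit code units.
# Samples: A, e with acute (U+00E9), euro sign (U+20AC), grinning face (U+1F600).
for cp in "A\u00e9\u20ac\U0001F600":
    print(f"U+{ord(cp):04X} -> {utf8_units(cp)} code unit(s)")

# Unicode: one user-perceived character can span several code points.
# Here the accented e is written as U+0065 LATIN SMALL LETTER E
# followed by U+0301 COMBINING ACUTE ACCENT.
decomposed = "e\u0301"
for cp in decomposed:
    print(f"U+{ord(cp):04X} {unicodedata.name(cp)}")
print("code points:", len(decomposed))
print("UTF-8 code units:", len(decomposed.encode("utf-8")))

# NFC normalization composes the pair into the single code point U+00E9.
print("code points after NFC:", len(unicodedata.normalize("NFC", decomposed)))
```

The first loop prints 16, 32, 64 and 256 possible values; the sample code points need 1, 2, 3 and 4 UTF-8 code units respectively. The decomposed accented "e" is 2 code points and 3 UTF-8 code units, but only 1 code point after NFC normalization, which illustrates that the same visible character need not have a fixed size even in code points.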

1. MW_Definition
2. Dreyfus_1958_Gamma60
3. Buchholz_1962
4. Intel_1973_MCS-4