Eliezer Yudkowsky | |

|---|---|

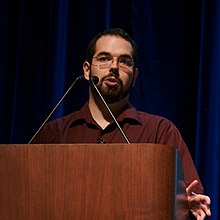

Yudkowsky at Stanford University in 2006 | |

| Born | Eliezer Shlomo[a] Yudkowsky September 11, 1979 |

| Organization | Machine Intelligence Research Institute |

| Known for | Coining the term friendly artificial intelligence Research on AI safety Rationality writing Founder of LessWrong |

| Website | www |

Eliezer S. Yudkowsky (/ˌɛliˈɛzər jʌdˈkaʊski/ EL-ee-EZ-ər yud-KOW-skee;[1] born September 11, 1979) is an American artificial intelligence researcher[2][3][4][5] and writer on decision theory and ethics, best known for popularizing ideas related to friendly artificial intelligence.[6][7] He is the founder of and a research fellow at the Machine Intelligence Research Institute (MIRI), a private research nonprofit based in Berkeley, California.[8] His work on the prospect of a runaway intelligence explosion influenced philosopher Nick Bostrom's 2014 book Superintelligence: Paths, Dangers, Strategies.[9]

- ^ "Eliezer Yudkowsky on “Three Major Singularity Schools”" on YouTube. February 16, 2012. Timestamp 1:18.

- ^ Silver, Nate (April 10, 2023). "How Concerned Are Americans About The Pitfalls Of AI?". FiveThirtyEight. Archived from the original on April 17, 2023. Retrieved April 17, 2023.

- ^ Ocampo, Rodolfo (April 4, 2023). "I used to work at Google and now I'm an AI researcher. Here's why slowing down AI development is wise". The Conversation. Archived from the original on April 11, 2023. Retrieved June 19, 2023.

- ^ Gault, Matthew (March 31, 2023). "AI Theorist Says Nuclear War Preferable to Developing Advanced AI". Vice. Archived from the original on May 15, 2023. Retrieved June 19, 2023.

- ^ Cite error: The named reference ":1" was invoked but never defined.

- ^ Russell, Stuart; Norvig, Peter (2009). Artificial Intelligence: A Modern Approach. Prentice Hall. ISBN 978-0-13-604259-4.

- ^ Leighton, Jonathan (2011). The Battle for Compassion: Ethics in an Apathetic Universe. Algora. ISBN 978-0-87586-870-7.

- ^ Kurzweil, Ray (2005). The Singularity Is Near. New York City: Viking Penguin. ISBN 978-0-670-03384-3.

- ^ Ford, Paul (February 11, 2015). "Our Fear of Artificial Intelligence". MIT Technology Review. Archived from the original on March 30, 2019. Retrieved April 9, 2019.