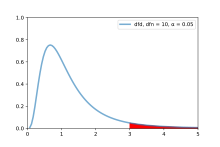

An F-test is any statistical test used to compare the variances of two samples or the ratio of variances between multiple samples. The test statistic, a random variable F, follows an F-distribution when the null hypothesis is true and the customary assumptions about the error term (ε) hold.[1] The test is most often used to compare statistical models that have been fitted to a data set, in order to identify the model that best fits the population from which the data were sampled. Exact "F-tests" mainly arise when the models have been fitted to the data using least squares. The name was coined by George W. Snedecor in honour of Ronald Fisher, who initially developed the statistic as the variance ratio in the 1920s.[2]
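As an illustration of the model-comparison use described above, the following minimal sketch computes an F statistic for two nested least-squares fits: an intercept-only model and a straight line. The function name `nested_f_statistic` and the sample data are hypothetical and chosen only for illustration, and the sketch assumes the standard nested-model form of the statistic, in which the drop in residual sum of squares per added parameter is divided by the residual variance of the larger model.

```python
from statistics import mean


def nested_f_statistic(x, y):
    """F statistic comparing an intercept-only fit with a straight-line fit."""
    n = len(y)
    x_bar, y_bar = mean(x), mean(y)

    # Restricted model (1 parameter): y = b0. The least-squares fit is the mean.
    rss_restricted = sum((yi - y_bar) ** 2 for yi in y)

    # Full model (2 parameters): y = b0 + b1 * x, via closed-form least squares.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    rss_full = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))

    # Degrees of freedom: parameters added, and residual df of the full model.
    df_num = 2 - 1
    df_den = n - 2

    f_stat = ((rss_restricted - rss_full) / df_num) / (rss_full / df_den)
    return f_stat, df_num, df_den


# Illustrative data only (not taken from any real study).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [1.2, 1.9, 3.2, 3.8, 5.1, 6.1]

f_stat, df_num, df_den = nested_f_statistic(x, y)
print(f"F = {f_stat:.2f} on ({df_num}, {df_den}) degrees of freedom")
```

A large value of F suggests that the added slope term reduces the residual variance by more than chance alone would explain. Turning the statistic into a p-value requires the upper tail of the F-distribution with the same two degrees of freedom, which statistical libraries typically provide.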

- Berger, Paul D.; Maurer, Robert E.; Celli, Giovana B. (2018). Experimental Design. Cham: Springer International Publishing. p. 108. doi:10.1007/978-3-319-64583-4. ISBN 978-3-319-64582-7.

- Lomax, Richard G. (2007). Statistical Concepts: A Second Course. p. 10. ISBN 978-0-8058-5850-1.