An increase in active Wikipedia editors

According to one possibly over-simplistic measure, the core Wikimedia community, and in particular the core community on the English Wikipedia, has recently stopped declining and might even have started to grow again.

Editors saving more than 100 edits per month on the English Wikipedia:

| Month | 2014 | 2015 | Change |
|---|---|---|---|
| January | 3,232 | 3,312 | 80 |
| February | 2,957 | 3,051 | 94 |
| March | 3,131 | 3,309 | 178 |
| April | 2,979 | 3,156 | 177 |
| May | 3,051 | 3,223 | 172 |
| June | 2,981 | 3,245 | 264 |
| July | 3,024 | 3,399 | 375 |

Editors saving more than 100 edits per month across all versions of Wikipedia:

| Month | 2014 | 2015 | Change |
|---|---|---|---|
| January | 10,331 | 10,625 | 294 |
| February | 9,508 | 9,779 | 287 |
| March | 9,936 | 10,446 | 510 |
| April | 9,533 | 9,986 | 453 |
| May | 9,689 | 10,075 | 386 |
| June | 9,276 | 9,891 | 615 |
| July | 9,420 | 10,280 | 860 |

For some years now the English Wikipedia and the Wikimedia movement generally have been losing active editors faster than they have been recruiting them.

But one interesting indicator has now started to climb, suggesting that the core community may actually be growing again. A range of other indicators, including the appointment of new admins on the English Wikipedia, the number of new accounts created, and the number of editors making more than five edits per month, are still flat or in decline.

The number of editors saving more than 100 edits each month is a long-standing metric published about Wikipedia and other WMF projects. For seven consecutive months, from January to July 2015, that indicator has been higher than in the same month of 2014, both on the English Wikipedia and across the Wikimedia projects as a whole, though the situation is more complex on some other sites, such as the German Wikipedia.

We know there are seasonal events that affect the community, and months themselves vary in length: February 2015 was shorter than January or March. Comparing each month with the same month a year earlier controls for both effects. More editors made more than 100 edits in February 2015 than in February 2014; similarly, January 2015 had more such editors than January 2014, and the trend has now run for seven months. Last month, 12,349 editors made more than 100 edits across all projects, 10,280 editors across all versions of Wikipedia, and 3,399 editors on the English Wikipedia, as opposed to 11,257, 9,420, and 3,024 editors respectively in July 2014.
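The same-month comparison can be recomputed directly from the two tables above. What follows is a minimal Python sketch, not the method used by the Foundation's analysts. The names `EN_WIKIPEDIA`, `ALL_WIKIPEDIAS`, `year_over_year`, and `all_months_up` are hypothetical. Reading the first table as the English Wikipedia and the second as all Wikipedias is an inference from the July figures quoted in the text. The counts are copied from the tables as printed.

```python
# Editors saving more than 100 edits per month, copied from the tables.
# Each entry maps a month to (2014 count, 2015 count).
EN_WIKIPEDIA = {
    "January": (3232, 3312),
    "February": (2957, 3051),
    "March": (3131, 3309),
    "April": (2979, 3156),
    "May": (3051, 3223),
    "June": (2981, 3245),
    "July": (3024, 3399),
}
ALL_WIKIPEDIAS = {
    "January": (10331, 10625),
    "February": (9508, 9779),
    "March": (9936, 10446),
    "April": (9533, 9986),
    "May": (9689, 10075),
    "June": (9276, 9891),
    "July": (9420, 10280),
}


def year_over_year(counts):
    """Return {month: (absolute change, percent change)} from 2014 to 2015."""
    changes = {}
    for month, (y2014, y2015) in counts.items():
        diff = y2015 - y2014
        changes[month] = (diff, round(100 * diff / y2014, 1))
    return changes


def all_months_up(counts):
    """True if every listed month of 2015 beat the same month of 2014."""
    return all(y2015 > y2014 for y2014, y2015 in counts.values())


if __name__ == "__main__":
    for label, counts in (("English Wikipedia", EN_WIKIPEDIA),
                          ("All Wikipedias", ALL_WIKIPEDIAS)):
        print(label, "- every month up:", all_months_up(counts))
        for month, (diff, pct) in year_over_year(counts).items():
            print(f"  {month:<9} {diff:+5d}  ({pct:+.1f}%)")
```

On the printed figures, every month shows an increase in both tables. For February across all Wikipedias the subtraction gives 271 rather than the 287 listed in the change column, so one of the three printed values in that row may have been mistyped; the sketch cannot tell which.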

The matter has been discussed on the research mailing list, Wiki-research-l, during the past two weeks.

As with any data over time, there is always the risk that this could just be anomalous, but Wikimedia Foundation data analyst Erik Zachte has now said of the phenomenon: "The growth seems real to me." Zachte has also pointed to the late 2014 speed-up of editing on the Wikimedia sites as a potential contributor to the increase. Implementing HHVM speeded up the saving of edits, which should logically have more impact on wiki gnomes doing lots of small edits than on editors who make just a few saves per hour.

Another theory suggested on the research list and elsewhere has attributed the increase to the improvements to Visual Editor, though with barely ten percent of the most active editors on English Wikipedia using it, it is unlikely to be a major or sole reason for the apparent increase.

The different leadership style of new Foundation executive director Lila Tretikov may be bearing fruit, in terms of better relations between the Foundation and the most active editors.

There is also some concern that the number of editors saving over 100 edits per month is a simplistic metric. For example, it includes users of highly automated tools such as AutoWikiBrowser, STiki, or Huggle, who may reach that edit count in less than an hour per month, but omits an editor who spends an evening every week writing or rewriting one or two articles while saving an edit only every half hour.

Should the trend continue, and assuming that someone doesn't find a software bug that has caused the anomaly, future lines of analysis could include examining how much of the increase is due to fewer editors leaving, more inactive editors returning, more new editors joining, and a greater number of casual editors increasing their editing frequency to more than 100 edits per month.

August figures are expected in about a month. It will be very interesting to see whether the trend continues.

Russia temporarily blocks Wikipedia

Russian government blocks Wikipedia ... for a few hours

The Russian Wikipedia has been the target of official government ire and censorship previously. The latest incident originated in the small village of Chyorny Yar, where on June 26 a prosecutor obtained a court order demanding the deletion of that Wikipedia's article on charas, which the English Wikipedia defines as "a hashish form of cannabis ... made from the resin of the cannabis plant." In Russia, telecommunications and official censorship are overseen by Roskomnadzor, whose duties include censoring pages regarding the use and production of illegal drugs. Roskomnadzor determined that the page on charas should be removed by August 21 or the Russian Wikipedia would be blocked in that country. According to Sputnik, a government-owned news service, a Roskomnadzor official told the newspaper Izvestia:

> Roskomnadzor understands the importance of Wikipedia for society. But it goes like this: today it 'academically' writes about drugs, tomorrow 'academically' about forms of suicide, and the day after tomorrow publishes any kind of banned content, but with 'academic' sources.

Both sides complained about a lack of communication. Executive Director of Wikimedia Russia, Stanislav Kozlovskiy, told the Washington Post that in the past there was "dialogue" with government regulators concerning problems, but not in this case: "We tried to call them but were told that the press officer is on vacation and no one else is authorized to talk to us. They preferred to communicate via statements on the Internet instead." According to Sputnik, Roskomnadzor head Vadim Ampelonskiy told Izvestia they had also attempted contact. "We were unpleasantly surprised when ... Kozlovskiy, instead of implementing the law, began a large-scale media campaign." The media campaign resulted in a large spike in traffic to the charas article (see figure right).

The entire encyclopedia would have to be blocked because of the recent implementation by the Wikimedia Foundation of the HTTPS protocol on all Wikimedia projects (see previous Signpost coverage). Kozlovskiy told the Post that Russian internet providers do not have the "expensive equipment" needed to block individual pages on sites using HTTPS. Parker Higgins of the Electronic Frontier Foundation told The Verge that "One of the arguments that advocates have made in favor of HTTPS is that it changes the calculus around censoring individual pages." He said that HTTPS requires that governments engaging in censorship make an "all or nothing" decision about whether to block an entire site, or to not engage in censorship at all.

The Verge quoted two Russian journalists about the possible reasons behind the block. Nikolay Kononov, editor-in-chief of SecretMag.ru, said "I think they're trying to show they can ban whatever they want, whenever they want. It's a show of intimidation, like two boxers circling each other in a ring." Investigative journalist Andrei Soldatov suggested that it may be part of an attempt to force the encyclopedia to abandon HTTPS, which he noted is impenetrable by SORM, the Russian internet surveillance system. If so, Wikimedians are unbowed. Kozlovskiy told the Post that “We are not going to stop using the https protocol to make it easier for Roskomnadzor to censor Wikipedia.”

Global Voices Online reported that Russian Wikipedians debated how to respond, with suggestions ranging from "complete compliance ... to complete defiance". The article on charas was not deleted, but it was moved to charas (drug substance) and the original article title became a disambiguation page which included links to a number of other articles, including an Asian river and a grape. Because the court order named a specific URL, Global Voices Online speculated that Russian Wikipedia editors might have "outsmart[ed]" Roskomnadzor.

The Russian Wikipedia was blocked on August 24, but the block was in place only very briefly, and some internet providers had not yet instituted it. Sputnik reported that Ampelonskiy told Izvestia that "Wikipedia was saved by FSKN," the Federal Drug Control Service of Russia. He said that FSKN certified that the article was no longer in violation of the law. He said:

> We highly value the efforts the Wikipedia community made on Saturday and Sunday to change the text. The first version of the 'Charas' article did not even have one corroborative source, so it was not even in accordance with the rules of Wikipedia itself ... The text was completely reworked by the editors, and really became academic and based on science.

While this threat may be over, at least temporarily, Sputnik ominously reports that "Roskomnadzor is waiting for Wikipedia to change the content of three articles; on 'self-immolation,' 'suicide,' and 'ways of committing suicide,' which were declared against the law by Rospotrebnadzor, the federal watchdog for consumer protection." G

In brief

- Shapps emails deleted: The Register (August 19) and The Independent (August 23) report that Wikimedia UK has told politician Grant Shapps that certain internal emails pertaining to him that Shapps had requested sight of under the Data Protection Act had been "deleted in the normal course of business, before the date of your Subject Access Request". Shapps opined to The Independent that the deletion of the emails was "highly suspect". Wikimedia UK did supply some 80 pages of other material to Shapps, some of it heavily censored. Among the uncensored paragraphs is a comment from WMUK staff that "We should be glad that Shapps has a pretty safe seat, because if he lost his seat, we would be open to the accusation that the charity had acted in a partisan manner during an election period." Shapps retained his seat, but lost the party chairmanship in a move widely interpreted as a demotion. (See last month's Signpost coverage.) AK

Wikimania—can volunteers organize conferences?

Wikimania is the annual international conference for Wikimedia contributors. About a thousand people convene for the three-day main conference, in which five conference tracks are ongoing for eight hours. Conference tracks cover such topics as presenting individuals’ projects, reviewing community organizing plans, promoting access to information sources, developing tutorial infrastructure, legal issues, software demonstrations, regional outreach, metrics reporting, and reviewing research. Before the main conference there is a two-day preconference, termed a hackathon, in which people meet in small groups for meetings, workshops, training, and more personal discussion. I went to the conference in DC in 2012, Hong Kong in 2013, London in 2014, and Mexico City in 2015.

Unfortunate change of venue

The Mexico City conference was supposed to be held at the Vasconcelos Library but instead was held at a Hilton Hotel. Wikipedians love libraries, and in the election process which chose Mexico as the host city, a major factor persuading the community was the organizing team’s enthusiasm for the library. Two months before the conference, the venue was changed. I'd not noticed the announcement of that change, and was surprised to learn of it quite close to the event. Reasons cited for the change were the inability to secure hotel accommodation close enough for attendees, and uncertainty about the library's Wi-Fi capacity.

These things may be so, and perhaps the library was always an inappropriate choice of venue; but I regret that so many volunteers did so much work for about a year planning an event at this library, only for the venue to change suddenly. How much volunteer work was expended on the original plan? Why was that venue not identified as inappropriate sooner? Considering that volunteers are supposed to organize things like venue location, was volunteer labor in some way insufficient to accomplish the task, and could the paid staff who carried out the emergency move have made a diligent original assessment and saved volunteer time?

Gradually changing circumstances

The mythology around the Wikimedia movement is that volunteers do everything. In reality, paid staff do a lot and serve in the most essential roles. The mythology partly developed because from 2001 to 2008, the Foundation and the community had almost no money, and no external organizations were funding Wikimedia contributors. Since about 2008 the situation has changed a lot, but there are few evaluations of the changes, and still fewer publications about the changes. From the WMF's perspective, their funding has gone from nothing in 2001 to more than US$65 million this year. I mention this in my “Value of a Wikipedian” post.

Another change is that more organizations are willing to hire their own Wikipedians. I was the first person hired to do Wikipedia work full-time indefinitely. It was a crazy concept at the time, and many would still say that it's a strange idea; but nowadays a lot of organizations are doing it. Since moving to New York I've come to realize that a lot of editing in television- and movie-related articles is done by paid editors, and this is especially taboo. Still, on Wikipedia there is a lot of demand for good information on popular television shows, and people seem to appreciate Wikipedia’s coverage of this. For many shows there are enough fans to appreciate reading the content on Wikipedia if paid staff put it there. In a lot of ways, paid contributions are creeping into Wikipedia without there being any history of community discussion to address the implications.

What roles are appropriate for volunteers?

I say this to give some context to what in any other nonprofit movement wouldn't be an issue. Wikimania is imagined to be a community-run event, but leaving a conference entirely to volunteers is too burdensome for the volunteers and too risky for the community movement. There is a community memory that in 2010 in Poland, the volunteers managing the Wikimania conference became overwhelmed. As the story goes, the Wikimedia Foundation stepped in and had staff take over some essential roles during the conference and hired local event coordinators to make it go well. In 2011 the conference in Israel went well because the Israeli chapter is known for good business sense, having an office with good fundraising and management practices, and otherwise being a volunteer organization with effective staff support. In 2012 the Wikimania coordinators in DC paid US$30,000 to hire an event consultant, and the WMF granted that because event consultant is a role that was available for hire in the US, and because they actually managed finance, legal contracts, and event coordination while giving volunteers final sign-off on everything without having a cozy relationship with them.

In 2013 the volunteers in Hong Kong came in for a lot of criticism for not reporting the finances of the conference; see for example the Signpost report “Hong Kong’s Wikimania 2013—failure to produce financial statement raises questions of probity”. I know that Hong Kong didn't hire an event planner in the way that one was hired for DC, and in my opinion if they had, and if their event planner had managed their accounting, then there would have been no community objection to their reporting of the event. Based on my incomplete information, had the Hong Kong team not depended on volunteers to do accounting (which can be tedious and time-consuming for a volunteer to undertake for such a large event) and instead asked for funding for a consultant to produce the report and accounting, they would have secured the money and high praise for their management of the event.

In other respects, I think Hong Kong was the best-managed Wikimania I've attended. They had volunteers everywhere, greeting everyone at so many parts of the process, and the volunteers collectively seemed to me like a trained army on the edge of all activity, continually directing me into the experience they had designed and kept on a tight schedule. The London conference was great, but then the London Wikimedia chapter is the second-best funded after Germany and has about 10 staff. They also held the conference in an expensive venue that required its own staff to be funded to coordinate the event. That contrasts with, for example, the DC and Hong Kong events in universities, which depended heavily on volunteers to complement the few staff services, and with the complete Hilton services in Mexico.

In 2014 I helped organize WikiConference USA in New York with other volunteers. Organizing conference programming was a fun activity for volunteers; doing event management was tedious. We volunteers liked advertising the event in some channels, reviewing program submissions, soliciting and reviewing scholarship applications, and recruiting volunteers to be on hand for the day of the event. Some of the duties we didn't enjoy, and which we would have preferred to turn over to paid staff, included negotiating the event with the venue and caterers; managing the written agreements about finance and safety; coordinating a travel team to dispense money for scholarship recipients; the accounting; the metrics part of the grant-reporting to the Foundation; comprehensive communication in the manner of communications professionals as opposed to the style of grassroots volunteers; and responding to harassment (a stalker during that event managed to spoil the mood of the attendees). We managed the conference for about US$30,000 because the venue was a school, which donated what elsewhere would have cost some $60,000. About $10,000 of the $30,000 was for food and incidentals, and the other $20,000 was for travel scholarships. There were about 10 of us on the organizing team, and I suppose we each spent about 30 hours meeting in person to plan the event, plus maybe as much time alone doing things online. This was for a three-day conference for about 300–500 people. Wikimania is no doubt on the same or larger scale.

Is it worth having volunteers spend their time in this way? The money is less of an object these days. Volunteer time is scarce, and anyone who would consider volunteering to convene a Wikimedia conference is likely to also be a person whose time could be spent where expertise is scarce, like actually presenting Wikimedia culture instead of only creating a space for others to do this. Professional event coordinators are at least two to three times more efficient in organizing events than a volunteer team would be, and will anticipate bureaucratic reporting standards intuitively when volunteers might not anticipate the need at all.

Until now, Wikimania conferences have been held based on an Olympic-style bidding process in which groups of volunteers in different cities around the world bid for the right to host the conference. The outcome of the bid is that they get something like $300,000 to host the conference, with more money coming for special needs on request and constituting maybe $100,000 more. The restriction is that volunteers are discouraged from hiring paid staff to present the conference, and the event is expected to be as volunteer-run as possible. I wonder if the Foundation might consider the history of difficulties, and rethink the idea that volunteers should present conferences.

I think it would be more reasonable for the WMF to hire event staff to manage almost all parts of the event, if only to free the volunteers’ time for more personal engagement. A local Wikipedia team should coordinate some hospitality functions, like staffing the registration desk and having volunteers around to answer questions about the neighborhood, and should take part in selecting the keynote speakers, scheduling programming, and recruiting Wikipedians to participate. Historically an online volunteer committee has selected the program submissions to be featured and has selected scholarship recipients. I want those roles to continue, but event coordination ought to be handled by paid staff.

Multinational hotel accommodation

I worry about two side issues.

One is that the Hilton Hotel is an expensive American hotel with uncompromising business ethics. They charge about $300 a night for rooms, so for the ~100 scholarship recipients and some 100 WMF staff who attended the conference, this was about the rate paid for five nights. $300 × 200 people × five nights is $300,000, which is the typical conference scale and probably about the price including venue space, catering, and the negotiation of rate. It bothers me that this money went to an American company and not to a local business. It also bothers me that this rate is so far removed from the local economy. A recent economic report says 46% of people in Mexico made less than $157 per month, so one night in this hotel costs the equivalent of about two months' wages. In Mexico City, 76% of people make $157 or less. How did the local Wikipedia contributors feel about hosting a conference in a venue so far removed from local culture and norms? How would the international guests have felt to stay in a local hotel instead of an American one?
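The arithmetic in this paragraph can be checked with a few lines of Python. This is a rough sketch using only the round figures quoted above (about $300 a night, roughly 100 scholarship recipients plus 100 WMF staff, five nights, and the $157 monthly income threshold). The constant and function names are hypothetical, and the actual negotiated rates are not known from the text.

```python
# Round figures quoted in the paragraph above; all are approximations.
NIGHTLY_RATE_USD = 300
SCHOLARSHIP_RECIPIENTS = 100
WMF_STAFF = 100
NIGHTS = 5
MONTHLY_INCOME_THRESHOLD_USD = 157  # 46% of people in Mexico earn less


def hotel_bill(rate, guests, nights):
    """Total accommodation cost for a group at a flat nightly rate."""
    return rate * guests * nights


def months_of_income(rate, monthly_income):
    """How many months of income one hotel night represents."""
    return rate / monthly_income


if __name__ == "__main__":
    guests = SCHOLARSHIP_RECIPIENTS + WMF_STAFF
    total = hotel_bill(NIGHTLY_RATE_USD, guests, NIGHTS)
    months = months_of_income(NIGHTLY_RATE_USD, MONTHLY_INCOME_THRESHOLD_USD)
    print(f"Estimated accommodation bill: ${total:,}")
    print(f"One night is about {months:.1f} months of income at the threshold")
```

The second figure is a lower bound for anyone earning below the threshold: for them, one night represents more than that many months of income.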

Paid vs. volunteer presentations

The other issue is that almost all of the conference presentations were showcasing the work of paid staff, when many people think of the Wikimedia movement as a volunteer initiative. There were five days of conference. The first two were hackathon days, in which WMF staff controlled everything in the schedule. This was the first year that that had happened. There were lots of empty rooms reserved, and people could meet during the first two days, and scholarship recipients were present, but posting to the schedule was prohibited. In the other three days of the conference, I counted 150 talks. Among these, 48 were presentations by WMF staff. The Foundation didn't participate in Spanish-language talks, of which there were 26. So 39% of the English-language talks (48 of 124) were paid presentations by WMF staff. Another 50 of the English-language talks were by people who were paid to present by some organization other than the WMF (including chapter staff or paid Wikipedians like me), so that really just leaves (150 − 48 − 26 − 50 =) 26 English-language talks, or about 17% of all 150 talks, that were presented by volunteers in the three days available to the community.
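The breakdown of talks can be recomputed from the counts given above. The following Python sketch uses hypothetical names and follows the author's own bucketing, which treats the 26 Spanish-language talks as a separate group and assumes all WMF and other paid talks were in English. The volunteer share depends on the denominator chosen, so the sketch reports both.

```python
# Counts quoted in the paragraph above.
TOTAL_TALKS = 150
WMF_STAFF_TALKS = 48      # all English-language, per the text
SPANISH_TALKS = 26        # counted as their own bucket by the author
OTHER_PAID_TALKS = 50     # chapter staff, paid Wikipedians, and so on


def percent(part, whole):
    """Share of `whole` made up by `part`, as a percentage to one decimal."""
    return round(100 * part / whole, 1)


ENGLISH_TALKS = TOTAL_TALKS - SPANISH_TALKS
VOLUNTEER_ENGLISH_TALKS = ENGLISH_TALKS - WMF_STAFF_TALKS - OTHER_PAID_TALKS

if __name__ == "__main__":
    print("English-language talks:", ENGLISH_TALKS)
    print("WMF share of English talks (%):",
          percent(WMF_STAFF_TALKS, ENGLISH_TALKS))
    print("Volunteer English talks:", VOLUNTEER_ENGLISH_TALKS)
    print("Volunteer share of all talks (%):",
          percent(VOLUNTEER_ENGLISH_TALKS, TOTAL_TALKS))
    print("Volunteer share of English talks (%):",
          percent(VOLUNTEER_ENGLISH_TALKS, ENGLISH_TALKS))
```

Measured against all 150 talks, the volunteer English-language share is a little over 17%; measured against the 124 English-language talks alone, it is about 21%.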

I'm grateful to the volunteers who contributed to put this conference on; but I'd have preferred that the Wikimedia volunteer community fill most of the speaking slots—perhaps 66% of them. I want to emphasize volunteers, because the community and the Foundation put so much emphasis on volunteer contributions. I think there's a perception that the community speaks for itself, but somehow this year the community was mostly just the audience. At the very least, I'd like to see future Wikimanias advertise which talks are presented by volunteers, WMF staff, or others.

Lane Rasberry is Wikipedian-in-residence at Consumer Reports. This article originally appeared on the author's blog and is republished here with his permission.

Re-imagining grants

Changes are coming to grantmaking

Most fundraising in the Wikimedia movement is handled directly by the Wikimedia Foundation (Wikimedia Germany also raises significant funding, much of which is forwarded to the Foundation). Though declining readership numbers have brought concerns about the future, the Foundation's fundraising has continued its success: this financial year's $58.5 million target was reached just halfway through the year. Part of these funds, mostly garnered through annual fundraisers, pays for the operations of the servers and of the Foundation itself; and part returns to the movement through one of the Wikimedia Foundation's four grantmaking operations.

The grantmaking system in place today came about as a result of a broader discussion about movement roles that took place in 2012–13. There are four kinds of Wikimedia grants: Travel and Participation Grants, which fund individuals representing the movement at primarily non-Wikipedian events; Individual Engagement Grants, which fund individual or small-group research projects related to the Wikimedia movement; Project and Event Grants, for projects and events conducted by individuals and groups; and Annual Plan Grants, which provide annual-cycle funding to "eligible" affiliates such as the largest chapters.

Community grant-making is a complex and inherently political process. The Wikimedia community is a large and divisive place—one in which organic and systematic growth vie with each other. A variety of funding schemes have been tried, to target a variety of needs emerging at a variety of times and garnering a variety of results. Each process has its own adherents, its own community, and its own review body, resulting in a large number of complicated but important details difficult to penetrate for all but the most experienced onlookers.

So it is significant news that this week the Foundation's fundraising team put forward an IdeaLab proposal aiming for a complete refresh of the system as it exists today (the IdeaLab is the WMF's central fundraising incubator for providing community review ahead of grant submissions). The proposal lists three weaknesses in the current system:

> People with ideas don’t know how to get the support they need. It is difficult for people with ideas to know where to get money and support for their ideas. Once they get started, a clear path with support for growing successful programs or technology is often missing.
>
> Processes are too complicated and rigid. Each program has different processes for getting money and support, and there are both gaps and overlap between these programs. We need to make a lot of exceptions to ensure everyone gets what they need. Most requests that need an exception get pushed to Project and Event Grants where systems aren't designed to handle them.
>
> Committees are overwhelmed with current capacity. Committees reviewing the widest range of grants aren't able to give all requests a quality review. The most robust committee processes are time-intensive and won't be able to scale as the number of requests grow.

The proposal prescribes replacing the current fourfold system with three multi-tiered platforms. First, there would be project grants for both individuals and smaller organizations; these would consist of seed funds for experimental purposes and growth funds to sustain growing projects. Second, there would be event grants, which would fall into three subcategories: travel support for event attendance; micro funds for small community events and logistical support (the case study is ordering pizza and stickers for a local meetup); and large event support for large conferences, up to and including, it seems, the annual international Wikimania itself. Third, annual plan grants for affiliates would continue, but would now deal with two categories: a rigorous system for larger bids; and a simpler process for smaller bids (provisionally capped at US$100,000 and one FTE staff member employed under the grant).

How can the community participate in the dialogue? A significant reworking of fundraising is an immensely complicated process to engage in, so much so that the IdeaLab proposal comes with not only its own calendar but an entire page on how to direct feedback. An FAQ has been provided, which attempts to answer common questions. The consultation is scheduled to last until 7 September, with the changes discussed expected to start coming into effect from 31 October, when the APG process split would be piloted, and to continue through 2016. For further discussion see the talk page. For more information on how grants are managed and disbursed, start here.

For more Signpost coverage on grantmaking see our grantmaking series.

Brief notes

- Coding Da Vinci: As a result of the annual Coding Da Vinci data hackathon, held this year at the Jewish Museum in Berlin, an additional 600,000 files are now available on Commons. The effort's team published a Wikimedia Blog post summarizing their efforts and outcomes.

- Ranking Featured articles: A new stats dump went up this week containing English Wikipedia featured articles ranked by page-views. A similar ranking is also available for good articles.

- Content translation at Wikimania: The language engineering team at the WMF posted a blog post on the outcomes of their language engineering feedback at Wikimania.

- Conflicting banners: There was an interesting discussion this week concerning scheduling conflicts between banners. A CentralNotice banner is the most effective tool available to both the Wikimedia Foundation's fundraising team and various sufficiently large community efforts seeking to spread information or a message, and this week a conflict arose on the Italian Wikipedia between a fundraising banner and one created for Wiki Loves Monuments. See the resulting discussion for details.

- Wiki-Edu: Wiki-Edu published their July Report this week.

Out to stud, please call later

Featured articles

Four featured articles were promoted this week.

- American Pharoah (nominated by Montanabw, Vesuvius Dogg, Tigerboy1966, and Froggerlaura) A bay colt with a faint star on his forehead, American Pharoah is so named because his breeder and owner, Ahmed Zayat, is of Egyptian–American background. The misspelling of the name was allegedly the result of holding a competition on social media; the winning name was copied and pasted into an email sent to The Jockey Club, although the submitter claimed she knew how to spell "Pharaoh" and someone else must have transposed the vowels. American Pharoah won the three big US races in May and June 2015 at the age of three and a bit, and he was awarded the American Triple Crown, which hadn't been won since 1978. He retires at the end of this year, and aims to spend his retirement eating grass and doing elementary math. His owner has other ideas… stud life!

- Fremantle Prison (nominated by Evad37) Fremantle Prison in the port of Fremantle, Western Australia, was built between 1851 and 1859 using convict labour. Originally housing prisoners transported from the British Isles, it was handed over to the colonial administration in 1886 to incarcerate locally sentenced men and women. Since its closure in 1991, three years after a riot caused substantial damage, the prison has been developed as a tourist attraction. It is a complex of cell blocks, a gatehouse, perimeter walls, cottages, and tunnels; the tunnels were sunk into the limestone rock on which the prison was built, and were used to supply water to the town from an aquifer. Convicts were required to pump the water by hand into a reservoir; known as "cranking", this task was used as a punishment for recalcitrants. Prisoners were often ordered to be flogged with a cat-o-nine-tails, but this punishment was unpopular with the prison staff: despite promises of extra pay, about a third of floggings weren't carried out. The last was in 1943, and the last hanging in 1964. The make-up of the prison population gradually changed. At the time of the gold rushes in the 1890s, the majority were white short-term prisoners; by the 1980s the numbers of those sentenced for violent crimes had increased, and about a third of inmates were Aborigines.

- Last Gasp (Inside No. 9) (nominated by J Milburn) "Last Gasp" is an episode of the comedy series Inside No. 9. A seriously ill young girl receives a visit from a singer, as arranged by a charity. The singer appears to die whilst blowing up a balloon. The parents think that the singer's "last gasp" imprisoned in the balloon might have some monetary value. However, the singer isn't dead, so his assistant suffocates him with a pillow. Meanwhile, the girl attaches the balloon to another helium-filled balloon and releases the two from her bedroom window. Eugene Wat de Omgang, TV critic of South African newspaper The Star, described the episode as "hilarious ... I caught myself gasping more than once as its foul contents unfolded".

- Serpens (nominated by StringTheory11) The constellation Serpens is associated in star atlases with the constellation Ophiuchus; the latter represents the god of medicine, Asclepius, who showed kindness to a snake. It licked his ears clean and taught him some secret knowledge. Serpens is divided in two parts by Ophiuchus; the snake winds itself through the legs of Asclepius. It contains all kinds of stuff, including the beautiful Hoag's Object, a classic ring galaxy with a high degree of symmetry.

Featured lists

- Black and white

- Albert Reiss in front of the Metropolitan Opera House stage entrance

- Pretty Nose

- Henry M. Mathews - Brady-Handy

Four featured lists were promoted this week.

- List of Attorneys General of West Virginia (nominated by West Virginian) The attorney general of West Virginia is a citizen of that state, aged 25 or over, who is elected or appointed to the position as an "executive department-level state constitutional officer". They have to live in Charleston, do all kinds of legal stuff on behalf of the state, and sit on 13 different committees. A prerequisite for the position is that they have to know what "preneed burial statutes" are. For all this work, they're paid $95,000 a year (2012 rates). Since the post was created in 1863, there have been 34 incumbents.

- List of Local Nature Reserves in Hertfordshire (nominated by Dudley Miles) A Local Nature Reserve (or local nature reserve for those of you who like things lower-cased) is a small patch of the Great British countryside which is under the stewardship or protection of a local authority. The sites are regarded as having local scientific interest, and as places where one can "derive great pleasure from the peaceful contemplation of nature." Here's a list of 42 LNRs in Hertfordshire, a county on the north border of Greater London. "Herts: you don't know where it is, but you know you want to be there" © Hertfordshire Tourist Board 1993.

- List of awards and nominations received by Leonardo DiCaprio (nominated by FrB.TG) Leonardo DiCaprio is an American actor, who started his career by appearing in Santa Barbara (1990), a TV soap opera described by one critic as being "filmed inside a wardrobe". DiCaprio came out of the wardrobe to appear in films across a wide range of genres; for his work he's received "34 awards from 136 nominations", but no Oscars. In 2014 he received the Clinton Global Citizen Award, which somehow isn't in the list. In the same year Leonardo was also given a cast-iron "Oscar" statue and made an honorary member of the Chamber Theater in Chelyabinsk, Russia. They also offered him a non-speaking part as a servant in Oleg and Vladimir Presniakov's play Plennye Dukhi (Captive Spirits). Guess it's back to the wardrobe, Leo.

- List of songs recorded by Lana Del Rey (nominated by Littlecarmen) Lana Del Rey has covered the whole alphabet (with the exception of E, X and Z) in her catalog of songs. We look forward to her covers of "Eye of the Tiger", "Xanadu", and "Zombie". Described as a "torch singer of the internet era", Del Rey says she chose her musical identity because it "reminded [her] of the glamour of the seaside". The smell of rotting seaweed, shingle piercing your flipflops, seagulls pinching your fish'n'chips, rain, rain, rain ... down on the west coast.

Featured pictures

- Louis de France, Dauphin

- Madonna

Twenty-four featured pictures were promoted this week.

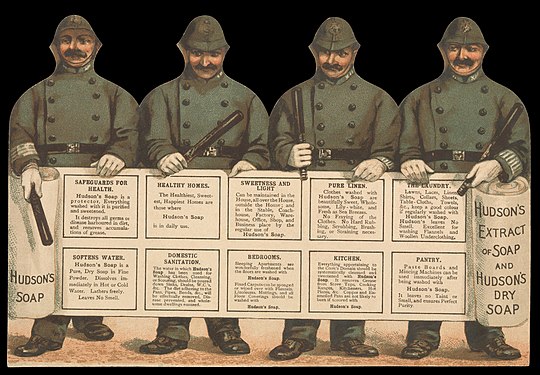

- Hudson's Soap advertisement reverse, obverse (created by Hudson's Soap, digitized by the Wellcome Trust, restored by Adam Cuerden, nominated by Crisco 1492) Robert Spear Hudson was an apothecary in West Bromwich near Brummagem in the late 1830s who hit upon the idea of grinding up bars of soap and selling the result as "soap powder". His nascent business was helped by the abolition of tax on soap in 1853, and a "rapidly increasing demand for domestic soap products" (the use of coal for industrial and domestic energy produced staggering amounts of pollution and filth). Hudson advertised his product widely with posters and shopcards designed by professional artists; in the standard of advertising and the level of promotion he was well ahead of his competitors.

- Albert Reiss in front of the Metropolitan Opera House stage entrance (created by Bain News Service; restored by Adam Cuerden; nominated by Alborzagros) Albert Reiss (1870–1940) was a German opera singer with a splendid career at the Metropolitan Opera, especially singing in Richard Wagner's operas. The photograph shows Albert Reiss in 1910 at the stage entrance of the Met.

- Gilbert Duprez as Gaston in Giuseppe Verdi's Jérusalem (created by Alexandre Lacauchie; restored and nominated by Adam Cuerden) Gilbert Duprez was a French tenor, who could manage a high C with a full voice from the chest (other tenors hit the high Cs in a falsettone register "from the head"). Here he is shown singing the role of Gaston, the viscount of Béarn, in Verdi's Jérusalem.

- Annika Beck (created by Diliff; nominated by Crisco 1492) Annika Beck is a German tennis player, currently ranked at number 53 in the singles ratings, having begun 2012 at number 234. She is right-handed, her favourite shot is forehand and her favourite surface is hard. Beck's favourite movie is Inception with Leonardo DiCaprio.

- Albert Aurier (created by uncredited photographer, restoration by Jebulon; nominated by Alborzagros) G. Albert Aurier (1865–1892) was a Symbolist poet, art critic and painter, who died of typhus. He had a significant collection of van Goghs and works by other contemporaries. Apparently some paintings of Aurier's were exhibited in 1960, but these seem to be unknown today, and he is now better known as an art critic.

- Black-tailed Godwit (created by User:Merops; nominated by Alborzagros) The black-tailed godwit is a rather large wading shorebird. Its range is from western and central Europe to central Asia and Asiatic Russia. With long legs and a long bill, the godwit lives and breeds around freshwater lakes, flooded areas, damp meadows and moorlands. Its call sounds like weeka weeka weeka. Have you noticed that birds never sneeze?

- Pretty Nose (created by Laton Alton Huffman; nominated by Brandmeister) Pretty Nose was an Arapaho or Cheyenne woman war chief who participated in the Battle of Little Big Horn in 1876. Her identification as Arapaho was made "on the basis of her red, black and white beaded cuffs" in the photo. Born circa 1850, Pretty Nose was still alive in 1951 when she saw her grandson return from the Korean War.

- Aviat Eagle II 2 (created by Julian Herzog; nominated by Crisco 1492) Aviat Eagle II is a small aerobatic sporting biplane aircraft. The Aviat Eagle II, produced in the United States from the late 1970s to the mid-1990s, is a kind of IKEA aircraft to make at home from assembly kits. It's not actually made by IKEA, else it would be called FANTADIG.

- Woman with a Parasol – Madame Monet and Her Son (created by Claude Monet; nominated by Pine) French artist Claude Monet painted this backlit and breezy portrait of Madame Monet and their son in a few hours one windy day. No doubt there's all kinds of entomological specimens and windborne crud stuck to the canvas.

- Danaus genutia (created by Vengolis; nominated by Crisco 1492) Danaus genutia is one of the commonest butterflies in India, known as the common tiger or striped tiger. The stripes signal that they are unpleasant to smell and taste, as black and yellow stripes generally do; they are a warning signal in the animal kingdom. They are soon released when caught by predators. The common tiger occurs in south east Asia, Australia and India.

- Madonna (Edvard Munch) and Madonna (Edvard Munch) (created by Edvard Munch; nominated by Crisco 1492) We've got the munchies this week, with two variations on the same theme by downbeat Norse painter Edvard Munch. He revisited the theme of a half-naked woman with eyes closed and hands behind her back several times between 1892 and 1895. There are various "art critic" interpretations of the meaning behind the image, and you can make up one yourself if you like; it'll be just as valid.

- Louis, Grand Dauphin (created by Hyacinthe Rigaud; nominated by Crisco 1492) Louis of France (1661–1711) was the eldest son and heir of Louis XIV, King of France. As with all the heirs to the French throne of the time, he was called the Dolphin or Dauphin, one of the things that are a bit exotic and weird about the French royal family. There are also grades in the Dauphin titles. He became known as Le Grand Dauphin after the birth of his own son, Le Petit Dauphin. But Louis never became king, because his father survived him. Probably it would have worked out better (see French Revolution for details) if they called their offspring Eagle or Lion, or maybe even Le Grande Fromage. After all, to paraphrase de Gaulle, who better to rule a country with 246 cheeses?

- Jean Metzinger, Danseuse au café (created by Jean Metzinger; nominated by Pine) Dancer in a café is an abstract Cubist painting from 1912 by Jean Metzinger (1883–1956). It was considered "barbaric" art when exhibited. The painting depicts a woman dancing in a café, wearing an elaborate gown, made in embroidered green silk velvet and a chiffon caped evening gown and she is holding a bouquet of flowers in her hand. In her right hand. The rest of the painting is subdivided into multiple facets and planes, presenting simultaneously parts of the café scene, the very idea behind the abstract painting, from the beginning...

- Rock ptarmigan on Mount Tsubakuro (created by Daisuke Tashiro; nominated by Bruce1ee) The rock ptarmigan is a cold-climate loving gamebird from the grouse family, that prefers high and barren habitats. They are the official bird of Toyama Prefecture in Japan and the official game bird for the province of Newfoundland and Labrador, Canada. Its feathers are brown in summer and white in winter. Rock ptarmigan meat is on the menu for festive meals in Icelandic cuisine, with dishes such as steiktar rjúpur, which is ptarmigan fried and then boiled in milk and water for an hour and a half. We're told that rock ptarmigan tastes like hare, and that hare tastes like Bambi's mother apparently.

- Micrometer (created by Lucasbosch; nominated by Armbrust) A very nice micrometer, measuring up to 25 millimetres. The object to be measured is placed between the two anvils, and the free anvil screwed finger-tight. Readings are taken from the linear scale, with the circular scale providing the fine measurement.

- Gold 20-stater of Eucratides (created by Yann; nominated by Yann) Eucratides the Great loved coins and loved himself depicted on coins. He was an important Greco-Bactrian king. He engaged in wars against the Indo-Greek kings, the Hellenistic rulers in northwestern India. Eucratides went so far as the Indus, until a twist of fate made him lose. He was defeated and returned to Bactria.

- Il suonatore di liuto (created by Orazio Gentileschi; nominated by Hafspajen) This painting by Italian artist Orazio Gentileschi shows a young woman playing a nineteen-stringed lute. Other instruments and the open songbook suggest that she may be tuning the lute in preparation for a concert.

- Polypogon monspeliensis (created by Morray; nominated by Alborzagros) Polypogon monspeliensis, or annual rabbitsfoot grass, is a soft, fluffy annual grass native to southern Europe.

- Marian Dawkins (created by Royal Society; nominated by Crisco 1492) Marian Dawkins is a biologist who specialises in the study of animal behaviour and welfare. She believes that the primary focus of animal welfare should be the feelings of the animals themselves, but thinks that the question of whether animals have consciousness may not be answerable. Instead we should focus on their needs and wants.

- Switzerland – Five Francs (1914) (created by the Swiss National Bank; nominated by Godot13) The Swiss franc is the currency of Switzerland and Liechtenstein; this 5 franc note was issued by the Swiss National Bank. It bears three of the four national languages of the confederation, Romansh only becoming an official language in 1996. The note portrays William Tell with a rather clunky crossbow.

- Sameer Khan (created by Mydreamsparrow; nominated by Mydreamsparrow) Sameer Khan is a fashion choreographer who also trains contestants for beauty pageants such as WTN Miss Mangalore. He is "known for his unique style of bringing elegance in anything that is fashion." Hey, we're just repeating what's in the article, y'know?

- Achillea millefolium (created by PetarM; nominated by PetarM) Achillea millefolium or yarrow is a common perennial plant found in grasslands, gardens and open forests. The plant doesn't look very decorative and it was traditionally used as a medicinal plant. Nursery gardens nowadays have cultivars that are grown for decorative purposes as ornamental plants, mostly in colours like lemon and golden yellow, pink, red, and apricot. No green, black, or blue varieties are available.

- I am using their products, Hudson's Soap is the best!

Reinforcing Arbitration

Back in December last year, one of the remedies in the Interactions at GGTF case was to have Eric Corbett topic-banned from the Gender gap task force (GGTF). This has resulted in his being blocked multiple times for violating the topic ban. A discussion following one of the blocks placed on him, however, has resulted in a decision to make amendments and clarifications to the text of both the Discretionary sanctions and the Arbitration enforcement pages.

Background

The arbitration enforcement case began with a comment made by Eric Corbett on his own talk page. The comment was discussed at WP:AE, where Black Kite closed the discussion with no action taken. GorillaWarfare, however, blocked Eric Corbett for a month. This action was later taken up at the Arbitrator's noticeboard, where Reaper Eternal closed the discussion by unblocking Eric Corbett, reading the consensus as being that GorillaWarfare's block was "a bit out of process". The case was opened the next day, June 29.

The end result

After nearly two months of gathering evidence and much deliberation, the case was closed on August 24. With the closure, two facts were agreed upon. The first was that Eric Corbett's comment was the cause of the dispute. The second, and more important, was that GorillaWarfare's actions "fell foul of the rules set out in Wikipedia:Arbitration Committee/Discretionary sanctions#Appeals and modifications and in Wikipedia:Administrators#Reversing another administrator's action, namely the expectation that administrative actions should not be reversed without [...] a brief discussion with the administrator whose action is challenged." The case also found that Reaper Eternal violated Wikipedia:Arbitration Committee/Discretionary sanctions#Appeals and modifications, which requires, "for an appeal to be successful, a request on the part of the sanctioned editor and the clear and substantial consensus of [...] uninvolved editors at AN."

Because of these findings, the remedy was to delegate the drafters of the case to amend and clarify both WP:ACDS and WP:AE. What will that mean for the future of ArbCom? While nothing is certain for now, it is at least expected that the discretionary sanctions page will soon look completely different from its current state, though it is possible that more cases like this one will be brought. We'll just have to wait and see what impact this case will have.

OpenSym 2015 report; PageRank and wiki quality; news suggestions; the impact of open access

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

OpenSym 2015

OpenSym, the eleventh edition of the annual conference formerly known as WikiSym, took place on August 19 and 20 at the Golden Gate Club in the Presidio of San Francisco, USA, followed by a one-day doctoral symposium. While the name change (enacted last year) reflects the event's broadened scope towards open collaboration in general, a substantial part of the proceedings (23 papers and posters) still consisted of research featuring Wikipedia (eight) and other wikis (three, two of them other Wikimedia projects: Wikidata and Wikibooks), listed in more detail below. However, Wikipedia was not represented in the four keynotes, even if some of their topics did offer inspiration to those interested in Wikimedia research. For example, in the Q&A after the keynote by Peter Norvig (Director of Research at Google) about machine learning, Norvig was asked where such AI techniques could help human Wikipedia editors with high "force multiplication". He offered various ideas for applications of a "natural language processing pipeline" to Wikipedia content, such as automatically suggesting "see also" topics, potentially duplicate article topics, or "derivative" article updates (e.g. when an actor's article is updated with an award won, the list of winners of that award should be updated too). The open space part of the schedule saw very limited usage, although it did facilitate a discussion that might lead to a revival of a Wikitrust-like service in the not too distant future (similar to the existing Wikiwho project).

As in previous years, the Wikimedia Foundation was the largest sponsor of the conference, with the event organizers' open grant application supported by testimonials by several Wikimedians and academic researchers about the usefulness of the conference over the past decade. This time, the acceptance rate was 43%. The next edition of the conference will take place in Berlin in August 2016.

An overview of the Wikipedia/Wikimedia-related papers and posters follows, including one longer review.

- "Wikipedia in the World of Global Gender Inequality Indices: What The Biography Gender Gap Is Measuring" (poster)[1]

- "Peer-production system or collaborative ontology engineering effort: What is Wikidata?"[2] presented the results of an extensive classification of edits on Wikidata, touching on such topics as the division of labor (i.e. the differences in edit types) between bots and human editors. Answering the title question, the presentation concluded that Wikidata can be regarded as a peer production system now (i.e. an open collaboration, which is also more accessible for contributors than Semantic MediaWiki), but could veer into more systematic "ontology engineering" in the future.

- "The Evolution Of Knowledge Creation Online: Wikipedia and Knowledge Processes"[3]: This poster applied evolution theory to Wikipedia's knowledge processes, using the "Blind Variation and Selective Retention" model.

- "Contribution, Social networking, and the Request for Adminship process in Wikipedia"[4]: This poster examined a 2006/2007 dataset of admin elections on the English Wikipedia, finding that the optimal numbers of edits and talk page interactions with users to get elected as Wikipedia admin fall into "quite narrow windows".

- "The Rise and Fall of an Online Project. Is Bureaucracy Killing Efficiency in Open Knowledge Production?"[5] This paper compared 37 different language Wikipedias, asking which of them "are efficient in turning the input of participants and participant contributions into knowledge products, and whether this efficiency is due to a distribution of participants among the very involved (i.e., the administrators), and the occasional contributors, to the projects’ stage in its life cycle or to other external variables." They measured a project's degree of bureaucracy using the numerical ratio of the number of admins vs. the number of anonymous edits and vs. the number of low activity editors. Among the findings summarized in the presentation: Big Wikipedias are less efficient (partly due to negative economies of scale), and efficient Wikipedias are significantly more administered.

- "#Wikipedia on Twitter: Analyzing Tweets about Wikipedia": See the review in our last issue.

- "Page Protection: Another Missing Dimension of Wikipedia Research":[6] Following up on their paper from last year's WikiSym where they had urged researchers to "consider the redirect"[7] when studying pageview data on Wikipedia, the authors argued that page protection deserves more attention when studying editing activity - it affects e.g. research on breaking news articles, as these are often protected. They went through the non-trivial task of reconstructing every article's protection status at a given moment in time from the protection log, resulting in a downloadable dataset, and encountered numerous inconsistencies and complications in the process (caused e.g. by the combination of deletion and protection). In general, they found that 14% of pageviews are to edit-protected articles.

- "Collaborative OER Course Development – Remix and Reuse Approach"[8] reported on the creation of four computer science textbooks on Wikibooks for undergraduate courses in Malaysia.

- "Public Domain Rank: Identifying Notable Individuals with the Wisdom of the Crowd"[9] "provides a novel and reproducible index of notability for all [authors of public domain works who have] Wikipedia pages, based on how often their works have been made available on sites such as Project Gutenberg" (see also earlier coverage of a related paper co-authored by the author: "Ranking public domain authors using Wikipedia data").
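The protection-status reconstruction mentioned in the "Page Protection" item can be pictured as replaying log events in time order. The following is only a minimal sketch under stated assumptions: the `(timestamp, action, expiry)` tuple layout and the action names `"protect"` and `"unprotect"` are hypothetical simplifications, not the authors' dataset format or the MediaWiki log schema, and complications such as deletions are ignored entirely.

```python
from datetime import datetime
from typing import Optional

# Hypothetical, simplified log entry: (timestamp, action, expiry).
# action is "protect" or "unprotect"; expiry is None for indefinite protection.
LogEntry = tuple[datetime, str, Optional[datetime]]


def is_protected(log: list[LogEntry], when: datetime) -> bool:
    """Replay the log in time order and report the protection status at `when`."""
    protected = False
    expiry: Optional[datetime] = None
    for timestamp, action, entry_expiry in sorted(log, key=lambda e: e[0]):
        if timestamp > when:
            break  # later events cannot affect the status at `when`
        if action == "protect":
            protected, expiry = True, entry_expiry
        elif action == "unprotect":
            protected, expiry = False, None
    # A protection that has already expired by `when` no longer counts.
    if protected and expiry is not None and expiry <= when:
        return False
    return protected
```

Even this toy version has to handle expiring protections separately from explicit unprotections, which hints at why the authors describe the real reconstruction as non-trivial.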

"Tool-Mediated Coordination of Virtual Teams"

Review by Morten Warncke-Wang

"Tool-Mediated Coordination of Virtual Teams in Complex Systems"[10] is the title of a paper at OpenSym 2015. The paper is a theory-driven examination of edits done by tools and tool-assisted contributors to WikiProjects in the English Wikipedia. In addition to studying the extent of these types of edits, the paper also discusses how they fit into larger ecosystems through the lens of commons-based peer production[supp 1] and coordination theory.[supp 2]

Identifying automated and tool-assisted edits in Wikipedia is not trivial, and the paper carefully describes the mixed-method approach required to successfully discover these types of edits. For instance, some automated edits are easy to detect because they're done by accounts that are members of the "bot" group, while tool-assisted edits might require manual inspection and labeling. The methodology used in the paper should be useful for future research that aims to look at similar topics.

Measuring Wiki Quality with PageRank

Review by Morten Warncke-Wang and Tilman Bayer

A paper from the WETICE 2015 conference titled "Analysing Wiki Quality using Probabilistic Model Checking"[11] studies the quality of enterprise wikis running on the MediaWiki platform through a modified PageRank algorithm and probabilistic model checking. First, the paper defines a set of five properties describing quality through links between pages. A couple of examples are "temples", articles which are disconnected from other articles (akin to orphan pages in Wikipedia), and "God" pages, articles which can be immediately reached from other pages. A stratified sample of eight wikis was selected from the WikiTeam dump, and measures were extracted using the PRISM model checker. Across these eight wikis, quality varied greatly; for instance, some wikis have a low proportion of unreachable pages, which is interpreted as a sign of quality.
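To make the link-structure measures above more concrete, here is a rough sketch of how a "proportion of unreachable pages" and a list of pages without inbound links could be computed from a link graph. It uses a plain breadth-first search, which is not the paper's modified PageRank or its PRISM-based model checking; the function names and the dictionary representation of the wiki are assumptions made for illustration.

```python
from collections import deque


def all_pages(links: dict[str, list[str]]) -> set[str]:
    """Every page that appears as a link source or a link target."""
    return set(links) | {t for targets in links.values() for t in targets}


def unreachable_fraction(links: dict[str, list[str]], start: str) -> float:
    """Fraction of pages that cannot be reached from `start` by following links."""
    pages = all_pages(links) | {start}
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return 1 - len(seen) / len(pages)


def pages_without_inbound_links(links: dict[str, list[str]]) -> set[str]:
    """Pages that no other page links to (roughly, orphan pages)."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a self-link does not count as inbound
    }
    return all_pages(links) - linked_to
```

For a four-page wiki where "Main" links to "A" and "B", "A" links to "B", and an "Orphan" page links to "A", one page out of four is unreachable from "Main", and both "Main" and "Orphan" lack inbound links.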

The methodology used to measure wiki quality is interesting as it is an automated method that describes the link structure of a wiki, which can be turned into a support tool. However, the paper could have been greatly improved by discussing information quality concepts and connecting them more thoroughly to the literature, research on content quality in Wikipedia in particular. Using authority to measure information quality is not novel. In the Wikipedia-related literature we find it in Stvilia's 2005 work on predicting Wikipedia article quality[supp 3], where authority is reflected in the "proportion of admin edits" feature, and in a 2009 paper by Dalip et al.[supp 4], where PageRank is part of a set of network features that is found to have little impact on predicting quality. While these two examples aim to predict content quality, whereas the reviewed paper more directly measures the quality of the link structure, it is a missed opportunity for a discussion of what constitutes information quality. This discussion of information quality and how high quality can be achieved in wiki systems is further hindered by the paper not properly defining "enterprise wiki", leaving the reader wondering if there is much of an information quality difference at all between these and Wikimedia wikis.

The paper builds on an earlier one that the authors presented at last year's instance of the WETICE conference, where they outlined "A Novel Methodology Based on Formal Methods for Analysis and Verification of Wikis"[12] based on Calculus of communicating systems (CCS). In that paper, they also applied their method to Wikipedia, examining the three categories "Fungi found in fairy rings", "Computer science conferences" and "Naval battles involving Great Britain" as an experiment. Even though these only form small subsets of Wikipedia, computing time reached up to 30 minutes.

"Automated News Suggestions for Populating Wikipedia entity Pages"

A paper accepted for publication at the 2015 Conference on Information and Knowledge Management (CIKM 2015) by scientists from the L3S Research Center in Hannover, Germany, suggests news articles for Wikipedia articles to incorporate.[13] The paper builds on prior work that examines approaches for automatically generating new Wikipedia articles from other knowledge bases, accelerating contributions to existing articles, and determining the salience of new entities for a given text corpus. The paper overlooks some other relevant work about breaking news on Wikipedia,[supp 5] news citation practices,[supp 6] and detecting news events with plausibility checks against social media streams.[supp 7]

Methodologically, this work identifies and recommends news articles based on four features (salience, authority, novelty, and placement) while also recognizing that the relevance of news items to Wikipedia articles changes over time. The authors evaluate their approach using a corpus of 350,000 news articles linked from 73,000 entity pages. The model uses the existing news, article, and section information as ground truth and evaluates its performance by comparing its recommendations against the relations observed in Wikipedia. This research demonstrates that there is still substantial potential for using historical news archives to recommend revisions to existing Wikipedia content to make it more up-to-date. However, the authors did not release a tool to make these recommendations in practice, so there's nothing for the community to use yet. While Wikipedia covers many high-profile events, it nevertheless has a self-focus bias towards events and entities that are culturally proximate.[supp 8] This paper shows there is substantial promise in making sure all of Wikipedia's articles are updated to reflect the most recent knowledge.

"Amplifying the Impact of Open Access: Wikipedia and the Diffusion of Science"

Review by Andrew Gray

This paper, developed from one presented at the 9th International Conference on Web and Social Media, examined the citations used in Wikipedia and concluded that articles from open access journals were 47% more likely to be cited than articles from comparable closed-access journals.[14] In addition, it confirmed that a journal's impact factor correlates with the likelihood of citation. The methodology is interesting and extensive, calculating the most probable 'neighbors' for a journal in terms of subject, and seeing if it was more or less likely to be cited than these topical neighbors. The expansion of the study to look at fifty different Wikipedias, and covering a wide range of source topics, is welcome, and opens up a number of very promising avenues for future research - why, for example, is so little scholarly research on dentistry cited on Wikipedia, compared to that for medicine? Why do some otherwise substantially-developed Wikipedias like Polish, Italian, or French cite relatively few scholarly papers?

Unfortunately, the main conclusion of the paper is quite limited. While the authors do convincingly demonstrate that articles in their set of open access journals are cited more frequently, this does not necessarily generalise to say whether open access articles in general are - which would be a substantially more interesting result. It has previously been shown that as of 2014, around half of all scientific literature published in recent years is open access in some form - that is, a reader can find a copy freely available somewhere on the internet.[supp 9] Of these, only around 15% of papers were published in the "fully" open access journals covered by the study. This means that almost half of the "closed access" citations will have been functionally open access - and as Wikipedia editors generally identify articles to cite at the article level, rather than the journal level, it makes it very difficult to draw any conclusions on the basis of access status. The authors do acknowledge this limitation - "Furthermore, free copies of high impact articles from closed access journals may often be easily found online" - but perhaps had not quite realised the scale of 'alternative' open access methods.
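The "almost half" figure can be checked with a little arithmetic. The sketch below rests on two assumptions that go beyond what the review states: that the 15% refers to the open access half of the literature (rather than to all papers), and that citations are spread across these groups in the same proportions as the literature itself. The function and parameter names are purely illustrative.

```python
def functionally_open_share(oa_share: float, fully_oa_share_of_oa: float) -> float:
    """Share of papers in closed-access journals that are still freely available.

    oa_share: fraction of all papers that are open access in some form.
    fully_oa_share_of_oa: fraction of those published in fully open access journals.
    """
    fully_oa = oa_share * fully_oa_share_of_oa  # papers in fully OA journals
    other_oa = oa_share - fully_oa              # freely available by other routes
    closed_journal = 1.0 - fully_oa             # everything outside fully OA journals
    return other_oa / closed_journal


share = functionally_open_share(oa_share=0.5, fully_oa_share_of_oa=0.15)
print(f"{share:.1%}")
```

Under these assumptions, 7.5% of all papers sit in fully open access journals and 42.5% are open by other routes, so roughly 46% of the papers in closed-access journals are functionally open access, which is consistent with the review's "almost half".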

In addition, a plausible alternative explanation is not considered in the study: fully open access journals tend to be younger. Two-thirds of those listed in Scopus have begun publication since 2005, against only around a third of closed-access titles, which are more likely to have a substantial corpus of old papers. It is reasonable to assume that Wikipedia would tend towards discussing and citing more recent research (the extensively-discussed issue of "recentism"). If so, we would expect to see a significant bias in favour of these journals for reasons other than their access status.

Early warning system identifies likely vandals based on their editing behavior

- Summary by Srijan Kumar, Francesca Spezzano and V.S. Subrahmanian

“VEWS: A Wikipedia Vandal Early Warning System” is a system developed by researchers at the University of Maryland that predicts which users on Wikipedia are likely to be vandals before they are flagged for acts of vandalism.[15] In a paper presented at KDD 2015 this August, we analyze differences in the editing behavior of vandals and benign users. Features that distinguish between vandals and benign users are derived from metadata about consecutive edits by a user and capture time between consecutive edits (very fast vs. fast vs. slow), commonalities amongst categories of consecutively edited pages, hyperlink distance between pages, etc. These features are extended to also use the entire edit history of the user. Since the features only depend on the metadata from an editor’s edits, VEWS can be applied to any language Wikipedia.
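As an illustration of one of these metadata features, the time between consecutive edits can be bucketed into the three speed classes named above. This is only a sketch, not the VEWS implementation: the cut-off values and the function name are hypothetical, since the summary does not state the thresholds actually used.

```python
from datetime import datetime

# Hypothetical cut-offs in seconds; the summary does not give the real ones.
VERY_FAST_MAX = 180
FAST_MAX = 900


def gap_labels(edit_times: list[datetime]) -> list[str]:
    """Label the gap between each pair of consecutive edits by one user."""
    ordered = sorted(edit_times)
    labels = []
    for earlier, later in zip(ordered, ordered[1:]):
        gap = (later - earlier).total_seconds()
        if gap <= VERY_FAST_MAX:
            labels.append("very fast")
        elif gap <= FAST_MAX:
            labels.append("fast")
        else:
            labels.append("slow")
    return labels
```

A user with n edits yields n - 1 labels; such label sequences, alongside the other pairwise features, would then be fed to a classifier.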

For our experiments, we used a dataset of about 31,000 users (representing a 50-50 split of vandals and benign users), since released on our website. All experiments were done on the English Wikipedia. The paper reports an accuracy of 87.82% with a 10-fold cross validation, as compared to a 50% baseline. Even with the user’s first edit, the accuracy of identifying the vandal is 77.4%. As seen in the figure, predictive accuracy increases with the number of edits used for classification.

Current systems such as ClueBot NG and STiki are very efficient at detecting vandalism edits in English (but not in other languages), but detecting vandals is not their primary task. Straightforward adaptations of ClueBot NG and STiki to identify vandals yield modest performance. For instance, VEWS detects a vandal on average 2.39 edits before ClueBot NG. Interestingly, incorporating the features from ClueBot NG and STiki into VEWS slightly improves the overall accuracy, as depicted in the figure. Overall, the combination of VEWS and ClueBot NG is a fully automated vandal early warning system for English language Wikipedia, while VEWS by itself provides strong performance for identifying vandals in any language.

"DBpedia Commons: Structured Multimedia Metadata from the Wikimedia Commons"

Review by Guillaume Paumier

DBpedia Commons: Structured Multimedia Metadata from the Wikimedia Commons is the title of a paper accepted to be presented at the upcoming 14th International Semantic Web Conference (ISWC 2015) to be held in Bethlehem, Pennsylvania on October 11-15, 2015.[16] In the paper, the authors describe their use of DBpedia tools to extract file and content metadata from Wikimedia Commons, and make it available in RDF format.

The authors used a dump of Wikimedia Commons's textual content from January 2015 as the basis of their work. They took into account "Page metadata" (title, contributors) and "Content metadata" (page content including information, license and other templates, as well as categories). They chose not to include content from the Image table ("File metadata", e.g. file dimensions, EXIF metadata, MIME type) to limit their software development efforts.

The authors expanded the existing DBpedia Information Extraction Framework (DIEF) to support special aspects of Wikimedia Commons. Four new extractors were implemented, to identify a file's MIME type, images in a gallery, image annotations, and geolocation. The properties they extracted, using existing infobox extractors and the new ones, were mapped to properties from the DBpedia ontology.

The authors boast a total of 1.4 billion triples inferred as a result of their efforts, nearly 100,000 of which come from infobox mappings. The resulting datasets are now included in the DBpedia collection, and available through a dedicated interface for individual files (example) and SPARQL queries.

It seems like a missed opportunity to have ignored properties from the Image table. This choice caused the authors to re-implement MIME type identification by parsing file extensions themselves. Other information, like the date of creation of the file, or shutter speed for digital photographs, is also missing as a consequence of this choice. The resulting dataset is therefore not as rich as it could have been; since File metadata is stored in structured format in the MediaWiki database, it would arguably have been easier to extract than the free-form Content metadata the authors included.
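For readers unfamiliar with the approach, identifying a MIME type from a file extension can be sketched in a few lines of Python. This is an illustration only, not the authors' extractor: the function name and the small extension table are assumptions, and a real extractor would need a much longer mapping.

```python
import posixpath

# Hypothetical, deliberately incomplete extension table for illustration.
EXTENSION_TO_MIME = {
    ".jpg": "image/jpeg",
    ".jpeg": "image/jpeg",
    ".png": "image/png",
    ".svg": "image/svg+xml",
    ".ogg": "application/ogg",
    ".pdf": "application/pdf",
}


def mime_from_title(file_title):
    """Guess a MIME type from a Commons page title such as 'File:Sunset.JPG'."""
    # Drop the namespace prefix, then look at the lower-cased extension.
    name = file_title.split(":", 1)[-1]
    extension = posixpath.splitext(name)[1].lower()
    # Unknown or missing extensions yield None rather than a guess.
    return EXTENSION_TO_MIME.get(extension)
```

A lookup like this can only be as good as its table and the file name, which is one reason the MIME type already stored in the Image table would have been the more reliable source.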

It is also slightly disappointing that the authors didn't mention the CommonsMetadata API, an existing MediaWiki interface that extracts Content metadata like licenses, authors and descriptions. It would have been valuable to compare the results they extracted with the DBpedia framework with those returned by the API.
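As a rough idea of what such a comparison could start from, the sketch below builds a request URL for the metadata that the CommonsMetadata extension exposes through the MediaWiki `imageinfo` query (`iiprop=extmetadata`). The helper name and the example title are assumptions for illustration; the code only constructs the URL and does not contact the API.

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://commons.wikimedia.org/w/api.php"


def extmetadata_url(file_title):
    """Build a query URL asking for the extended metadata of one file."""
    params = {
        "action": "query",
        "prop": "imageinfo",
        "iiprop": "extmetadata",  # fields supplied by CommonsMetadata
        "titles": file_title,
        "format": "json",
    }
    return API_ENDPOINT + "?" + urlencode(params)


# Hypothetical file title, used only to show the shape of the request.
url = extmetadata_url("File:Example.jpg")
```

The JSON returned for such a request could then be compared, field by field, with the triples that DBpedia Commons publishes for the same file.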

Nonetheless, the work described in the paper is interesting in that it focuses on a lesser-known wiki than Wikipedia, and explores the structuring of metadata from a wiki whose content is already heavily soft-structured with templates. The resulting datasets and interfaces may provide valuable insights to inform the planning, modeling and development of native structured data on Commons using Wikibase, the technology that powers Wikidata.

Briefly

- Wikipedia in education as an acculturation process: This paper[17] looks at the benefits of using Wikipedia in the classroom, stressing, in addition to the improvement in writing skills, the importance of acquiring digital literacy skills. In other words, by learning how to edit Wikipedia, students acquire skills that are useful, and perhaps essential, in today's world, such as the ability to learn an online project's norms and values, to deal with trolls, and to work with others in collaborative online projects. The authors discuss these concepts through acculturation theory and develop their views further using grounded theory methodology. They portray learning as an acculturation process that occurs when two independent cultural systems (Wikipedia and academia) come into contact.

Other recent publications

A list of other recent publications that could not be covered in time for this issue – contributions are always welcome for reviewing or summarizing newly published research.

- "Depiction of cultural points of view on homosexuality using Wikipedia as a proxy"[18]

- "The Sum of All Human Knowledge in Your Pocket: Full-Text Searchable Wikipedia on a Raspberry Pi"[19]

- "Wikipedia Chemical Structure Explorer: substructure and similarity searching of molecules from Wikipedia"[20]

References

- ^ Maximilian Klein: Wikipedia in the World of Global Gender Inequality Indices: What The Biography Gender Gap Is Measuring. OpenSym ’15, August 19 - 21, 2015, San Francisco, CA, USA http://www.opensym.org/os2015/proceedings-files/p404-klein.pdf / http://notconfusing.com/opensym15/

- ^ Claudia Müller-Birn, Benjamin Karran, Markus Luczak-Roesch, Janette Lehmann: Peer-production system or collaborative ontology engineering effort: What is Wikidata? OpenSym ’15, August 19 - 21, 2015, San Francisco, CA, USA http://www.opensym.org/os2015/proceedings-files/p501-mueller-birn.pdf

- ^ Ruqin Ren: The Evolution Of Knowledge Creation Online: Wikipedia and Knowledge Processes. OpenSym ’15, August 19 - 21, 2015, San Francisco, CA, USA. http://www.opensym.org/os2015/proceedings-files/p406-ren.pdf

- ^ Romain Picot Clemente, Cecile Bothorel, Nicolas Jullien: Contribution, Social networking, and the Request for Adminship process in Wikipedia. OpenSym ’15, August 19 - 21, 2015, San Francisco, CA, USA http://www.opensym.org/os2015/proceedings-files/p405-picot-clemente.pdf

- ^ Nicolas Jullien, Kevin Crowston, Felipe Ortega: The Rise and Fall of an Online Project. Is Bureaucracy Killing Efficiency in Open Knowledge Production? OpenSym ’15, August 19 - 21, 2015, San Francisco, CA, USA http://www.opensym.org/os2015/proceedings-files/p401-jullien.pdf slides

- ^ Benjamin Mako Hill, Aaron Shaw: Page Protection: Another Missing Dimension of Wikipedia Research. OpenSym ’15, August 19 - 21, 2015, San Francisco, CA, USA. http://www.opensym.org/os2015/proceedings-files/p403-hill.pdf / downloadable dataset

- ^ Benjamin Mako Hill, Aaron Shaw: Consider the Redirect: A Missing Dimension of Wikipedia Research. OpenSym ’14, August 27 - 29, 2014, Berlin, Germany. http://www.opensym.org/os2014/proceedings-files/p604.pdf

- ^ Sheng Hung Chung, Khor Ean Teng: Collaborative OER Course Development – Remix and Reuse Approach. OpenSym ’15, August 19 - 21, 2015, San Francisco, CA, USA. http://www.opensym.org/os2015/proceedings-files/c200-chung.pdf

- ^ Allen B. Riddell: Public Domain Rank: Identifying Notable Individuals with the Wisdom of the Crowd. OpenSym ’15, August 19 - 21, 2015, San Francisco, CA, USA. http://www.opensym.org/os2015/proceedings-files/p300-riddell.pdf

- ^ Gilbert, Michael; Zachry, Mark (August 2015). "Tool-mediated coordination of virtual teams in complex systems" (PDF). Proceedings of the 11th International Symposium on Open Collaboration. pp. 1–8. doi:10.1145/2788993.2789843. ISBN 9781450336666. S2CID 11963811.

- ^ Ruvo, Giuseppe de; Santone, Antonella (June 2015). "Analysing Wiki Quality Using Probabilistic Model Checking" (PDF). 2015 IEEE 24th International Conference on Enabling Technologies: Infrastructure for Collaborative Enterprises. pp. 224–229. doi:10.1109/WETICE.2015.18. ISBN 978-1-4673-7692-1. S2CID 10868389.

- ^ Giuseppe De Ruvo, Antonella Santone: A Novel Methodology Based on Formal Methods for Analysis and Verification of Wikis doi:10.1109/WETICE.2014.25 http://www.deruvo.eu/preprints/W2T2014.pdf

- ^ Fetahu, Besnik; Markert, Katja; Anand, Avishek (October 2015). "Automated News Suggestions for Populating Wikipedia Entity Pages" (PDF). Proceedings of the 24th ACM International on Conference on Information and Knowledge Management. pp. 323–332. arXiv:1703.10344. doi:10.1145/2806416.2806531. ISBN 9781450337946. S2CID 11264899.

- ^ Teplitskiy, M.; Lu, G.; Duede, E. (2015). "Amplifying the impact of open access: Wikipedia and the diffusion of science". Journal of the Association for Information Science and Technology. 68 (9): 2116–2127. arXiv:1506.07608. doi:10.1002/asi.23687. S2CID 10220883.

- ^ Kumar, Srijan; Spezzano, Francesca; Subrahmanian, V.S. (August 2015). "VEWS: A Wikipedia Vandal Early Warning System" (PDF). Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. pp. 607–616. arXiv:1507.01272. doi:10.1145/2783258.2783367. ISBN 9781450336642. S2CID 2198041.

- ^ Vaidya, Gaurav; Kontokostas, Dimitris; Knuth, Magnus; Lehmann, Jens; Hellmann, Sebastian (October 2015). "DBpedia Commons: Structured Multimedia Metadata from the Wikimedia Commons" (PDF). Proceedings of the 14th International Semantic Web Conference.

- ^ Brailas, Alexios; Koskinas, Konstantinos; Dafermos, Manolis; Alexias, Giorgos (July 2015). "Wikipedia in Education: Acculturation and learning in virtual communities". Learning, Culture and Social Interaction. 7: 59–70. doi:10.1016/j.lcsi.2015.07.002.

- ^ Croce, Marta (2015-04-30). "Depiction of cultural points of view on homosexuality using Wikipedia as a proxy". Density Design.

- ^ Jimmy Lin: The Sum of All Human Knowledge in Your Pocket: Full-Text Searchable Wikipedia on a Raspberry Pi https://www.umiacs.umd.edu/~jimmylin/publications/Lin_JCDL2015.pdf Short paper, JCDL’15, June 21–25, 2015, Knoxville, Tennessee, USA.

- ^ Ertl, Peter; Patiny, Luc; Sander, Thomas; Rufener, Christian; Zasso, Michaël (2015-03-22). "Wikipedia Chemical Structure Explorer: substructure and similarity searching of molecules from Wikipedia". Journal of Cheminformatics. 7 (1): 10. doi:10.1186/s13321-015-0061-y. ISSN 1758-2946. PMID 25815062.

- Supplementary references and notes:

- ^ Benkler, Yochai; Nissenbaum, Helen (2006). "Commons-based Peer Production and Virtue*". Journal of Political Philosophy. 14 (4): 394. doi:10.1111/j.1467-9760.2006.00235.x. S2CID 10974424.

- ^ Malone, Thomas W.; Crowston, Kevin (1994). "The interdisciplinary study of coordination". ACM Computing Surveys. 26: 87–119. doi:10.1145/174666.174668. S2CID 2991709.

- ^ Stvilia, Besiki; Twidale, Michael B.; Smith, Linda C.; Gasser, Les (2005). "Assessing Information Quality of a Community-based Encyclopedia" (PDF). Proceedings of ICIQ 2005.

- ^ Dalip, Daniel Hasan; Cristo, Marco; Calado, Pável (2009). "Automatic quality assessment of content created collaboratively by web communities: A case study of wikipedia". Proceedings of the 9th ACM/IEEE-CS joint conference on Digital libraries. pp. 295–304. doi:10.1145/1555400.1555449. ISBN 9781605583228.

- ^ Keegan, Brian; Gergle, Darren; Contractor, Noshir (October 2011). "Hot off the Wiki: Dynamics, Practices, and Structures in Wikipedia's Coverage of the Tohoku Catastrophes" (PDF). Proc. WikiSym 2011.

- ^ Ford, Heather; Sen, Shilad; Musicant, David R.; Miller, Nathaniel (August 2013). "Getting to the source: Where does Wikipedia get its information from?" (PDF). Proc. WikiSym 2013.

- ^ Steiner, Thomas; van Hooland, Seth; Summers, Ed (May 2013). "MJ no more: using concurrent Wikipedia edit spikes with social network plausibility checks for breaking news detection". Proc. WWW 2013. arXiv:1303.4702.

- ^ Hecht, Brent; Gergle, Darren (2009). "Measuring self-focus bias in community-maintained knowledge repositories". Proceedings of the fourth international conference on Communities and technologies - C&T '09 (PDF). p. 11. doi:10.1145/1556460.1556463. ISBN 9781605587134. S2CID 8102524.

- ^ Archambault, É.; et al. (2014). Proportion of Open Access Papers Published in Peer-Reviewed Journals at the European and World Levels—1996–2013 (PDF) (Report). RTD-B6-PP-2011-2.