English Wikipedia editors: "We don't need no stinking banners"

Last month, we reported on discontent with fundraising on Wikipedia. It all came to a head this month, as a "Request for Comment" survey with wide participation rejected the current plans for the fundraising campaign. Luckily for us, given that this all happened three days before publication, the closing admin, Joe Roe, provided a thoughtful, nuanced summary of the dispute and decision:

“This was a request for comment (RfC) on whether the fundraising banners planned to be shown on the English Wikipedia in December 2022 were appropriate, and if not, what changes needed to be made. Based on the samples provided by the Wikimedia Foundation (WMF), there was a broad, near-unanimous consensus that these fundraising banners should not run on the English Wikipedia in their current form.

Nearly all participants agreed that the banner texts are at least partly untruthful, and that soliciting money by misleading readers is an unethical and inappropriate use of this project. Specifically, participants clearly identified that banners that state or imply any of the following are not considered appropriate on the English Wikipedia:

A significant minority of participants objected to running banner campaigns at all. In my view, this is beyond the scope of this RfC, and arguably out of the scope of local discussions on this project entirely. Similarly, there was substantial discussion of the WMF's fundraising model and financing in general which, as several participants noted, is probably better taken up in other venues (e.g. Meta). In any case, no consensus was reached on these issues.

Few participants explicitly supported the banners. Many of those who did acknowledged the problems summarised above, but concluded that the banners were acceptable because they were effective (at raising money), comparable to similar campaigns by other organisations, and/or an improvement over the WMF's campaigns in previous years. A number of members of WMF staff and the WMF Board of Trustees were amongst the most vocal in support of the banners. It is worth noting that, though their participation is as welcome as anyone else's, it also carries no more weight than anyone else's. Their comments (understandably) tended to focus on the potential ramifications that changes to fundraising on the English Wikipedia, which constitutes a significant portion of the Foundation's income, could have on the rest of the movement. Like critical comments from opposers on movement finances in general, I considered this discussion largely irrelevant in assessing consensus on the questions posed by this RfC. To the extent that they engaged with the specific objections summarised above, a number of supporters, including several Board members, acknowledged that there were problems with the fundraising text that the WMF has placed on the English Wikipedia, though they disagreed on whether this is a fit topic for discussion on this project.

There was also significant discussion of how this consensus should be enforced if the WMF chooses not to modify the banners before running them. This is a fraught topic given that our policies state that authorised acts of the WMF Board take precedence over consensus on this project, but that attempts to actually apply this principle have historically proved controversial. No consensus was reached on this issue, which is also, strictly speaking, outside the scope of this RfC. But taking off my closer's hat for a moment, I would like to stress that this needn't come up: the preferred outcome for almost all participants, I believe, is that the English Wikipedia community and relevant WMF staff can come to an agreement on the content of fundraising banners.”

Maryana Iskander, Chief Executive Officer of the Wikimedia Foundation, gave a detailed response to this, quoted below:

“I've been the CEO of the Wikimedia Foundation for nearly 11 months now. I am posting here as a follow-up to the Request for Comment to change fundraising banners.

I agree that it is time to make changes at the Wikimedia Foundation, including more direct community input into fundraising messaging. We have taken the guidance provided by the close of the RfC to change banners on the English Wikipedia campaign as early as Tuesday. The fundraising team welcomes your help and ideas on the specifics. The task at hand in responding to the guidance provided by the RfC is that Wikipedia's existence is dependent on donations. Donated funds are used primarily to support Wikipedia. I think what we heard is that while this may be true, how we say it matters. We need banners that better recognize the real stake our communities have in how we communicate to our donors. In the next few months, the fundraising team will work more closely with local communities to guide future campaigns. The Foundation will measure the financial results of using new banners in this year's English campaign, and we will share this information when the campaign is completed.

I will briefly address a few other areas of concern that were raised about the future direction of the Wikimedia Foundation, and commit to writing again in January after we finish this campaign. I believe some things at the Foundation can in fact be different, because they already are:

None of these things may happen as quickly as those of you who have been very frustrated for many years would like. I think we are heading more in the right direction, and I am sure you will tell me if we aren't. I will write again in January with more information. In the meantime, you can reach me on my talk page or by email. MIskander-WMF”

I'm sure we'll have an update of some sort next month as well. It's a bit inevitable once the fundraising campaign starts. Hopefully, though, it'll be entirely positive. – AC

WMF releases Fundraising Report and audited financial statements for 2021–2022

In related news, the Wikimedia Foundation this month published its –

- Fundraising Report for 2021–2022

- Consolidated Financial Statements for 2021–2022, complete with an Independent Auditors' Report by KPMG

Note that the Wikimedia Foundation's financial year runs from July 1 to June 30.

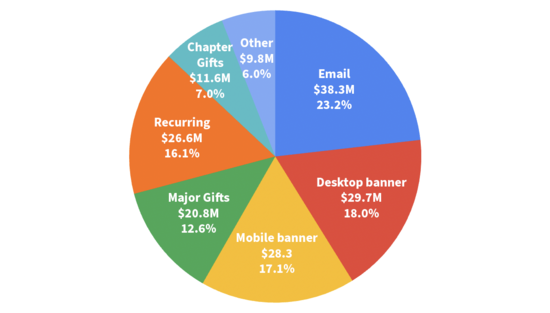

Fundraising Report

In 2021–2022, the Wikimedia Foundation raised $165,232,309 from over 13 million individual donations, an increase of more than $10 million over the year prior. $58 million, or 35.1% of the donations total, was brought in by banner campaigns on Wikipedia. The breakdown was as follows:

For comparison, the donations total in 2020–2021 was $154,763,121 raised from over 7.7 million donors (a different way of counting was used this year), with banner campaigns bringing in $57.3 million, or 37% of the total.

As in 2020–2021, the Wikimedia Foundation ran a fundraising campaign in India this financial year (see previous Signpost coverage; note that while the 2021 Indian fundraising campaign was cancelled, the 2020 campaign was not held in the spring but in August, thus falling into the 2020–2021 financial year).

Consolidated Financial Statements

The Financial Statements revealed an unusual situation: for the first time in its history, the Wikimedia Foundation recorded negative investment income, of –$12 million. Investment income had been positive at +$4.4 million in 2020–2021 and +$5.5 million in 2019–2020. At the time of writing, the Wikimedia Foundation had not responded to questions about the precise circumstances responsible for the negative result.

- Total support and revenue was $155 million (a decrease of $8 million compared with the year prior, as the negative investment result cancelled out the increase in donations).

- Total expenses were $146 million (an increase of $34 million, or 30.5%, over the year prior). Some key expenditure items:

- Salaries and wages rose to $88 million (an increase of $20 million, or 30%, over the year prior).

- Professional service expenses: $17 million.

- Awards and grants: $15 million.

- Other operating expenses: $12 million.

- Internet hosting: $2.7 million.

- Net assets at end of year increased by $8 million to $239 million (net assets increased by $51 million in the year prior). Interestingly, the third-quarter (January–March 2022) Tuning Session presentation published by Finance & Administration in May 2022 still forecast a net asset increase of $25.9 million for the year.

For the 2022–2023 financial year, the Annual Plan envisages both income and expenditure rising to $175 million, representing a planned increase in revenue of $20 million and a planned increase in expenses of $29 million (20%, more than twice the rate of inflation) compared with the year prior (total expenses in 2021–2022 were $146 million).
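For readers who want to check the percentages quoted in this section, the short Python sketch below recomputes them from the article's own figures. Apart from the two exact donation totals, the inputs are the rounded million-dollar amounts given in the text, so some results differ slightly from the reported percentages (rounded inputs give about 30.4% for expense growth rather than 30.5%, and about 29.4% for salary growth); the variable and function names are ours, not the WMF's.

```python
"""Recompute the percentages quoted in the 2021-22 WMF finance summary."""

# All amounts in millions of US dollars, taken from the article's text.
# The two donation totals are exact; everything else is rounded.
DONATIONS = {"2020-21": 154.763121, "2021-22": 165.232309}
BANNER_INCOME = {"2020-21": 57.3, "2021-22": 58.0}
EXPENSES_2021_22 = 146.0
EXPENSE_INCREASE = 34.0      # increase over 2020-21
SALARIES_2021_22 = 88.0
SALARY_INCREASE = 20.0       # increase over 2020-21
PLANNED_EXPENSES_2022_23 = 175.0


def share_pct(part: float, whole: float) -> float:
    """Percentage of `whole` accounted for by `part`, to one decimal place."""
    return round(100 * part / whole, 1)


def growth_pct(new: float, old: float) -> float:
    """Percentage growth from `old` to `new`, to one decimal place."""
    return round(100 * (new - old) / old, 1)


summary = {
    "banner share 2020-21 (%)": share_pct(BANNER_INCOME["2020-21"], DONATIONS["2020-21"]),
    "banner share 2021-22 (%)": share_pct(BANNER_INCOME["2021-22"], DONATIONS["2021-22"]),
    "donation increase ($M)": round(DONATIONS["2021-22"] - DONATIONS["2020-21"], 2),
    "expense growth 2021-22 (%)": growth_pct(EXPENSES_2021_22, EXPENSES_2021_22 - EXPENSE_INCREASE),
    "salary growth 2021-22 (%)": growth_pct(SALARIES_2021_22, SALARIES_2021_22 - SALARY_INCREASE),
    "planned expense growth 2022-23 (%)": growth_pct(PLANNED_EXPENSES_2022_23, EXPENSES_2021_22),
}

for label, value in summary.items():
    print(f"{label}: {value}")
```

The planned 2022–2023 growth is measured against actual 2021–2022 expenses of $146 million, matching the comparison made in the paragraph above.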

According to the minutes of the June 2022 Wikimedia Foundation board meeting, WMF board members and executives looking ahead at the 2022–2023 financial year now underway anticipated "moderate growth in terms of staffing. Next year, the fundraising team will be increasing targets in each of their major streams, with a particular focus in Major Gifts." – AK

Brief notes

- Wikimedia Summit event report: Wikimedia Deutschland has published further reports on the 2022 Wikimedia Summit: the Event Report including financial info (overall cost was a little over 500,000 euros) and the participants' feedback report (the hybrid format appears to have been a success this time). Further documentation of the event is available here.

- Page views: Page views across all wikis were up in September compared with previous months, at 23,657,615,038 views versus 23,033,712,122 in August. Wikipedia has been growing in popularity since January 2016, when page views stood at 20,865,413,322, and according to Wikimedia Statistics, the growth appears steady.

- Administrators: TheresNoTime voluntarily relinquished their CheckUser and Oversight permissions after remedies revoking these privileges in the "Reversal and reinstatement of Athaenara's block" arbitration case reached 5 to 1 in favour, and also resigned as an admin. They remain a Steward, and later reclaimed their admin status here on the English Wikipedia as well.

- Milestones: Chechen Wikipedia reached 500,000 articles; the traditional orthography Belarusian Wikipedia reached 80,000 articles; Banjar Wikipedia and Hakka Wikipedia reached 10,000 articles; and Dagbani Wikipedia, Guarani Wikipedia, and Maltese Wikipedia reached 5,000 articles. In Wiktionaries, Sango Wiktionary reached 40,000 entries; Shan Wiktionary reached 30,000 entries; and Lombard Wiktionary reached 20,000 entries.

- Articles for Improvement: This week's Article for Improvement is Medjed, followed by Human history (starting 5 December), then Telephone line (beginning 12 December). Please be bold in helping improve these articles!

- BernsteinBot disabled: HaleBot has been updating the List of Wikipedians by number of edits in place of BernsteinBot since mid-October. We thank BernsteinBot for its service.

- Easy, automatic journal access for editors: The Wikipedia Library has made Taylor & Francis academic journals automatically available for anyone with a Wikimedia unified login, using OCLC EZproxy (via T&F press release).

"The most beautiful story on the Internet"

Could ads turn Wikipedians into Facebook content moderators?

In a well-timed coincidence for this issue, an Australian Broadcasting Corporation Op-Ed by Nicholas Agar asked "Could ads turn Wikipedians into Facebook content moderators?". Agar, a professor of ethics, notes that with the wrong policies around content monetization, the Wikimedia Foundation could "turn Wikipedia into just another tech business using its vast store of data to pursue profit". He recommends the Foundation "ask for help, not money"... – B, J

Internet search in Russia

The BBC investigated how well internet search engines were working in Russia, where Yandex has 65% of the market, followed by Google with 35%. Using a virtual private network (VPN) to make its requests appear to originate in Russia, the BBC searched both engines for controversial topics; it also used the VPN to make Google requests appear to come from the UK. All requests were typed in Russian. For example, they searched for Bucha, the Ukrainian town where hundreds of civilians were killed during the current war. Yandex search results predominantly gave links to sites following the Russian government's viewpoint. "Glimpses of independent reporting only occasionally appeared in Yandex search results with links to Wikipedia articles or YouTube." Google searches originating in Russia were a bit better, and Google searches originating in the UK gave a full range of viewpoints, even with the search requests typed in Russian. – S

Maybe not so altruistic after all

Many have told the tale of the dramatic flameout of Sam Bankman-Fried's cryptocurrency exchange FTX and sister company Alameda Research, following a series of boneheaded moves that require a couple of whiteboards to explain in full detail. Suffice it to say that there was a bunch of money, and now there isn't.

In a Washington Post article titled "The do-gooder movement that shielded Sam Bankman-Fried from scrutiny", Nitasha Tiku claims that his lost fortune may have been built – at least in part – on his connections in the effective altruism (EA) community. Bankman-Fried's net worth was estimated at $15.6 billion in early November. The bankruptcy of his cryptocurrency firms, and the devaluation of his own securities, is expected to leave him with a net worth estimated at jack shit, and one million unpaid creditors. Yowza! Tiku went further to say that there was an "EA group devoted to writing Wikipedia articles about EA"; it's unclear whether this refers to off-wiki coordination, or merely to the existence of a legitimate EA WikiProject on the English Wikipedia. – S, B, JPxG

Toaster hoax

The BBC has published an in-depth article and radio programme about the Alan MacMasters toaster hoax, featuring interviews with the protagonists as well as Heather Ford (see Book review in this issue). "How did this hoaxer get away with it for so long? And how did an eagle-eyed 15-year-old eventually manage to expose his deception?" (See also prior Signpost coverage in August's In the media, titled "Alan MacMasters did not invent the electric toaster".) – B

In brief

- "La plus belle histoire de l'Internet": "Let's fight for Wikipedia, 'the most beautiful story on the Internet'", says La Libre Belgique (in French). The article provides a pretty good one-paragraph summary of the "permanent tension" between radical inclusionists and those who see unfettered "openness as a contradiction of the principles of encyclopedism".

- Encyclopedias are fun: A journalist for Australian science magazine Cosmos shares their view on Wikipedia's position in the global information ecosystem. Do you agree that it "tap[s] the implicit expert within all of us", supplanting "experts and institutions which ring-fenced their expertise"?

- Embraced by higher ed: "Wikipedia, Once Shunned, Now Embraced in the Classroom" - Inside Higher Ed

- Sportsball: Angry Bills fans have taken over Duke Shelley's Wikipedia page (Sports Illustrated)

- "I don't think I'll ever finish": Jess Wade was interviewed by Mashable, and Vice joined in praising her; see also the coverage in last month's In the media.

- Modern pagans want to be Pagans: The question is whether to spell the word "Pagan" with a capital "P". True believers point out that the names of other religions are capitalized as proper nouns; Wikipedians have raised the issue but have failed to come to a simple answer.

- Afrikaans error: Did Charlize Theron make fun of a Wikipedia error in the number of Afrikaans speakers (Eyewitness News, South Africa)? Or was it just an "ill-informed" statement, as CNN suggested without mentioning Wikipedia as the source of the misinformation?

- Back in the old days: In the context of a notional Twitterverse without moderation, US sportsperson Tyrese Haliburton reminisces about the old days when Wikipedia "wasn't always correct"... is someone going to break the news to him? (AP News)

- Got a turkey craving? Try Wikipedia for a taste of wildlife facts, as others have, according to this review of an academic report. (Mongabay via Tacoma News Tribune)

- A notable feat: Notable filmmaker Gracie Otto says, "A friend once told me that everyone I've dated has a Wikipedia page" (Sydney Morning Herald). Perhaps she should start a notable dating service?

- "Is Wikipedia's independence at risk?" asks Volkskrant (in Dutch), covering Wikipedia fundraising and recent Wikipedia-related AI initiatives by Facebook parent Meta.

Lisa Seitz-Gruwell on WMF fundraising in the wake of big banner ad RfC

Much has been said, thought, and shouted about this month's big Request for Comment on the Wikimedia Foundation's proposed banner ads for 2022. The RfC was closed on the 24th by Joe Roe, citing a "broad, near-unanimous consensus that these fundraising banners should not run on the English Wikipedia in their current form". This interview with Lisa Seitz-Gruwell, the WMF's Chief Advancement Officer, was carried out a couple of days later. Questions were submitted by The Land.

1. The Wikimedia Foundation has indicated some significant changes to its fundraising as a result of a recent Request for Comment. For those who haven't been following – what's changed? How much input are English Wikipedia editors going to have in fundraising banners from now on?

The close of the RfC included some clear direction about some of the messaging that we've used in different fundraising banners. We have been following this RfC over the past two weeks and collecting feedback and specific suggestions about how to change the banners based on some of the comments in the RfC. We created a new page where we are sharing banner messaging for the upcoming English Wikipedia banner campaign alongside volunteers. That page includes five options for banners that were written with ideas from volunteers, and a space for volunteers to suggest other ideas as well.

We will be introducing more direct community input into fundraising messaging. That's a commitment that our CEO Maryana Iskander emphasized in her note to the community on November 25. We don't know what that process looks like yet; however, the fundraising team welcomes your help and ideas on the banners.

2. Could you explain what happens inside the WMF when Wikipedians raise concerns about something? For instance, concerns about fundraising messaging have been raised one way or another for some time now. At what point does the WMF notice community concern on an issue, and at what point does it act on it?

There are many people across the Foundation who are constantly reading, discussing and responding to the questions we hear from the communities. For example, Julia Brungs, JBrungs (WMF), Advancement's Community Relations Specialist, is dedicated full-time to engaging our communities around fundraising and can usually be found on talk pages answering questions and sharing the latest updates. With such a global movement, community conversations happen in a variety of different spaces, including on and off-wiki, and determining what the prevailing feeling is amongst a majority of volunteers is not always easy.

There are some changes that staff can make on their own in response to community feedback, and they do so immediately. However, there are some requests with far-reaching impacts that require buy-in from others. For example, the changes that we are making to fundraising in response to the RfC may have significant budgetary impacts for the Wikimedia Foundation. A decision of this magnitude is not one that the fundraising team can or would make by itself. The Board of Trustees have been thoughtful partners as we've made changes to the fundraising approach in response to the RfC, with the understanding that this may require adjustments to the budget.

3. In recent years the Wikimedia Foundation's expenditure has grown significantly, but the amount of fundraised income has grown even faster than expenditure. In some ways, this is a great problem to have. But will there come a point where the Foundation has enough money?

We try to fundraise more than we spend because it is a best practice for nonprofits to have operating reserves. For example, in order to receive the highest rating from nonprofit rating agencies like Charity Navigator, a nonprofit must have a minimum of 12 months of operating expenses held in reserve. Recently, the board adopted a working capital policy that defines how much the Foundation should have in reserves, which they set at 12 to 18 months of operating expenses. If the annual budget grows, we have to in turn grow the reserve in order to stay within that target range which is why we try to raise more than we spend.

But I want to get to the heart of your question of whether the Foundation has the money it needs. We have a vision to share the sum of all knowledge and a 2030 strategic direction. We have a lot more work to do to come close to achieving these goals, whether that is maintaining and improving our sites so more people can access and participate in knowledge, supporting the growth of knowledge in other languages (many of which have far less content than English Wikipedia), increasing awareness about the Wikimedia projects – the list goes on. As Maryana stated in her response, the Foundation has seen rapid growth over the past several years. We're not going to continue to grow at the rate we have in the past. The emphasis will be on better delivering on these goals with the resources we have.

4. What's the purpose of the Wikimedia Endowment, and how can community members be sure that the money in it will be spent in line with our values?

The Wikimedia Endowment's purpose is “to act as a permanent fund that can support in perpetuity the operations and activities of current and future Wikimedia projects.” If you look at the mission statement of the Wikimedia Foundation, the phrase “in perpetuity” is there, meaning we are called upon to build something that ensures we can fulfill the mission forever. Until we founded the Wikimedia Endowment seven years ago, we had very little we could point to that was focused on the long term. Currently, we are in the “Endowment Building Phase,” meaning we are building up the principal of the fund. Last year, we hit our initial fundraising goal, and we are now considering the plan going forward. We also conducted interviews last year with donors and community members to get their ideas on what the Endowment should focus on supporting. The Community Committee of the Wikimedia Endowment, which includes community members Phoebe Ayers and Patricio Lorente as trustees, is developing a proposal for what the Endowment should support in the short term, which will be shared in 2023.

5. What are the Wikimedia Foundation's internal policies and expectations about ethical fundraising, and are there any regulatory or professional codes of practice that the Foundation follows? For instance – does the Foundation follow the Association of Fundraising Professionals Code of Ethical Standards?

Ethical fundraising shouldn't just be a policy, but part of our overall culture. This year, we conducted a training on the “Culture of Philanthropy,” first for the fundraising team and then as a plenary at our annual all-staff meeting. This is the idea of acknowledging and working for the common good, the same type of intrinsic motivation that I think drives many in our volunteer communities.

Within that overall approach, we follow best practices for ethical fundraising – including those laid out in the AFP policy, such as valuing the privacy of groups impacted by our fundraising, prioritizing mission over personal gain, and staying up to date on various ethical codes in the philanthropic profession. There is one part of the AFP Code that is slightly at odds with how we operate in that we share more data about our overall fundraising revenue and provide more frequent unofficial data than they recommend, in order to be as transparent as possible.

Further, many staff are members of organizations like the AFP and attend their trainings and conferences. We also have several policies of our own to guide ethics including the Gift Policy which includes a new Anti Harassment Statement, Donor Privacy Policy, the Wikimedia Foundation Code of Conduct, and the Universal Code of Conduct. We train all new fundraising staff on these policies as a part of the onboarding process.

6. How do you see the Wikimedia Foundation's fundraising evolving in future? Are there any challenges on the horizon? In the long term, do you expect fundraising to be decentralised, in line with the Movement Strategy recommendations?

Change is the only constant in our fundraising. We keep evolving our fundraising based on the organization's and the movement's needs and external factors.

Over the years, we have diversified our revenue strategy. Increasingly, people are accessing Wikimedia content in other places besides our sites, including through voice assistants. The potential challenges mean that we need to continue to adapt our fundraising model as we have done over the years, shifting from a primary model of readers seeing banners on the desktop version of Wikipedia, to also engaging on mobile devices, over email, and a monthly giving program, among other things. The Endowment, discussed above, and Wikimedia Enterprise, are also strategies to increase our long term resilience.

Both in the long term and the short term, we expect continued changes in how we raise funds for the movement and where those funds go. When it comes to decentralizing fundraising, we are having conversations with affiliates about that now. In addition, the new funding strategy for movement grants embraces a more participatory decision-making model where volunteer regional committees evaluate and make decisions on grants. There are many other discussions happening that will also have an impact on this question, such as the concept of regional hubs and what that means for the role of affiliates, the Foundation, and new structures in the movement. I don't have answers to these questions yet, and this is something that we will need to decide together alongside the communities.

Privacy on Wikipedia in the cyberpunk future

- Ladsgroup has actively edited Wikipedia since 2006. On the Persian Wikipedia, he's a bureaucrat, oversighter and CheckUser. He currently works as a Staff Database Architect for the WMF. As a volunteer, he helps build tools for CheckUsers. This article was written in his role as a volunteer, and any opinions expressed do not necessarily reflect the opinions of The Signpost, the WMF, or of other Wikipedians.

When you edit Wikipedia, your edits are public. We all know that. But do you know what that actually entails?

Can others tell if I have multiple accounts (such as sockpuppets)?

Some trusted users, called CheckUsers, are able to see your IP address and user agent. This means they may be able to tell roughly where you live, and perhaps where you study or work. They don't disclose such information, and its use is governed by a very strict policy. However, that's not the only way you can be identified.

The way you use language is unique to you; it's like a fingerprint. There is a substantial body of research on this. With simple natural language processing tools, you can extract discussions from Wikipedia and link accounts that have similar linguistic fingerprints.

What does this mean? It means people will be able to find, guess or confirm their suspicions on other accounts you have. They will be able to link between multiple accounts without needing access to private data that could reveal where you live or work.
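To make the idea of a linguistic fingerprint concrete, here is a deliberately simple Python sketch. It is our own toy illustration, not the CheckUser tool described later in this article: it compares character-trigram frequency profiles with cosine similarity, a common baseline in authorship attribution. The account names and comments are invented, and real stylometric analysis uses much more text and many more features; a high score is a hint, not proof.

```python
"""Toy stylometry: compare accounts by character-trigram profiles."""
from collections import Counter
import math


def char_ngrams(text: str, n: int = 3) -> Counter:
    """Count overlapping character n-grams in lower-cased, whitespace-normalised text."""
    normalised = " ".join(text.lower().split())
    return Counter(normalised[i:i + n] for i in range(len(normalised) - n + 1))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse count vectors: 1.0 means identical proportions."""
    dot = sum(count * b[gram] for gram, count in a.items() if gram in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_candidates(target_text: str, candidates: dict[str, str]) -> list[tuple[str, float]]:
    """Rank candidate accounts by how closely their writing matches the target's."""
    target = char_ngrams(target_text)
    scores = [(name, cosine_similarity(target, char_ngrams(text)))
              for name, text in candidates.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical talk-page comments; the account names are invented.
target_comment = "Strongly oppose this merge, per WP:NOTNEWS. Frankly, the sourcing is thin."
candidates = {
    "ExampleAccountA": "Strongly oppose this deletion, per WP:NOTNEWS. Frankly, the sources are thin.",
    "ExampleAccountB": "Support. The article is well referenced and clearly notable.",
}

for name, score in rank_candidates(target_comment, candidates):
    print(f"{name}: {score:.2f}")
```

Character n-grams are used here because they pick up habits such as punctuation, spelling variants and favourite policy shortcuts without needing any grammar-aware parsing.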

Who can analyze my edits?

Wikimedia projects are public: the license means that all information hosted on them can be reused for any purposes whatsoever, and the privacy policy allows for analysis of edits or other information publicly shared for any reason.

That means anyone with resources or knowledge can analyze data trends in your edit history, such as when you edit, what words you use, what articles you have edited. As technology has advanced, tools for analyzing trends in user data have as well, and include things as basic as edit counters, and as complex as anti-abuse machine learning systems, such as ORES and some anti-vandal bots. Academics have begun utilizing public data to develop models for combatting abuse on Wikipedia using machine learning and artificial intelligence systems, and volunteer developers have created systems that utilize natural language processing in order to help identify malicious actors.
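Edit timing is another public signal of this kind. The sketch below is again a hypothetical illustration, not any existing tool: it turns a list of edit timestamps into a 24-hour UTC activity profile and compares two profiles using histogram intersection (our choice of measure). Timestamps like these are visible in any account's public contribution history, for example through the MediaWiki Action API's `list=usercontribs` module; fetching them is left out to keep the example self-contained, and the sample data is invented.

```python
"""Toy timing analysis: compare when two accounts tend to edit (UTC hours)."""
from collections import Counter
from datetime import datetime


def hourly_profile(timestamps: list[str]) -> list[float]:
    """Fraction of edits falling in each UTC hour, as a 24-element list.

    Expects ISO 8601 timestamps like "2022-11-24T18:05:00Z", the format
    MediaWiki uses for edit timestamps.
    """
    hours = Counter(datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ").hour for ts in timestamps)
    total = sum(hours.values())
    if total == 0:
        return [0.0] * 24
    return [hours[h] / total for h in range(24)]


def profile_overlap(a: list[float], b: list[float]) -> float:
    """Histogram intersection: 1.0 for identical activity patterns, 0.0 for no overlap."""
    return sum(min(x, y) for x, y in zip(a, b))


# Invented sample data for three hypothetical accounts.
account_a = ["2022-11-01T18:05:00Z", "2022-11-02T19:10:00Z", "2022-11-03T18:45:00Z"]
account_b = ["2022-11-05T18:30:00Z", "2022-11-06T19:00:00Z"]
account_c = ["2022-11-01T03:00:00Z", "2022-11-02T04:15:00Z"]

profile_a = hourly_profile(account_a)
print("A vs B:", round(profile_overlap(profile_a, hourly_profile(account_b)), 2))
print("A vs C:", round(profile_overlap(profile_a, hourly_profile(account_c)), 2))
```

On its own, timing is weak evidence: many editors share a time zone and a daily routine, so a signal like this is at most one input among several.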

As with anything, these technologies can be abused. That's one of the risks of an open project: an oppressive government or a big company can invest in such tools and download Wikimedia dumps. They can even go further and cross-check the results with social media posts. While this is unlikely in most cases, editors in parts of the world where free speech is limited should be conscious of what information they share on Wikipedia and other Wikimedia projects.

Besides external entities, volunteers have been building such tools to help CheckUsers do their job better, with the potential to reduce how often private data needs to be accessed. The tool we showed graphs from here is already used on several wikis, but the developer makes it available only to the CheckUsers of each wiki. The tool doesn't just give a number; it builds plots and graphs to make decision-making easier.

Can we ban using AI tools?

Legally, there's nothing we can do to stop external entities from using this data: it's enshrined in our license and privacy policy[1] that it's free to use for whatever purpose people see fit.

Because of this, restrictions on the use of natural language processing or other automated or AI abuse detection systems that do not directly edit Wikimedia projects are not possible. Communities could amend their local policies to prohibit blocks based on such technologies or to prohibit consideration of such analysis when deciding whether or not there is cause to use the CheckUser tool. Local projects cannot, however, prevent use of natural language processing or other tools completely because of the nature of the license and the current privacy policy.

Notes

- ^ From the Privacy policy: "You should be aware that specific data made public by you or aggregated data that is made public by us can be used by anyone for analysis and to infer further information, such as which country a user is from, political affiliation and gender."

Missed and Dissed

It's hardly controversial to note that the government of a certain country has had a troubling history of being involved with shady things and then lying about it (and that this is hardly a problem unique to that nation). But the fact that this is true in many cases does not necessarily make it true in any given case. Before we continue, I will pose you a question: Bernie Madoff is well-known to have stolen a bunch of stuff in 2008, and someone stole my bicycle in 2008, so do you think it was Bernie Madoff?

More to the point: do you think the United States Department of Homeland Security is dicking around with pages on Wikipedia?

Stephen Harrison answers "no" in his aptly titled Slate article No, Wikipedia Is Not Colluding With DHS. He responds to claims from various online raconteurs on Twitter that the United States Department of Homeland Security and the Wikimedia Foundation colluded to influence content on Wikipedia prior to the 2020 United States elections (said raconteurs overlapping with the much-vaunted recession affair which the Signpost covered previously). Harrison goes into how Wikipedia actually dealt with the 2020 elections, mentioning that all the meetings between the WMF and the DHS were publicly announced at the time, and detailing what volunteers did to help Wikipedia maintain neutrality. See also The Signpost's post-2020-election coverage, "Relying on Wikipedia: voters, scientists, and a Canadian border guard". Harrison calls the framing of the events "insulting, especially to the volunteer Wikipedia editors who do the hard work of curating reliable information for the site."

But what was the whole deal of it? Well, the specific claims Harrison addresses from Twitter loudmouths are based on much broader claims (and implications) from The Intercept in their October article "Truth Cops" (which TechDirt ripped the bajeezus out of), featuring a lot of stuff like this:

| “ | Prior to the 2020 election, tech companies including Twitter, Facebook, Reddit, Discord, Wikipedia, Microsoft, LinkedIn, and Verizon Media met on a monthly basis with the FBI, CISA, and other government representatives. According to NBC News, the meetings were part of an initiative, still ongoing, between the private sector and government to discuss how firms would handle misinformation during the election. | ” |

One has to admit that this sounds pretty disconcerting, but a trained eye can see some cracks in the concrete. First of all, Wikipedia isn't a "tech company", it's an encyclopedia, hosted on a website where all the discussions are public. I sure as hell didn't see User:Winston (Ministry of Truth) poking around at the Village Pump, so the only possible thing this could be referring to is the Wikimedia Foundation, which is a whole different entity, and most importantly, does not concern itself with editing articles here.

This brings us to the second thing, which is the crux of it all – few understand this – the WMF is not in charge of editing articles. It is true that they often "tackle" something, "assign a team" to something, or "investigate" something, but they do not "edit articles" except under extremely limited circumstances, which generally create peculiarly-shaped clouds of wikidrama visible from outer space, of which none have been spied over any American politics articles lately.

Yes, it is true that they put out an enormous report about tackling disinformation during the 2020 election. And it is true that this report contains a bunch of broad gesturing to the effect that they took a bunch of direct actions. But here, you can again read between the lines, and see that they were borrowing a shoulder for the tackle, so to speak: "Security and T&S, once it hit their radars respectively, moved quickly in trying to identify and coordinate resolutions". Coordinate resolutions? That's not editing! They sent a bunch of emails, to volunteer editors, telling them that someone was trying to dick around with Wikipedia, which is already very much against the rules, and the volunteers dealt with it.

I mean, look at this: "T&S needed some time to find an active steward on IRC". What kind of lousy COINTELPRO operation would need to sit around twiddling its thumbs while waiting for some random unpaid hobbyist to tab over to irssi?

Now, why the WMF has such a penchant for exaggerating their role in political editing, and for making their own activities sound creepy – like offering recommendations about a "Content Oversight Committee" to issue binding decisions about "harmful content" – is hard to understand. And, indeed, much has been written about the troubling recent phenomenon where vague, protean categories of harmful information are being increasingly "tackled", "addressed" and "investigated" by vague, protean organizations and consortia. However, in this instance, it would seem that Bernie Madoff did not steal my bicycle.

Diminishing returns for article quality

- Julle began editing the Swedish Wikipedia in 2004. He's authored a book, Wikipedia inifrån (Wikipedia from the Inside), published in 2022, and currently works for the Wikimedia Foundation's Product Department. This text is unrelated to his work, and the opinions expressed are his own.

When Wikipedia took over the world, it wasn't on the basis of article quality. If Wikipedia one day is replaced, it likely won't be because someone does what we do better.

In his widely influential 1997 book The Innovator's Dilemma, American scholar of business administration Clayton Christensen investigated how dominant technologies are overtaken by new ones, and how the old organisations rarely managed to retain their positions as their industries shifted to a new paradigm. A key observation in Christensen's book is how the new technology, a product that is a break from tradition rather than a continuous improvement of the existing technology, is typically not better than the one it is replacing, but merely cheaper, simpler, more convenient.[1]

This echoes our experience: while Wikipedia's large language versions have long compared favourably with traditional printed encyclopedias, this largely happened after we achieved our position as the predominant source of information. In recent years, our reputation has changed for the better in countries like the United States and Sweden, as people belatedly realised that the Wikipedia of 2018 was not the same thing as the Wikipedia of 2004, but this was not necessarily correlated with increased readership in these areas.[2] Quality seems largely irrelevant to readers' decision to use us as a source of information – for that, we didn't need to be good. We just needed to be good enough, and then other factors – price, easy access – made all the difference.

To do what Wikipedia does

There have been attempts to do what Wikipedia does but better, like Citizendium or Everipedia. They seem doomed to fail, and not only because some of these endeavours insist that key aspects behind Wikipedia's success, such as the low threshold of entry, are defects to correct. Most importantly, it doesn't matter if they succeed in their ambition or not: one can't dislodge a supremely dominant entity like Wikipedia – entrenched in the fabric of the internet, with superb name recognition, hundreds of thousands of editors – by doing the same thing but slightly better. No product can win by modestly improving what the users are already doing; as human beings, we put a value on something simply because we are already using it.[3] There is no oxygen left to breathe for an English encyclopedia competing in the same niche. The strong competitors to Wikipedia exist in languages where the Wikimedia movement has been obstructed, like Baidu Baike in China.

Anyone wanting to dislodge Wikipedia from its place in the information ecosystem can't have article quality as their main selling point. This isn't just because the Wikipedian system of quality control, chaotic as it seems in theory, sort of works in practice, and our articles are often quite good – but because Wikipedia was good enough for the readers to start using it a long time ago. We have years of continuous editing and improvements beyond that point. It's not that Wikipedia is perfect, merely that we're probably way past the mark where additional quality will be attractive enough to change reader behaviour. Whether this is an indication of Wikipedia’s excellence or a reason for bleak despair as we look at how humans handle information may be in the eye of the beholder.

The rust in our machinery

There are, of course, concerns. Wikipedia was created with the assumption that anyone reading it would also be sitting in front of a keyboard. The conflation of the reader and the writer, the erasure of the strict line between the two roles, depended on the readers having the tools to efficiently contribute. Not only does this seem inherently more difficult on a phone – while certain things, such as patrolling, could arguably be equally easy or easier on a phone if our workflows weren't still primarily built for desktop users, most find adding text and references easier with access to a bigger screen and a physical keyboard – but we have over the years erected barriers ourselves. It is increasingly difficult to write new articles. And so we're at risk when the constant editor attrition in some wikis outpaces the recruitment of new writers.

Many who try are thwarted not by the confusing code or technology, but by the sheer amount of norms and guidelines we have produced over the years. Wikipedia's guidelines have, like gneiss, been formed under external pressure, in reaction to attempts to fool or influence the encyclopedia, or out of our own recognition of our shortcomings. As we have grown, our concerns have shifted, in what seems to be a general pattern on Wikipedias of a certain size and age: from making sure we have the information to better controlling the information. Other changes seem to stem from our internal definition of who we are. We gradually move towards stricter interpretation of our policies, like English Wikipedia's recent decision to require more sources to prove notability of Olympic athletes, or how we prune the lush garden that is in-universe content related to popular culture, defining what is fancruft to be weeded out. This is where English alternatives to Wikipedia can thrive, rather than in the space we so firmly occupy: the corners we explicitly don't want, like the Fandom wikis, serving another purpose.

Quality and the reader

In their 2014 study, Lehmann et al.[4] mapped reader behaviour to see how it corresponded to our definition of article quality. Readers behave in different ways: they can read an article with focus, explore a topic by jumping from article to article, or give it a cursory glance. Whether someone would sit down and spend time reading the entire article or just wanted to quickly peek at it had very little to do with our concept of article quality.[4] A common interpretation seems to be that Wikipedia, where we often invest time in what interests us rather than in what readers are likely to look for, has a problem: article quality is misaligned with the topics our readers are interested in – a pattern we see not just in what our audience chooses to read carefully, but also in page views.[5]

A different explanation would be that our concept of quality doesn't necessarily coincide with readers' needs. The goal of the encyclopedic article is to arm the reader with the right amount of knowledge. As we try to find the right amount of information to serve, we celebrate ambition and length. This is not necessarily wrong: there seems to be a correlation, albeit weak, between quality, as defined by the Wikipedia community, and reader trust in the article.[6] It is important that we actively fight disinformation in our articles; requiring sources is our best tool for doing so. However, a featured Wikipedia article has most likely long surpassed what would satisfy the reader.

Technology and shifts

Wikipedia grew in a symbiotic relationship with the concept of the search engine, not least Google. The encyclopedia significantly enhanced the quality of information gained when searching, and the search engines escorted readers to Wikipedia. Since then, some of that balance has shifted, as Google now retains readers by serving the information they are looking for directly in the search results.[7] Even the limited information in the Google Knowledge Graph, put together from various sources including Wikipedia and Wikidata, is often good enough. But increasingly, new internet users seem to abandon search engines as a way of looking for information.[8][9] They are happy using TikTok: to them, using the platform where they already spend their time is a simpler and more convenient way of looking for information.

We're not a company. We don't exist to bring value to shareholders and our purpose is not to be a tool for enrichment. When we work on our articles, we don't do it to better position ourselves, but to better fulfil our mission. To some degree, we don't have competitors: if someone is providing the world with information, they are merely doing what we want to be done. There is an argument to be made that we should do our thing, and if someone else comes along and does something else, something better, fine – we have served our purpose. But there are values which might make Wikipedia worth defending, even if information would be available elsewhere: our belief in neutrality, in transparency, and in being able to show the reader where we collected the information. These are principles which deserve to survive technological shifts.

The day Wikipedia is replaced, it will likely be by something completely different that didn't even set out to compete with the Wikimedia wikis. There will be a niche we don't cover where a new initiative can thrive, find its audience, and grow until it – like we did – takes up so much room there isn't enough oxygen left for us to breathe.

Article quality is important, as a method to achieve our mission. But article quality will not in itself save us if technology and user patterns leave us behind.

References

- ^ Christensen, Clayton (2016). The Innovator's Dilemma: When New Technologies Cause Great Firms to Fail. Boston: Harvard Business Review Press. ISBN 978-1-63369-178-0.

- ^ Total pageviews, Swedish Wikipedia January 2016 to October 2022. Wikistats. Retrieved 19 October 2022.

- ^ Gourville, John T. (2006). "Eager Sellers and Stony Buyers: Understanding the Psychology of New-Product Adoption". Harvard Business Review. 84 (6): 98–106. Retrieved 2022-10-08.

- ^ a b Lehmann, Janette; Müller-Birn, Claudia; Laniado, David; Lalmas, Mounia; Kaltenbrunner, Andreas (2014). "Reader preferences and behavior on Wikipedia". Proceedings of the 25th ACM Conference on Hypertext and Social Media. Association for Computing Machinery. pp. 88–97.

- ^ Warncke-Wang, Morten; Ranjan, Vivek; Terveen, Loren; Hecht, Brent (2015). "Misalignment Between Supply and Demand of Quality Content in Peer Production Communities". Proceedings of the 9th International AAAI Conference on Web and Social Media (ICWSM).

- ^ Elmimouni, Houda; Forte, Andrea; Morgan, Jonathan (September 2022). "Why People Trust Wikipedia Articles: Credibility Assessment Strategies Used by Readers". OpenSym '22: Proceedings of the 18th International Symposium on Open Collaboration. Association for Computing Machinery. pp. 1–10.

- ^ McMahon, Connor; Johnson, Isaac; Hecht, Brent (3 May 2017). "The Substantial Interdependence of Wikipedia and Google: A Case Study on the Relationship Between Peer Production Communities and Information Technologies". Proceedings of the Eleventh International AAAI Conference on Web and Social Media. pp. 142–151.

- ^ Moon, Julia (2022-07-28). "Why I Use Snap and TikTok Instead of Google". Slate. Retrieved 2022-10-08.

- ^ Rebollo, Clara (2022-09-14). "Rápido, adictivo y entra por los ojos: TikTok ya es el buscador de la generación Z". El País (in Spanish). Retrieved 2022-10-08.

Writing the Revolution

- Writing the Revolution: Wikipedia and the Survival of Facts in the Digital Age is a new book by Heather Ford, with a foreword by Ethan Zuckerman. It was published by MIT Press on November 15, 2022. Ford is Associate Professor and Head of Discipline for Digital and Social Media, School of Communication, University of Technology Sydney, as well as a former member of the Wikimedia Foundation advisory board.

- Andreas Kolbe is a former co-editor-in-chief of The Signpost, and has been a Wikipedia contributor since 2006.

Heather Ford's Writing the Revolution is a timely book. Wikipedia, along with its younger sibling Wikidata, is as influential as it has ever been. Its contents inform the Google Knowledge Graph and are read out by Apple's, Amazon's and Google's voice assistants to millions of people, as definitive answers to users' queries. It's a level of success few people would have predicted fifteen or twenty years ago.

It's also a development worth questioning. While facts can be contested and changed on Wikipedia, this possibility is lost when Wikipedia content is delivered by an electronically generated voice or displayed as incontrovertible fact in a search engine's knowledge panel: You cannot argue with your voice assistant, and Google is famously unresponsive to users wishing to draw the company's attention to errors in its knowledge panels. As Ford says in the first chapter,

Wikipedia is no longer just an encyclopedia. Like Google, Wikipedia constitutes critical knowledge infrastructure. Like electricity grids, telephone and sewerage networks, platforms including Wikipedia and Google provide knowledge infrastructure that millions of people around the world depend on to make decisions in everyday life. Despite their importance, we are usually unaware of the workings of our knowledge infrastructure until it breaks down. When that happens, we get a glimpse not only of how platforms share data between one another but also the attempts of people trying to influence their representations.

Given Wikipedia's near-ubiquity (China being the most notable exception; the country has its own huge, crowdsourced, internet encyclopedias, and Wikipedia is not welcome), it is therefore appropriate to scrutinise how information on Wikipedia is compiled. This applies in particular to current-affairs articles, which, in a departure from the traditional encyclopaedia model, are among the most consulted on Wikipedia, shaping perceptions and thus actually influencing the very events they are reporting on.

Ford focuses her attention on the development over time of a single Wikipedia article, the one on the 2011 Egyptian revolution, from its preparation in the days before the 2011 Egyptian protests even started, through its early Sturm und Drang days on-wiki, and on to the present day.

Retold in meticulous detail, based on both edit histories and interviews with the Wikipedians who shaped the article's contents and development, it becomes a fascinating journey, a microcosm that serves to illustrate the practical operation of Wikipedia's collaborative editing process, the strategies contributors employ to make content "stick", and the functioning and occasional non-functioning of the policies and guidelines supposedly steering and controlling the entire enterprise.

Above all, the story Ford recounts makes clear how much emotion there is in Wikipedia. Writing Wikipedia is not a dry, analytical endeavour designed to arrive at a sober description of consensus reality, but one driven by passion and rewarded by the occasional exultation. As she recounts the actions of Wikipedians like The Egyptian Liberal, Ocaasi, Tariqabjotu, Lihaas, Mohamed Ouda, Amr, Silver seren, Aude and others, it becomes clear that struggle is inherent in the process:

Because of Wikipedia's status not only as an encyclopedia, as one representation among many, but also as the infrastructure for the production and travel of facts, the ways in which decisions are made about which descriptions, explanations, and classifications are selected over others become critical. Wikipedia is authoritative because it seems to reflect what is the consensus truth about the world. How, then, is so-called consensus arrived at? Who (or what) wins in these struggles? What does it take to win? How do battles play out across the chains of circulation in which datafied facts now travel? Does this ultimately represent a people’s history, reflective of our global collective intelligence? Is history now written by algorithms, or do certain groups and actors actually dominate that representation?

These are the questions that the book seeks to answer. Journalists may write the first draft of history, and historians document expert accounts by revisiting those sources after events have occurred. But on Wikipedia, accounts of historic events are being created in ways that are more powerful and more popular than any single authoritative source. Rather than representing global consensus, the facts that are curated and circulated through the web’s most trusted terrains are the result of significant struggles and the constant discarding of alternatives. Some actors and technologies prevail in this struggle, while other knowledges are either actively rejected or never visible from the start.

This is the premise of the book, and it succeeds brilliantly at explaining how Wikipedia works to the general public – and indeed to Wikipedians themselves, who may have become so habituated to Wikipedia's internal processes that they don't consciously perceive them anymore, just like a carpenter who uses a hammer daily generally only has eyes for the structures built, rather than the tool they use to build them.

In the end, Ford argues that our knowledge infrastructure suffers from three key weaknesses: first, Wikipedia is vulnerable to crowds driven by collective emotion; second, algorithms that carry Wikipedia facts to other delivery channels remove the traces of the facts' origins; and, third, knowledge authority is vested in a very small number of platforms, all hosted in the United States.

Ford's Writing the Revolution provides a more clear-eyed explanation of what Wikipedia is, who Wikipedians are, and why it matters, than any other book published to date. It reveals a profoundly human project – full of human talent and human flaws – as well as a writer whose own humanity shines through in the way she relates the stories of the Wikipedians involved.

____________

More about this book has been written elsewhere –

- Financial Times

- Prospect Magazine

- Lit Hub's Andrew Keen interviews Heather Ford on Now.TV (41 minutes; introduction ends at 3:30)

There is also a related article by Heather Ford on The Conversation:

Galactic dreams, encyclopedic reality

"AI" is a silly buzzword that I try to avoid whenever possible. First of all, it is poorly defined, and second of all, the definition is constantly changing for advertising and political reasons. If you want an example of this, look at this image, which illustrates our own article on "AI": it was generated using a single line of code in Mathematica. Simply put, the "AI effect" is that "AI" is always defined as "using computers to do things computers aren't currently good at", and once they're able to do it, people stop calling it "AI". If we just say the actual thing that most "AI" is – currently, neural networks for the most part – we will find the issue easier to approach. In fact, we have already approached it: the Objective Revision Evaluation Service has been running fine for several years.

With that said, here is some silly stuff that happened with a generative NLP model:

Meta, formerly Facebook, released their "Galactica" project this month, a big model accompanied by a long paper. Said paper boasted some impressive accomplishments, with benchmark performance surpassing current SoTA models like GPT-3, PaLM and Chinchilla – Jesus, those links aren't even blue yet, this field moves fast – on a variety of interesting tasks like equation solving, chemical modeling and general scientific knowledge. This is all very good and very cool. Why is there a bunch of drama over it? Probably some explanation of how it works is appropriate.

While we have made ample use of large language models in the Signpost, including two long articles in this August's issue which turned out pretty darn well, there is a certain art to using them to do actual writing: they are not mysterious pixie dust that magically understands your intentions and synthesizes information from nowhere. For the most part, all they do is predict the next token (roughly, a word or a piece of a word) in a sequence – really, that's it – after having been exposed to vast amounts of text to get an idea of which tokens are likely to come after which other tokens. If you want to get an idea of how this works on a more basic level, I wrote a gigantic technical wall of text at GPT-2. Anyway, the fact that they can form coherent sentences, paragraphs, poems, arguments, and treatises is purely a side effect of text completion (which has some rather interesting implications for human brain architecture, but that is beside the point right now). The important thing to know is that they just figure out what the next thing is going to be. If you type in "The reason Richard Nixon decided to invade Canada is because", the LLM will dutifully start explaining the implications of Canada being invaded by the USA in 1971. It's not going to go look up a bunch of sources and see whether that's true or not. It will just do what you're asking it to, which is to say some stuff.
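The next-token loop can be sketched in a few lines. This is a toy, assuming whole words as tokens and a bigram table (which word has followed which) in place of a neural network; real large language models use subword tokens and condition on far more context. The corpus and function names are made up for illustration. What carries over is the shape of the loop: pick a plausible next token, append it, repeat, with no step anywhere that checks facts.

```python
import random
from collections import defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, list[str]]:
    """For every word, record each word that followed it in the training text."""
    followers: dict[str, list[str]] = defaultdict(list)
    for sentence in corpus:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers


def complete(prompt: str, followers: dict[str, list[str]],
             max_tokens: int = 8, seed: int = 0) -> str:
    """Extend the prompt one word at a time by sampling a word that has
    followed the previous word before. Nothing here checks whether the
    resulting sentence is true."""
    rng = random.Random(seed)
    words = prompt.split()
    for _ in range(max_tokens):
        if not words:
            break
        options = followers.get(words[-1])
        if not options:
            break  # this word was never followed by anything in training: stop
        # Words that followed more often appear more often in the list,
        # so rng.choice samples in proportion to observed frequency.
        words.append(rng.choice(options))
    return " ".join(words)


# Hypothetical training text; none of it mentions Nixon.
corpus = [
    "the president decided to invade the country because of oil",
    "the country was invaded because of a border dispute",
    "Canada is a country in North America",
]
model = train_bigrams(corpus)
print(complete("The reason Richard Nixon decided to invade Canada is because", model))
```

Given the Nixon prompt, the toy model happily carries on with "of ..." simply because "because" was always followed by "of" in its training text; whether Canada was ever invaded never enters into it.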

This would have been a great thing to explain on the demo page, but for some reason it was decided that the best way to showcase this prowess would be to throw a text box up on the Internet, encouraging users to type in whatever and generate large amounts of text, including scientific papers, essays... and Wikipedia articles.

So we made a request for an article about The Signpost in the three days the demo was up. The writing was quite impressive, and indeed was indistinguishable from a human's output. You could learn a lot from something like this! The problem is that we were learning a bunch of nonsense: for example, we apparently started out as a print publication. Unfortunately, we didn't save the damn thing, because we didn't think they were going to take everything down three days after putting it up. The outlaws at Wikipediocracy did, so you can see an archived copy of their own attempt at a Galactica self-portrait, which is full of howlers (compare to their article over here).

Ars Technica later wrote a scathing review of the demo. They note several issues, and a little digging into their sources turned up a Twitter user who managed to get Galactica to write papers on the benefits of eating crushed glass. The results had the basic appearance of valid sources while containing claims like "Crushed glass is a source of dietary silicon, which is important for bone and connective tissue health", and one generated review paper described all the studies showing that feeding pigs crushed glass is great for improving weight gain and reducing mortality. Of course, if there were health benefits of eating crushed glass, this is probably what papers about it would look like, but as it stands, the utility of such text is dubious. The same goes for articles on the "benefits of antisemitism", which mrgreene1977 wisely did not quote from, but one can imagine what kind of tokens would come after what kind of other tokens.

Will Douglas Heaven's article for MIT Technology Review "Why Meta's latest large language model survived only three days online" leads with the statement, "Galactica was supposed to help scientists. Instead, it mindlessly spat out biased and incorrect nonsense", and things get worse from there. Apparently, the algorithm was prone to backing up its points (like a wiki article about spacefaring Soviet bears) with fake citations, sometimes from real scientists working in the field in question. Lovely! Well worth reading, with far too many great examples in there to quote, and even more if you follow their suggestion to look at Gary Marcus's blog post on it.

In their defense, the Galacticans did note, at the bottom of a long explanation of how much the website rules:

| “ | Language Models can Hallucinate. There are no guarantees for truthful or reliable output from language models, even large ones trained on high-quality data like Galactica. NEVER FOLLOW ADVICE FROM A LANGUAGE MODEL WITHOUT VERIFICATION. [...] Galactica is good for generating content about well-cited concepts, but does less well for less-cited concepts and ideas, where hallucination is more likely. [...] Some of Galactica's generated text may appear very authentic and highly-confident, but might be subtly wrong in important ways. This is particularly the case for highly technical content. | ” |

But then, even when used correctly, it had problems. The MIT Technology Review report links to an attempt by Michael Black, director at the Max Planck Institute for Intelligent Systems, to get Galactica to write on subjects he knew well; he came away thinking Galactica was dangerous: "Galactica generates text that's grammatical and feels real. This text will slip into real scientific submissions. It will be realistic but wrong or biased. It will be hard to detect. It will influence how people think." He instead suggests that those who want to do science should "stick with Wikipedia".

Perhaps it would be best to give the last, rather spiteful word to Yann LeCun, Meta's chief AI scientist: "Galactica demo is offline for now. It’s no longer possible to have some fun by casually misusing it. Happy?"

What does it mean for us?

Most of the issues and controversies we run into with ML models follow a familiar pattern: some researcher decides that "Wikipedia" is an interesting application for a new model, and creates some bizarre contraption that serves basically no purpose for editors. Nobody wants more geostubs! But this is not a problem with the underlying technology.

The field of machine learning is growing extremely quickly, both in terms of engineering (the implementation of models) and in terms of science (the development of vastly more powerful models). Anyone who has an opinion about these things is simply going to be wrong about anything a few months from now. These models will only grow in importance, and I think that any editor who does not try to read as much about them as possible and keep abreast of developments is doing themselves a disservice. Not wanting to be a man of talk and no action, I wrote GPT-2 (while its successor model is more relevant to current developments, it has essentially the same architecture as the old one, and if you read about GPT-2 you will understand GPT-3).

Moreover, we have already been tackling the issue of neural nets on our own terms: the Objective Revision Evaluation Service has been running fine for several years. It seems to me that, if we were to approach these technologies with open minds, it could be possible to resolve some of our most stubborn problems, and bring ourselves into the future with style and aplomb. I mean, anything is possible. For all we know, the Signpost might start putting out print editions.

The Six Million FP Man

Sometimes, we all reach milestones in our time at Wikipedia. Sometimes you reach 100 featured articles. Sometimes you get elected to ArbCom. Sometimes you hit 600 featured pictures, which, as far as I can tell, is more than anyone else has ever achieved, about 8.2% of all featured pictures, and the result of fifteen years of work.

And sometimes, no one else cares about this fact.[1] So how does one write an article about oneself while not appearing completely vain and self-promotional? Well, one doesn't, but let's do it anyway because it'll be at least a couple years until the next milestone.

Option one: Select some of your favourites

Why not make a gallery of your favourite restorations, showing off how much work you put into these? For example, you could go to your user page and copy over the conveniently pre-formatted list you made, that shows before and after!

(Gallery: selected restorations, before and after)

It's a good start! But maybe some sort of animation too?

...Perfect!

Option two: How about a history?

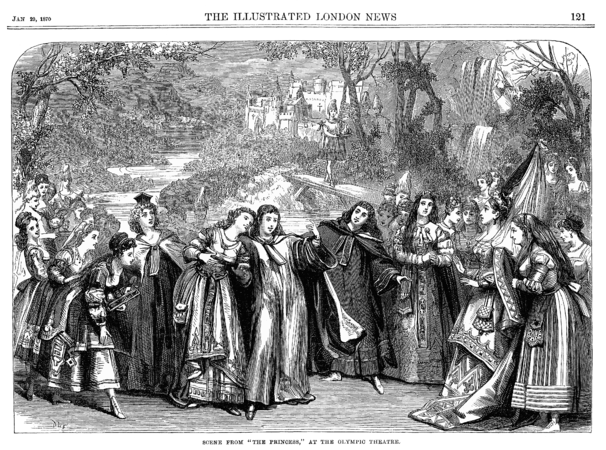

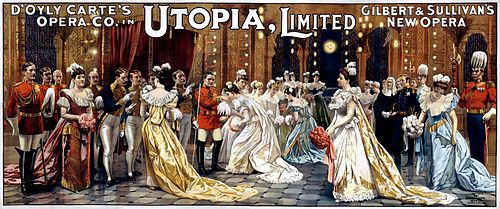

You could describe how you got into your field of editing. For example, I got into image restoration through an image that I don't even count as one of my "official" list of featured pictures anymore (I do my official count based on the ones featured on Adam Cuerden, which ignores or gives half-value to anything I didn't work hard enough on, leaves out a lot of my very early works, and definitely ignores anything I just nominated). It's an illustration to the play The Princess by W. S. Gilbert. It's not the biggest restoration, nor the most impressive original, but if you look roughly under the "T" of "THE ILLUSTRATED LONDON NEWS" you'll see a very obvious white line that shouldn't be there. I spent hours fixing that in Microsoft Paint. 2007 was a very different time. I got better from there.

(Gallery: original and restoration)

By 2009, I was scanning my own books, and doing rather impressive images from Gustave Doré. Would I do it differently now? Well, I'd probably fix up the border a bit, but it's not bad. It's also so large that I couldn't upload the original file, because Commons wasn't configured to allow anything as large as a lossless file of that image would have to be:

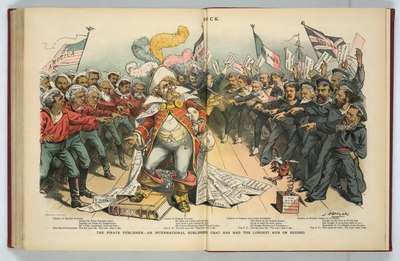

2010 saw the stitching together of the poster of Utopia Limited we saw earlier. 2012 saw this incredibly difficult Battle of Spottsylvania image, from about the time I started to get a bit more confident with colour:

[Gallery: Before and After]

In 2016 I went to Wikimania in Esino Lario, met Rosie Stephenson-Goodknight, and was introduced to Women in Red. This was when I realised that there was rather a gender bias in my contributions, and I began working to improve things. It wasn't that I hadn't done images of women before, but they were a sometimes food, and images of women should be more of a main course. Here's a selection of my favourite images of women I brought to featured pictures after joining Women in Red, in no particular order, because Wikipedia galleries work best if you space out landscape images with as many portrait-orientation ones as possible:

I was originally planning for Ulmar to be my 600th featured picture. However, the vagaries of "Does Featured Picture Candidates have enough participation for things to pass?" said no, which brings us to our next technique of shameless self-promotion.

Option three: Talk about the thing that pushed you over the top

One could discuss the thing that pushed you over the top, and how it relates to your history on Wikipedia. While I don't talk about it much, I have eight featured articles; my first, from October 2006, was W. S. Gilbert, and that really got me into Wikipedia as a whole.[2]

So, when choosing something significant to my Wikipedia career....

- My 600th Featured Picture: W. S. Gilbert by Elliott & Fry

[Gallery: Before and After]

I had been looking for a high-resolution picture of him for, well, probably over a decade. I stumbled upon the Digital Public Library of America, decided to give it a go, and found this, of all places, in the University of Minnesota library collections. But then, I suppose it's always going to be somewhere a little unexpected once you've checked everywhere you expected. I think this is one of my featured pictures where you really need to zoom in to see the work done, but having an image of him that can safely be enlarged to about a foot wide is probably going to be very helpful to a lot of Gilbert and Sullivan societies out there.

Oh, and to answer the obvious question: Arthur Sullivan is, if anything, harder to find an image of than Gilbert. I did find one (he's Featured Picture number 601), but it wasn't easy.

It was kind of odd, though: I found him in a collection I thought I already knew very well. Which just goes to show you, I suppose. Anyway, he will hopefully be joining many more in the coming months and years. See you at Number 700!

Note

(Wiki)break stuff

Sometimes life gets messy. Here are a few tips on not sweating the small stuff.

Life!

Like most people, I happen to have a life. The pandemic years have been a bit more chaotic for me than for most, involving a poorly timed job loss, moving multiple times, unstable employment, etc. This didn't really get in the way of my editing, save perhaps for not having access to my computer for a few days here and there. Most recently, however, I decided to return to school, which involves a two-hour commute a few times a week and lots of homework and projects on top of class time, all during a period of financial strain caused mostly by bureaucratic paperwork hellholes that have yet to be resolved.

It's this last straw that kind of butted up against my Wikipedia priorities. While I wanted to write more Tips and Tricks columns (you might have noticed they were missing from the last two issues), and, if push came to shove, I could certainly have done so, the reality was that I just didn't have it in me if I wanted to remain sane and still dabble in my other, more social hobbies. I needed the famous Wikibreak. I still edited, mostly on weekends, but I couldn't get long stretches of time with a clear mind, so I mostly dabbled in mindless stuff like reference cleanup.

But I'm important, I can't not edit!

Imagine my stress at the thought of me, a Vanguard Editor, or, dare I say, a Grand Gom, the Highest Togneme of the Encyclopedia, with over 407,000 edits, reducing my output? I'm currently the 78th most prolific editor on the English Wikipedia, having recently been overtaken by Liz and fallen from 77th. What if [Placeholder Editor], with their mere 402,000 edits, surpasses me, and I tumble down to 79th? What then? What then?

If you ever find yourself in a similar situation, or have similar thoughts about your own importance to the community, I would invite you to read our article on Grand Poobahs, and realize that my 407,000 edits as of writing, which sounds like a lot (and it is a lot), is really not that much compared to the 1.17 billion total edits made by the community. Mine amount to roughly 0.035% of all edits ever made. I am a drop in the ocean.

This is not to say that you bring nothing to the table. Vandal fighting, DYKs on the front page, new page patrol, adding missing citations, removing poorly sourced content, creating new articles about an underrepresented topic or community, fighting copyright violations... these things all matter. But it helps to remind yourself that the world (or worse, Wikipedia) will not end if you take it slow for a while. Maybe it'll be a little bit less awesome if you're not around, but in the long run, Wikipedia will be better off if you take a break when you need it. On your return, you'll be in a better state of mind all around, and you'll also find yourself less prone to getting into silly arguments, you'll be less bite-y, and it'll be easier to assume good faith of your fellow editors.

Recognizing you need a break

The hardest step in taking a Wikibreak, at least for certain Wikipediholics, is recognizing that you need one. Common symptoms include:

- Having trouble managing your work/life/editing balance in general

- Having a feeling of dread when you log on to Wikipedia

- Being uninspired to edit when you normally have dozens of ideas about what you could edit

- Editing until 3 AM when you really should be sleeping because you need to get up at 6 AM

- Finding yourself neglecting "real life" activities/chores/priorities because you don't have enough time to do everything

For me, it was simply recognizing that my long term goals were incompatible with maintaining my regular editing habits and commitments. I didn't "actively" decide I needed a break, I just realized over time that I needed to edit less because I simply didn't have as much free time as usual.

Announcing your Wikibreak

Some people like to announce they're taking a Wikibreak, mostly out of courtesy to others who might wonder why they are slower than normal to reply, or why they aren't around for discussions that would normally interest them. WP:WIKIBREAK contains several examples of templates one might display on their user/user talk pages, such as:

| User:Example is busy in real life and may not respond swiftly to queries. |

| User:Example is away on vacation from 1 December 2022 to late Spring 2023 and may not respond swiftly to queries. |

| User:Example is currently wikibonked and is operating at a lower edit level than usual. Hitting the wall is a temporary condition, and the user should return to normal edit levels in time. |

Some templates offer more insight than others as to the cause of your Wikibreak, but certainly don't feel obliged to divulge more details than you're comfortable with. A generic notice, like:

| User:Example is taking a short wikibreak and will be back on Wikipedia in late July/early August. |

...is perfectly acceptable!

You can also simply take a Wikibreak and not tell anyone. I didn't announce mine, for example, mostly because I realized over time that I needed to pull back, rather than actively making a decision to take a break. That's OK too!

Enforcing the break

Various tools exist to help you maintain discipline during your Wikibreak. Mine was facilitated by some editing habits. I normally edit Wikipedia from my main computer, which consists of a fairly powerful desktop with a dual 27-inch screen setup, a high-end full mechanical keyboard, and a high-end mouse. I won't lie, I'm an extremely privileged editor as far as my setup is concerned. However, when at school, in an entirely different city, I only have access to my laptop, a 13-inch dinosaur from 2013 that struggles to open a PDF, with a crappy laptop keyboard and a crappy external mouse (if I even bother hooking it up), which makes for a decidedly less optimal editing experience. But I have kept this crappy laptop around for the express purpose of having a miserable editing experience on it.

Having a "desktop = Wikipedia, laptop = school" separation helps me tremendously with discipline, and I would highly encourage anyone who struggles to separate work/school from hobbies/home life to have physically distinct setups, one for productivity/work, the other for hobbies. I do recognize that this is not something everyone can afford, in which case I encourage you to find another "mental switch", like "laptop in the kitchen = work/school, laptop in the bedroom = hobbies", that helps you with this separation. Or even simply work from a different side of the table to create some sort of distinction that your mind can latch on to.

For those who can't self-regulate, there is always the WikiBreak Enforcer user script, which prevents you from logging in until a certain date. You can also ask for a block if you'd rather not deal with scripts.

What's next?

There's a saying in the video game industry that a delayed game is eventually good, but a rushed game is forever bad. I would argue the same is true of our work on Wikipedia. Don't put yourself in a crunch situation if you can avoid it. We're all volunteers; if you need to take a week or four to yourself, take the time off! We'll still be here when you return.

For me, my involvement on Wikipedia (and the Signpost) will wax and wane with my workload, which will alternate between classes and work placement every two months until June of next year. But for you, I hope that when you next encounter high levels of Wikistress, either in yourself or in a Wikifriend, you'll keep the Wikibreak in mind as one possible way to get on top of things and avoid a Wikiburnout.

To end things, I'll invite you to share your own Wikibreak story in the comment section! Or if you've never taken a Wikibreak, maybe you can share a moment where, in hindsight, you wish you had taken one.

Tips and Tricks is a general editing advice column written by experienced editors. If you have suggestions for a topic, or want to submit your own advice, follow these links and let us know (or comment below)!

Study deems COVID-19 editors smart and cool, questions of clarity and utility for WMF's proposed "Knowledge Integrity Risk Observatory"

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

"Wikipedia as a trusted method of information assessment during the COVID-19 crisis"

- Reviewed by Piotr Konieczny

This book chapter,[1] unfortunately not yet in open access, provides a good overview of Wikipedia's history and practices, concluding that Wikipedia's coverage of the COVID-19 pandemic is "precise and robust", and that this generated positive coverage of Wikipedia in mainstream media. The author provides an extensive and detailed overview of how Wikipedia volunteers covered the pandemic, and highlights the efforts of the dedicated WikiProject COVID-19 (on English Wikipedia, while also mentioning COVID-19 "portals in several other languages such as French, Spanish, or German"), an offshoot of the larger WikiProject Medicine, as important in creating quality content in this topic area. He also praises Wikipedia editors for their dedication to the fight against fake news and misinformation, and the Wikimedia Foundation's "embrace" of these editors' actions.

One of the most interesting observations made by the author, if somewhat tangential to the main topic, is that Wikipedia "is used [by readers] in ways similar to a news media", which "generates a tension between Wikipedia's original encyclopaedic ambitions and these pressing journalistic tendencies" (an interesting and, in this reviewer's experience, under-researched topic, at least in English; see here for a review of a recent French book on this topic). The author concludes that this somewhat begrudging acceptance of current developments by the Wikipedia community has significantly contributed to making its coverage relevant to the general audience. At the same time, he notes that despite relying on media sources rather than waiting for scholarly coverage, Wikipedia was still able to maintain high quality in its coverage, which the author attributes to editors' reliance on "legacy media outlets, like The New York Times and the BBC".

Wikimedia Foundation builds "Knowledge Integrity Risk Observatory" to enable communities to monitor at-risk Wikipedias

- Reviewed by Tilman Bayer

A paper titled "A preliminary approach to knowledge integrity risk assessment in Wikipedia projects"[2] by two members of the Wikimedia Foundation's research team provides a "taxonomy of knowledge integrity risks in Wikipedia projects [and an] initial set of indicators to be the core of a Wikipedia Knowledge Integrity Risk Observatory." The goal is to "provide Wikipedia communities with an actionable monitoring system." The paper was presented in August 2021 at an academic workshop on misinformation (part of the ACM's KDD conference), as well as at the Wikimania 2021 community conference the same month.

The taxonomy distinguishes between internal and external risks, each divided into further sub-areas (see figure). "Internal" refers "to issues specific to the Wikimedia ecosystem while [external risks] involve activity from other environments, both online and offline."