WMF Terms of Use now in force, new Creative Commons licensing

Terms of Use update: move to CC BY-SA 4.0, adherence to Universal Code of Conduct now an intrinsic part of the ToU

The Wikimedia Foundation has advised the community that the updated Terms of Use are now in force. Key changes include the move to CC BY-SA 4.0 and a requirement to adhere to the Universal Code of Conduct, which now forms part of the Terms of Use for all Wikimedia sites.

WMF Associate General Counsel Jacob Rogers posted the following on the Wikimedia-l mailing list:

Hi everyone,

This announcement is to confirm that the Wikimedia Terms of Use[1] have been updated effective June 7. This follows the end of the spring consultation[2] held between February and April, and approvals recorded by the Executive Director and General Counsel[3] per the delegation of policy-making authority from the Foundation Board of Trustees.[4]

As part of this update, we are also happy to announce that the project license has been upgraded to CC BY-SA 4.0[5]! Technical work has already started on this change.

I’d like to extend one last thank you to everyone who participated in the conversation to help us complete this update to serve the community and the projects going forward!

Best,

Jacob

[1]:

https://foundation.wikimedia.org/wiki/Policy:Terms_of_Use

[2]:

[3]:

https://foundation.wikimedia.org/w/index.php?title=Policy_talk:Terms_of_Use&diff=prev&oldid=260916 and https://foundation.wikimedia.org/w/index.php?title=Policy_talk:Terms_of_Use&diff=prev&oldid=261297

[4]

[5]

https://creativecommons.org/licenses/by-sa/4.0/

-- Jacob Rogers

Associate General Counsel

Wikimedia Foundation

Pronouns: He/him

A diff showing all the Terms of Use changes made this year is here.

WMF emergency emails landing unread in spam folder

The WMF's Jackie Koerner has advised the community that some emergency emails sent to the Wikimedia Foundation appear to end up in a spam folder and may therefore fail to receive a timely response.

As of June 2023, it has come to our attention that some messages sent to emergency@ wound up in our spam folder. This seems to be a backend issue with our email provider and we are currently reviewing the problem. If you do not receive a response to your message within 1 hour, please send a note to ca@wikimedia.org. Thank you.

Corresponding notes were added to Wikipedia:Responding to threats of harm. See also the Village Pump discussion.

"First solo museum exhibition of a Wikipedia photographer" concludes

Last month, the Museum of Northern California Art in Chico, California, concluded what has been described as "the first solo museum exhibition of a Wikipedia photographer." Titled "Northern California on Wikipedia", it featured photos by User:Frank Schulenburg (also known as the owner of the "Wikiphotographer" website and as the Executive Director of the Wiki Education Foundation), taken between 2012 and 2023.

Above: Frank Schulenburg discussing one of the pictures featured in the exhibition, File:Old Crissy Field Coast Guard Station in the fog (December 2015).jpg (below, also a featured picture on Wikimedia Commons)

Brief notes

- Annual Plan: The Foundation's Annual Plan and Budget are due to be approved this week at the 21 June 2023 Board of Trustees meeting. The Board will be joined by staff, representatives from the Movement Charter Drafting Committee, Jon Huggett (governance consultant), and Matt Thompson (facilitator).

- Annual reports: Wikimedia Germany, Wikimedia Czech Republic, Wikimedia Italy

- Articles for Improvement: This week's Article for Improvement (beginning 19 June) is Electroencephalography. It will be followed the week after by Water frame. Please be bold in helping improve these articles!

- New administrators: The Signpost welcomes the English Wikipedia's newest administrator, Novem Linguae.

- Volunteers wanted for talk page conversations study: A Cornell research group is recruiting volunteers for a user study involving a prototype tool called "ConvoWizard" to help Wikipedians have healthier discussions on Talk pages, noticeboards, and other discussion spaces. There is more information about the project at its Meta-wiki project page and a discussion on the Village Pump. Wikipedians who are interested in participating should sign up here.

English WP editor glocked after BLP row on Italian 'pedia

Italian Wikipedia controversy leads to global lock of English Wikipedia contributor's account

Italian daily Il Fatto Quotidiano reports (Google Translation) on what it calls a "bizarre soap opera" surrounding the Italian Wikipedia biography of Alessandro Orsini (it). According to his university website, Orsini is an Associate Professor at LUISS University of Rome, where he teaches General Sociology and Sociology of Terrorism in the Department of Political Science, as well as a long-time (2011–2022) Research Affiliate at the MIT Center for International Studies. He has also written as a journalist for Il Messaggero and Il Fatto Quotidiano.

The dispute on the Italian Wikipedia focused on whether his biography was unduly negative. It led to the global lock of User:Gitz6666, a user with around 7,500 edits and a clean block log on the English Wikipedia, though with some prior blocks on the Italian Wikipedia. Gitz6666 had recently been a party in the World War II and the history of Jews in Poland arbitration case; the case decision contained no findings of fact or remedies concerning them.

According to the Il Fatto Quotidiano article, Gitz6666 and another user, now also blocked, had been defending Orsini, arguing that the Italian Wikipedia biography had become an attack page. The press article agrees with their assessment, pointing out that the biography paints an unbalanced picture of the reception of Orsini's book Anatomy of the Red Brigades (Ithaca: Cornell University Press, 2011). The Italian Wikipedia article, currently protected by an admin, acknowledges that the book won an award but then focuses exclusively on negative reviews, including one published on an ex-terrorist's personal blog, while positive reviews (e.g. [1][2][3][4]) or mixed reviews (e.g. [5][6]) in reliable sources are not represented. The Il Fatto Quotidiano article, written by Lorenzo Giarelli, ties the biography controversy to critical comments Orsini has made about NATO.

The global lock of Gitz6666's account was made by a steward, User:Sakretsu. The rationale given was "violation of the UCoC, threatening and intimidating behaviour". – AK

World Book Encyclopedia, 2023 edition

Like many of us, including Jimmy Wales, Benj Edwards grew up reading the World Book Encyclopedia. His article in Ars Technica, "I just bought the only physical encyclopedia still in print, and I regret nothing", explains that there is still a demand, in the thousands per year, for a print encyclopedia, mostly from schools and public libraries. He even gives a link to the World Book website, where you can buy your own copy for $1,199, and says that he can occasionally find a copy on Amazon for $799. Slightly older editions go for much less.

Advantages of owning your own print encyclopedia include:

Every morning as I wait for the kids to get ready for school, I pull out a random volume and browse. I've refreshed my knowledge on many subjects and enjoy the deliberate stability of the information experience.

Disadvantages include explaining the purchase to your family, and the shark photo on the "spinescape". – S

Holocaust and Polish nationalism: Critic proposes history advisory board

In The Forward, Shira Klein (chair of the Department of History at Chapman University) accuses English Wikipedia of "repeatedly allow[ing] rogue editors to rewrite Holocaust history and make Jews out to be the bad guys", reiterating and expanding her criticism of the recent ArbCom decision in the "World War II and the history of Jews in Poland" case (see also last issue's "In the media"). The case had been prompted by an academic paper (Signpost review) where Klein and Jan Grabowski had identified "dozens of examples of Holocaust distortion which, taken together, advanced a Polish nationalist narrative, whitewashed the role of Polish society in the Holocaust and bolstered harmful stereotypes about Jews." Klein also gave a keynote speech about "Wikipedia's Distortion of Holocaust History" at the Wikihistories conference on June 9.

In her Forward article, Klein argues that "The problem is not the individual arbitrators, nor even ArbCom as a whole; the committee's mandate is to judge conduct, never content. This is a good policy. [...] But this leaves a gaping hole in Wikipedia's security apparatus. Its safeguards only protect us from fake information when enough editors reach a consensus that the information is indeed fake. When an area is dominated by a group of individuals pushing an erroneous point of view, then wrong information becomes the consensus."

Klein proposes that the Wikimedia Foundation (which "boasts an annual revenue of $155 million" and has previously intervened "to stem disinformation in Chinese Wikipedia, Saudi Wikipedia [sic] and Croatian Wikipedia, with excellent results"), "must harness subject-matter experts to assist volunteer editors":

In cases where Wikipedia's internal measures fail repeatedly, the foundation should commission scholars — mainstream scholars who are currently publishing in reputable peer-reviewed presses and work in universities unencumbered by state dictates [apparently a reference to the situation in Poland] — to weigh in. In the case of Wikipedia's coverage of Holocaust history, there is a need for an advisory board of established historians who would be available to advise editors on a source's reliability, or help administrators understand whether a source has been misrepresented. [...] This is no radical departure from Wikipedia's ethos of democratized knowledge that anyone can edit. This is an additional safeguard to ensure Wikipedia's existing content policies are actually upheld.

– H

Tenth Russian fine this year, Foundation sues again

Reuters reports (citing Interfax) that on June 6, a Russian court "fined the Wikimedia Foundation, which owns Wikipedia, 3 million roubles ($36,854) for refusing to delete an article on Ukraine's Azov battalion." According to Interfax, "This is the tenth time Wikipedia has been penalized in 2023 for not removing prohibited information. The ten fines total 15.9 million rubles." (See previous "In the media" about one of these fines that was issued in February: "Russia fines Wikipedia for failing to toe the party line on the Ukraine War".) Separately, on May 29 Interfax also reported that the Foundation was filing lawsuits against the Prosecutor-General's office and Roskomnadzor, "asking the court to invalidate Roskomnadzor's notices about violations of procedures governing dissemination of information on Wikipedia, as well as the Russian Prosecutor General's Office's demands for measures to be taken to restrict user access to this information." In November last year, the Foundation already reported it had several cases "pending before the Russian Courts including an appeal against a verdict where the Foundation was fined a total of 5 million rubles (the equivalent of approximately USD $82,000) for refusing to remove information from several Russian Wikipedia articles." – H

In brief

- "Best ever" citation needed no longer needed: BoingBoing felt certain they had located the best ever use of "[citation needed]" on Wikipedia. It was in the I've got your nose article. However, as might be expected with an encyclopedia updatable in real time, a reliable source was soon found, meaning that the "[citation needed]" tag is no more.

Content, featured

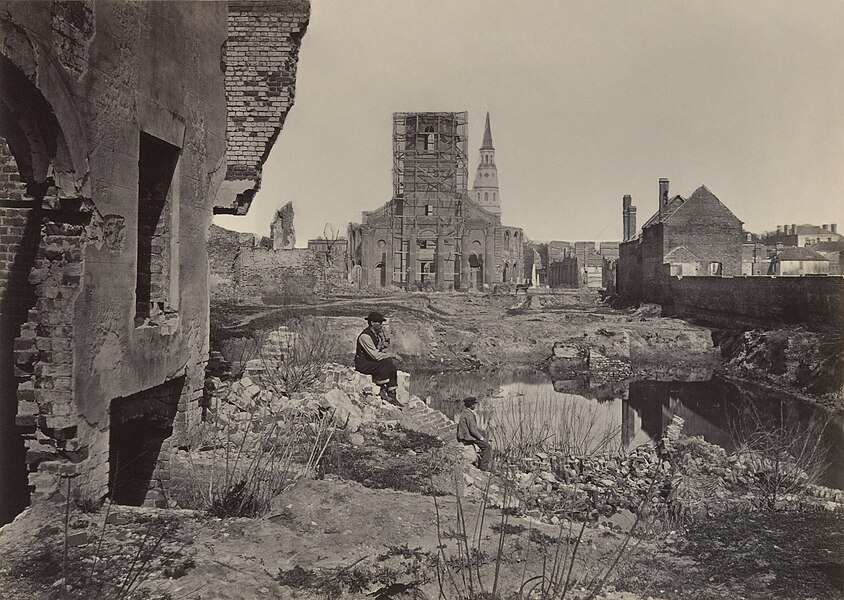

Ruins in Charleston, South Carolina, from Photographic Views of Sherman's Campaign by George N. Barnard, restored by Adam Cuerden, one of our new featured pictures.

Not much to say this week. I rushed it a bit, but, y'know. One does what one can. From past experience, if a featured content item isn't published, it's just spiked; none of the content in it ever gets mentioned in the Signpost and the next issue just carries on with the next batch of content.[1]

Probably best to avoid that.

Featured articles

Sixteen featured articles were promoted this period.

- Thomas A. Spragens, nominated by PCN02WPS
  - Centre College in Kentucky
  - To get Spragens was quite lucky.

- The Dance of the Twisted Bull, nominated by Premeditated Chaos
  - Through the torso of one of his models
  - Alexander McQueen shoved spears.
  - It's just an optical illusion,
  - But not in your worst fears.

- James Madison, nominated by ErnestKrause
  - One of America's founding fathers,
  - His reviews would probably be raves
  - For writing The Federalist Papers
  - If he didn't promote keeping slaves.

- North East MRT line, nominated by ZKang123
  - Want to go to north-east Singapore?
  - Well, that's exactly what this line's for!

- USS Marmora (1862), nominated by Hog Farm
  - A sternwheel steamer that got clad in tin,
  - The American Civil War is the one she fought in.

- Irish nationality law, nominated by Horserice
  - Want to be an Irish citizen? Unless there's a flaw,
  - This article will tell you what sayeth the law.

- Wood River Branch Railroad, nominated by Trainsandotherthings
  - A Rhode Island railway, the article's strong,
  - For a line that was only five point six miles long.

- Cherry Valentine, nominated by Another Believer
  - A drag queen raised as a Traveller, a fact he made clear
  - Though few knew about Travellers LGBT or queer.

- "White Horse" (Taylor Swift song), nominated by Ippantekina
  - Taylor Swift sings that fantasy stories
  - Teach morals that they shouldn't do.
  - She's no princess; her boyfriend's no knight
  - And she shouldn't have believed that's true.

- Coccinellidae, nominated by LittleJerry
  - Ladybird, ladybird fly away home,
  - Your house is on fire, your children will burn.

- Pasqua Rosée, nominated by SchroCat
  - He opened the first coffeeshop in London town
  - What else did he do? It's not written down.

- Untitled Goose Game, nominated by MyCatIsAChonk
  - In a quaint English village,
  - The sons of the tillage
  - Find their problems never cease
  - Because of prankster geese.

- Hungarian nobility, nominated by Borsoka
  - Oh, Hungary,
  - Oh, Hungary!
  - Oh, doughty sons of Hungary!
  - May all success
  - Attend and bless
  - Your warlike ironmongery! —WSG

- 1980 World Snooker Championship, nominated by BennyOnTheLoose
  - Fifty-three entered, though four of them withdrew,
  - Cliff Thorburn's the man that saw the thing through.

- "It's a Wrap", nominated by Heartfox
  - We're sure Mariah Carey will not have got fed up
  - When her old song grew popular once it was sped up.
  - A bit earlier this year, it got a new start
  - When TikTok discovered it, and it entered the chart.

- Weesperplein metro station, nominated by Styyx
  - A metro line was cancelled, and the most
  - That remains is under Weesperplein: A ghost
  - station.

Featured pictures

Nineteen featured pictures were promoted this period, including those at the top and bottom of this article.

-

Amanda Smith by T. B. Latchmore, restored by Adam Cuerden

-

Limes by Ivar Leidus

-

Sculptures at Rani ki Vav by Snehrashmi

-

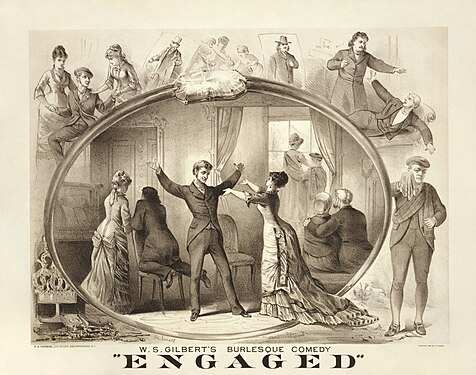

Engaged poster by H. A. Thomas Lith. Studio, restored by Adam Cuerden

Featured lists

- Angel Aquino on screen and stage, nominated by Pseud 14
  - She was in many roles on stage and screen,
  - But by me none of her roles have been seen.
  - I'm sure she's good, but I fear this time,
  - I again have to go with a cop-out rhyme.

- List of Top Selling R&B Singles number ones of 1967, nominated by ChrisTheDude
  - A Billboard chart
  - With Rhythm and Blues heart.

- GLAAD Media Award for Outstanding Limited or Anthology Series, nominated by PanagiotisZois
  - A number of shows for this award vied,
  - A suitable featured list for this month of Pride.

- List of Alexander McQueen collections, nominated by Premeditated Chaos
  - A fashion designer, we talked about him before
  - As such, I think I need not say that much more.

- List of Billboard Tropical Airplay number ones of 1999, nominated by Magiciandude (Erick)
  - A Billboard chart
  - With a tropical heart.

- List of Indianapolis 500 winners, nominated by EnthusiastWorld37
  - Round and round and round they goes,
  - When do they stop? Nobody kno—
  - Oh, after 500 miles. Right. That explains the name.

- List of nitrogen-fixing-clade families, nominated by Dank
  - Not all these fix nitrogen (that's a symbiote in rhizomes)
  - But many flowering plants in this clade make their homes.

- Werner Herzog filmography, nominated by HAL333
  - Famed German director, with many a classic movie,
  - Watching them in celebration would be very groovy.

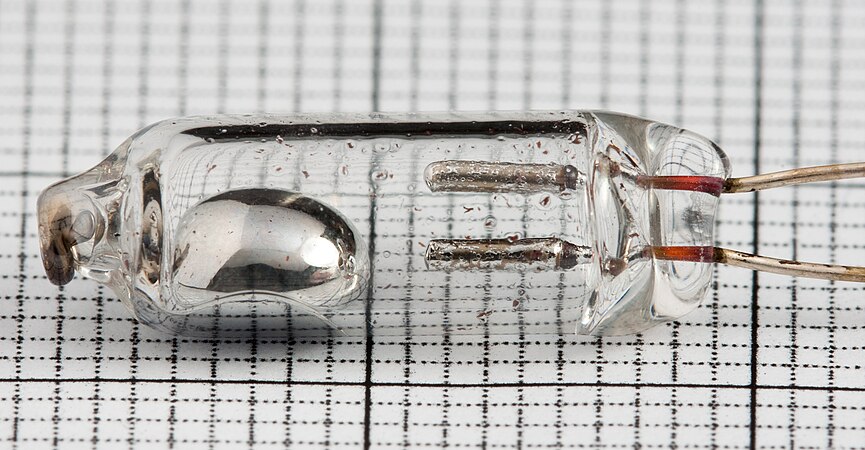

Mercury switch by Medvedev, one of our latest featured pictures.

References

- [1] Editor's note: well, we could have run it in this issue lol.

Hoaxers prefer currently-popular topics

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

New articles about currently popular topics are more likely to be hoaxes

A study titled "The role of online attention in the supply of disinformation in Wikipedia"[1] finds that

[...] compared to legitimate articles created on the same day, hoaxes [on English Wikipedia] tend to be more associated with traffic spikes preceding their creation. This is consistent with the idea that the supply of false or misleading information on a topic is driven by the attention it receives. [... a finding that the authors hope] could help promote the integrity of knowledge on Wikipedia.

The authors remark that "little is known about Wikipedia hoaxes" in the research literature, with only one previous paper focusing on this topic (Kumar et al., who among other findings had reported in 2016 that "while most hoaxes are detected quickly and have little impact on Wikipedia, a small number of hoaxes survive long and are well cited across the Web"). In contrast to that earlier study (which had access to the presumably more extensive new pages patrol data about deleted articles), the authors base their analysis on the list of hoaxes curated by the community.

Before getting to their main research question about the relation between online attention to a topic (operationalized using Wikipedia pageviews) and disinformation, the authors analyze how these confirmed hoax articles (in their initial revision) differ in various content features from a cohort of non-hoax articles started on the same day, concluding that

[...] hoaxes tend to have more plain text than legitimate articles and fewer links to external web pages outside of Wikipedia. This means that non-hoax articles, in general, contain more references to links residing outside Wikipedia. Such behavior is expected as a hoax's author would need to put a significant effort into crafting external resources at which the hoax can point.

In other words, successful hoaxers might be displaying an anti-FUTON bias.

To quantify the attention paid to the topic area that an article pertains to (even before the article is created), the authors use its "wiki-link neighbors":

The presence of a link between two Wikipedia entries is an indication that they are semantically related. Therefore, traffic to these neighbors gives us a rough measure of the level of online attention to a topic before a new [Wikipedia article] is created.

To understand the nature of the relationship between the creation of hoaxes and the attention their respective topics receive, we first seek to quantify the relative volume change [in attention] before and after this creation day. Here, a topic is defined as all of the (non-hoax) neighbors linked within the contents of an article i.e., its (non-hoax) out-links.

This "volume change" is calculated as the change in median pageviews among the neighboring articles, using the timespans from 7 days before and 7 days after the creation of an article, to account for "short spikes in attention and weekly changes in traffic" (a somewhat simplistic way of handling this kind of time-series analysis, compared to some earlier research on Wikipedia traffic). The authors limited themselves to an older pageviews dataset that covers only 2007 to 2016, which reduced their sample for this part of the analysis to 83 hoaxes. Of those, 75 exhibited a greater attention change than their cohort (of non-hoax articles started on the same day). Despite the relatively small sample size, this finding was judged to be statistically significant (more precisely, the authors find "a bootstrapped 95% confidence interval of (0.1227, 0.1234)" for the difference between hoax and non-hoax articles, far away from zero). In conclusion, this "indicates that the generation of hoaxes in Wikipedia is associated with prior consumption of information, in the form of online attention."
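For readers who want a concrete picture of the measure described above, here is a minimal Python sketch. It is not the authors' code, and several details are assumptions made for illustration: the function names, the input layout (a dict mapping each neighbor title to a list of daily pageviews), the choice to take the median across neighbors of each neighbor's 7-day total, the normalization `(before - after) / (before + after)`, and the use of a simple percentile bootstrap of the mean for the confidence interval. The paper's exact formula and resampling scheme may well differ.

```python
import random
from statistics import mean, median


def window_median(neighbor_views, start, end):
    """Median, across neighbors, of each neighbor's total views on days [start, end)."""
    if start < 0:
        raise ValueError("window starts before the first day of data")
    totals = [sum(views[start:end]) for views in neighbor_views.values()]
    return median(totals)


def volume_change(neighbor_views, creation_day, window=7):
    """Relative change in attention around an article's creation day.

    Positive values mean the linked (neighbor) articles drew more traffic
    in the `window` days before creation than in the `window` days after.
    The normalization is an assumption, not taken from the paper.
    """
    before = window_median(neighbor_views, creation_day - window, creation_day)
    after = window_median(neighbor_views, creation_day + 1, creation_day + 1 + window)
    if before + after == 0:
        return 0.0  # no traffic at all: treat as no change
    return (before - after) / (before + after)


def bootstrap_ci(differences, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of `differences`.

    `differences` would hold one value per hoax: its volume change minus
    that of its same-day cohort (a hypothetical pairing for this sketch).
    """
    rng = random.Random(seed)
    n = len(differences)
    means = sorted(mean(rng.choices(differences, k=n)) for _ in range(n_resamples))
    lower = means[int(n_resamples * alpha / 2)]
    upper = means[int(n_resamples * (1 - alpha / 2)) - 1]
    return lower, upper


# Toy data: 15 days of views for three neighbors, article created on day 7.
toy_views = {
    "Neighbor A": [100] * 7 + [0] + [50] * 7,
    "Neighbor B": [200] * 7 + [0] + [100] * 7,
    "Neighbor C": [10] * 15,
}
print(volume_change(toy_views, creation_day=7))
```

In this toy example the neighbors received more traffic before the creation day than after it, so the value is positive. An interval from `bootstrap_ci` that lies entirely above zero would correspond to the kind of result the authors report.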

Other recent publications

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions, whether reviewing or summarizing newly published research, are always welcome.

- Compiled by Andreas Kolbe and Tilman Bayer

"Information literacy in South Korea: similarities and differences between Korean and international students' research trajectories"

From the abstract:[2]

Work on students' information literacy and research trajectories is usually based on studies of Western, English-speaking students. South Korea presents an opportunity to investigate an environment where Internet penetration is very high, but local Internet users operate in a different digital ecosystem than in the West, with services such as Google and Wikipedia being less popular. [...] We find that Korean students use Wikipedia but less so than their peers from other countries, despite their recognition that Wikipedia is more reliable and comprehensive than the alternatives. Their preferences are instead affected by their perception of Wikipedia as providing an inferior user experience and less local content than competing, commercial services, which also benefit from better search engine result placement in Naver, the search engine dominating the Korean market.

"A Wiki-Based Dataset of Military Operations with Novel Strategic Technologies (MONSTr)"

From the abstract:[3]

This paper introduces a comprehensive dataset on the universe of United States military operations from 1989 to 2021 from a single source: Wikipedia. Using automated extraction techniques on its two structured knowledge databases – Wikidata and DBpedia – we uncover information about individual operations within nearly every post-1989 military intervention described in existing academic datasets. The data we introduce offers unprecedented coverage and granularity that enables analysis of myriad factors associated with when, where, and how the United States employs military force. We describe the data collection process, demonstrate its contents and validity, and discuss its potential applications to existing theories about force employment and strategy in war.

"European Wikipedia platforms, sharing economy and national differences in participation: a case study"

From the abstract:[4]

The following exploratory study considers which macro-level factors can lead to the sharing economy being more popular in certain countries and less so in others. An example of commons-based peer production in the form of the level of contributing to Wikipedia in 24 European countries is used as a proxy for participation in the sharing economy. Demographic variables (number of native speakers, a proxy for population) and development ones (Human Development Index-level and internet penetration) are found to be less significant than cultural values (particularly self-expression and secular-rational axes of the Inglehart-Welzel model). Three clusters of countries are identified, with the Scandinavian/Baltic/Protestant countries being roughly five times as productive as the Eastern Europe/Balkans/Orthodox ones.

"Wikipedia, or: the discreet neutralisation of antiracism. A look behind-the-scenes of an encyclopedic giant"

From the abstract[5] (translated):

Journalist and anti-racist activist, known in particular for her work on police crimes, Sihame Assbague has observed the evolution of her own [French] Wikipedia biography. Noticing errors, approximations, sometimes harmless, often intended to harm her, she notes the virtual impossibility of rectifying them — the Wikipedian community asks for sources that are impossible to provide and detractors block her modifications. She then decided to investigate the functioning of the "free encyclopedia", whose watchwords are: freedom, self-management, transparency and neutrality. But are these principles applicable in the same way to the article "Leek" as to the page of an anti-racist campaigner? What does it mean to be "neutral" about "systemic racism" or "anti-white racism"? And who writes these notices? Immerse yourself in the inner workings of the world's largest encyclopedia.

"Between News and History: Identifying Networked Topics of Collective Attention on Wikipedia"

From the abstract:[6]

[...] how is information on and attention towards current events integrated into the existing topical structures of Wikipedia? To address this [question] we develop a temporal community detection approach towards topic detection that takes into account both short term dynamics of attention as well as long term article network structures. We apply this method to a dataset of one year of current events on Wikipedia to identify clusters distinct from those that would be found solely from page view time series correlations or static network structure. We are able to resolve the topics that more strongly reflect unfolding current events vs more established knowledge by the relative importance of collective attention dynamics vs link structures. We also offer important developments by identifying and describing the emergent topics on Wikipedia.

References

- [1] Anis Elebiary and Giovanni Luca Ciampaglia: "The role of online attention in the supply of disinformation in Wikipedia". Proc. of Truth and Trust Online 2022. Also as Elebiary, Anis; Ciampaglia, Giovanni Luca (2023-02-16). "The role of online attention in the supply of disinformation in Wikipedia". arXiv:2302.08576 [cs.CY].

- [2] Konieczny, Piotr; Danabayev, Kakim; Kennedy, Kara; Varpahovskis, Eriks (2023-06-07). "Information literacy in South Korea: similarities and differences between Korean and international students' research trajectories". Asia Pacific Journal of Education: 1–16. doi:10.1080/02188791.2023.2220936. ISSN 0218-8791.

- [3] Gannon, J. Andrés; Chávez, Kerry (2023-05-30). "A Wiki-Based Dataset of Military Operations with Novel Strategic Technologies (MONSTr)". International Interactions. 49 (4): 639–668. doi:10.1080/03050629.2023.2214845. ISSN 0305-0629. S2CID 259005333.

- [4] Konieczny, Piotr (2023-04-08). "European Wikipedia platforms, sharing economy and national differences in participation: a case study". Innovation: The European Journal of Social Science Research: 1–30. doi:10.1080/13511610.2023.2195584. ISSN 1351-1610. S2CID 258048118.

- [5] Assbague, Sihame (October 2022). "Wikipédia, ou la discrète neutralisation de l'antiracisme. Enquête sur la fabrique quotidienne d'un géant encyclopédique" [Wikipedia, or: the discreet neutralisation of antiracism. A look behind-the-scenes of an encyclopedic giant.]. Revue du Crieur. 21 (2): 140–159. doi:10.3917/crieu.021.0140. S2CID 253090137.

- [6] Gildersleve, Patrick; Lambiotte, Renaud; Yasseri, Taha (2022-11-14). "Between News and History: Identifying Networked Topics of Collective Attention on Wikipedia". arXiv:2211.07616 [cs.CY]. ("accepted for publication in Journal of Computational Social Science")